AWS Certified Solutions Architect – Associate Questions and Answers (Dumps and Practice Questions)

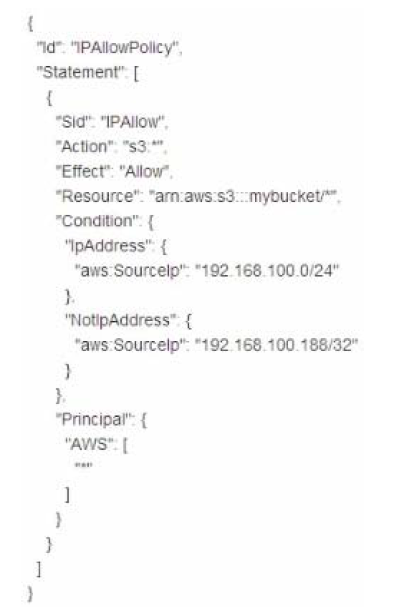

Question : Which of the following statements about this S3 bucket policy is true?

1. Denies the server with the IP address 192.168.100.0 full access to the "mybucket" bucket

2. Denies the server with the IP address 192.168.100.188 full access to the "mybucket" bucket

4. Grants all the servers within the 192.168.100.188/32 subnet full access to the "mybucket" bucket

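The answer options depend on how CIDR notation is read. The policy itself is only available as the image above, so this sketch does not settle the question. It uses Python's standard `ipaddress` module to show that a /32 block covers exactly one address: 192.168.100.188/32 identifies a single server rather than a group of servers, and 192.168.100.0 lies outside it. `addresses_in` and `matches` are hypothetical helper names. `matches` is only a simplified analogue of the source-IP check an IAM `IpAddress` condition on `aws:SourceIp` performs.

```python
import ipaddress


def addresses_in(cidr: str) -> list:
    """Return every address covered by a CIDR block, as dotted-quad strings."""
    return [str(addr) for addr in ipaddress.ip_network(cidr)]


def matches(cidr: str, ip: str) -> bool:
    """True if `ip` falls inside the CIDR block (a simplified source-IP check)."""
    return ipaddress.ip_address(ip) in ipaddress.ip_network(cidr)


if __name__ == "__main__":
    # A /32 prefix fixes all 32 bits, so the block holds exactly one host.
    print(addresses_in("192.168.100.188/32"))               # ['192.168.100.188']
    print(matches("192.168.100.188/32", "192.168.100.188"))  # True
    print(matches("192.168.100.188/32", "192.168.100.0"))    # False
    # For contrast, a /24 leaves 8 host bits: 2**8 = 256 addresses.
    print(ipaddress.ip_network("192.168.100.0/24").num_addresses)  # 256
```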

Question :

With regard to monitoring Amazon EBS volumes with CloudWatch using the 'Detailed' monitoring type, Provisioned IOPS volumes automatically send _____ minute metrics to Amazon CloudWatch.

1. 2

2. 1

4. 10

Correct Answer : 2 (one-minute metrics)

Explanation: CloudWatch metrics are statistical data that you can use to view, analyze, and set alarms on the operational behavior of your volumes.

The following table describes the types of monitoring data available for your Amazon EBS volumes.

| Type | Description |
| --- | --- |
| Basic | Data is available automatically in 5-minute periods at no charge. This includes data for the root device volumes for Amazon EBS-backed instances. |
| Detailed | Provisioned IOPS volumes automatically send one-minute metrics to CloudWatch. |

When you get data from CloudWatch, you can include a Period request parameter to specify the granularity of the returned data. This is different from the period that we use when we collect the data (5-minute periods). We recommend that you specify a period in your request that is equal to or larger than the collection period to ensure that the returned data is valid.
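The Period recommendation can be made concrete with a minimal sketch, assuming Python and the boto3 CloudWatch client. `valid_period` and `ebs_metric_request` are hypothetical helper names. They only build keyword arguments for `get_metric_statistics` (a real CloudWatch API operation), so nothing is sent to AWS. `VolumeReadOps` is one of the metrics in the `AWS/EBS` namespace, and the volume ID is a placeholder. Rounding up to a whole multiple of the collection period, rather than merely to something "equal to or larger", is an assumed design choice that keeps each returned data point aligned with collected buckets.

```python
from datetime import datetime, timedelta, timezone

# Collection periods from the table above, in seconds.
BASIC_COLLECTION_PERIOD = 300    # basic monitoring: 5-minute data
DETAILED_COLLECTION_PERIOD = 60  # Provisioned IOPS volumes: 1-minute data


def valid_period(requested: int, collection_period: int) -> int:
    """Round a requested Period up to a whole multiple of the collection period,
    so the returned data is never finer-grained than what was collected."""
    if requested <= 0:
        raise ValueError("Period must be a positive number of seconds")
    buckets = -(-requested // collection_period)  # ceiling division
    return buckets * collection_period


def ebs_metric_request(volume_id: str, requested_period: int,
                       collection_period: int, hours: int = 1) -> dict:
    """Build keyword arguments for CloudWatch get_metric_statistics (not sent)."""
    end = datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/EBS",
        "MetricName": "VolumeReadOps",
        "Dimensions": [{"Name": "VolumeId", "Value": volume_id}],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": valid_period(requested_period, collection_period),
        "Statistics": ["Sum"],
    }


if __name__ == "__main__":
    # Asking for 1-minute granularity on 5-minute basic data gets rounded up.
    print(valid_period(60, BASIC_COLLECTION_PERIOD))     # 300
    print(valid_period(60, DETAILED_COLLECTION_PERIOD))  # 60
```

With credentials configured, the request could be sent as `boto3.client("cloudwatch").get_metric_statistics(**ebs_metric_request("vol-0123456789abcdef0", 60, BASIC_COLLECTION_PERIOD))`.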

You can get the data using either the Amazon CloudWatch API or the Amazon EC2 console. The console takes the raw data from the Amazon CloudWatch API and displays a series of graphs based on the data. Depending on your needs, you might prefer to use either the data from the API or the graphs in the console.

Question :

When Amazon EBS determines that a volume's data is potentially inconsistent, it disables I/O to the volume from any attached EC2 instances by default. This causes the volume status check to fail, and creates a volume status event that indicates the cause of the failure.

To automatically enable I/O on a volume with potential data inconsistencies, change the setting of the ___________ volume attribute.

1. AutoPermitIO

2. AutoEnableIO

4. AutoAllowIP

Correct Answer : 2 (AutoEnableIO)

Explanation: When Amazon EBS determines that a volume's data is potentially inconsistent, it disables I/O to the volume from any attached EC2 instances by default. This causes the volume status check to fail, and creates a volume status event that indicates the cause of the failure.

To automatically enable I/O on a volume with potential data inconsistencies, change the setting of the AutoEnableIO volume attribute.

Each event includes a start time that indicates the time at which the event occurred, and a duration that indicates how long I/O for the volume was disabled. The end time is added to the event when I/O for the volume is enabled.
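The timing fields of such an event can be modelled with a minimal sketch under stated assumptions. `IoDisabledEvent` is a hypothetical class, not an AWS SDK type. It only illustrates that the duration is measured from the start time, and that an end time exists only after I/O for the volume is enabled again.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class IoDisabledEvent:
    """Hypothetical model of a volume status event's timing fields."""
    start: datetime                  # when I/O to the volume was disabled
    end: Optional[datetime] = None   # added only once I/O is enabled again

    def enable_io(self, when: datetime) -> None:
        """Record the moment I/O for the volume is enabled."""
        self.end = when

    def duration(self, now: Optional[datetime] = None) -> timedelta:
        """How long I/O was disabled (or has been so far, if still disabled)."""
        if self.end is not None:
            return self.end - self.start
        return (now or datetime.now(timezone.utc)) - self.start


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    event = IoDisabledEvent(start=t0)
    print(event.duration(now=t0 + timedelta(minutes=7)))  # 0:07:00, still open
    event.enable_io(t0 + timedelta(minutes=15))
    print(event.end, event.duration())  # end time is now set
```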

If the consistency of a particular volume is not a concern, and you prefer that the volume be made available immediately if it's impaired, you can override the default behavior by configuring the volume to automatically enable I/O. If you enable the AutoEnableIO volume attribute, I/O between the volume and the instance is automatically re-enabled and the volume's status check will pass.
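The attribute change can also be made programmatically. This is a minimal sketch, assuming the AWS SDK for Python (boto3). `set_auto_enable_io` is a hypothetical helper name. `modify_volume_attribute` and `describe_volume_attribute` are the EC2 API operations that set and read the attribute. `FakeEC2` is a hypothetical in-memory stub so the example runs without AWS credentials. With a real account you would pass `boto3.client("ec2")` instead.

```python
def set_auto_enable_io(ec2_client, volume_id: str, enabled: bool = True) -> bool:
    """Set the AutoEnableIO attribute on an EBS volume, then read it back."""
    ec2_client.modify_volume_attribute(
        VolumeId=volume_id,
        AutoEnableIO={"Value": enabled},
    )
    response = ec2_client.describe_volume_attribute(
        VolumeId=volume_id,
        Attribute="autoEnableIO",
    )
    return response["AutoEnableIO"]["Value"]


class FakeEC2:
    """Hypothetical in-memory stand-in for a boto3 EC2 client (offline demo only).
    It mimics just the two calls used above and the response shape read back."""

    def __init__(self):
        self._auto_enable_io = {}

    def modify_volume_attribute(self, VolumeId, AutoEnableIO):
        self._auto_enable_io[VolumeId] = AutoEnableIO["Value"]

    def describe_volume_attribute(self, VolumeId, Attribute):
        # Default False: by default EBS keeps I/O disabled on inconsistent volumes.
        value = self._auto_enable_io.get(VolumeId, False)
        return {"VolumeId": VolumeId, "AutoEnableIO": {"Value": value}}


if __name__ == "__main__":
    client = FakeEC2()  # with AWS credentials: boto3.client("ec2")
    print(set_auto_enable_io(client, "vol-0123456789abcdef0"))  # True
```

Reading the attribute back after setting it is a cautious design choice: it confirms the change took effect rather than trusting the modify call alone.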

Related Questions

Question : You are working with an IT technology blogging company named QuickTechie Inc. They have a huge number of blogs on various technologies. Their infrastructure is built on AWS, using EC2 with a MySQL database installed locally on the same EC2 instance. Your chief architect has reported some performance issues with this architecture, and, as you know, it is not a resilient solution. To solve this problem, you suggested using RDS with a Multi-AZ deployment, which meets the resiliency requirement. Now, with regard to performance, you have been informed that 99% of the time the blogging site is accessed for reading blogs and only 1% of the time for writing and updating them. Which of the following options will help you to increase performance in this scenario?

1. Since you have a Multi-AZ deployment, start writing to the secondary copy whenever a blog is created or updated.

2. You should have a Multi-AZ deployment, with all writes going to the primary database and all reads coming from the secondary database.

4. You should have used a NoSQL database, as NoSQL databases are a good fit for blogging solutions.

Question : You are working at a bank, Arinika Inc. The bank asked you to implement the best solution for commenting and messaging in its mobile applications. You have used DynamoDB to store all these comments and messages. Now, however, the bank wants to run analytics on user transactions, messages and comments to analyze spending behavior. What is your solution for this requirement?

1. You should move these comments and messages to RDS and ask your analytics team to use this database for their analytical queries.

2. You should move the transaction data to Redshift and ask your analytics team to use this database for their analytical queries.

4. You should use RRS to store comments and messages and combine them with the already stored transactions to do analytics.

Question : You work at a financial company that creates indexes based on equity data. Your technical team is in the process of creating a product based on data from a newly acquired vendor, stored in Amazon RDS with automated backups enabled. During the production deployment, you asked the DBA team to create a table using a statement that first drops the table and then creates it. The DBA has dropped the table and created a new, empty one. However, a few moments later you receive a complaint that other applications were reading data from that table and are no longer getting it. This is a very critical production application. How can you and your DBA handle this scenario?

1. As you are using Amazon RDS, you can restore the deleted table within a few moments.

2. You will restore the entire database from a snapshot backup.

4. As Amazon RDS stores backup snapshots in Amazon Glacier, it will take 3-5 hours to retrieve the snapshot, after which the table will be recovered.

Question : You are working as an AWS solutions architect and need a better solution for a mobile wallet to store all transaction data. You have decided to use Amazon RDS, but you need a highly resilient solution. Which of the following RDS database engines support resiliency for your requirement?

1. SQL Server, MySQL, Oracle

2. Oracle, Aurora, SQL Server

4. All databases supported by RDS have Multi-AZ support

Question : You are developing a technology blogging website where …’s of learners will create blogs on a weekly basis. However, millions of users will read this blog website. You have decided to use Amazon RDS, but you are unsure which DB engine would be good for this requirement. Which should you choose?

1. SQL Server, MySQL, Oracle

2. Oracle, Aurora, SQL Server

4. All databases supported by RDS have Multi-AZ support

5. MySQL, MariaDB, Amazon Aurora and PostgreSQL

Question : You work with an e-commerce company that stores its data in an Amazon RDS database. You are at the last stage of productionizing this solution, and for resiliency you have enabled a Multi-AZ deployment. Your chief architect asks whether you have tested failover or simply assumed it will work. You should not assume it will work; you have to test your solution for every scenario. What will you do to test failover in this scenario?

1. As this involves stopping the primary DB instance in one of the Availability Zones, you need to raise a support ticket to stop the primary RDS instance.

2. You have to stop the secondary DB instance, as this does not require an AWS support ticket. You can stop it and test the given scenario.

3. … in background.

4. This scenario cannot practically be tested, as it would require bringing down an entire data center.