Cloudera Hadoop Developer Certification Questions and Answer (Dumps and Practice Questions)

Question : QuickTechie Inc has a log file which is tab-delimited text file. File contains two columns username and loginid

You want use an InputFormat that returns the username as the key and the loginid as the value. Which of the following

is the most appropriate InputFormat should you use?

1. KeyValueTextInputFormat

2. MultiFileInputFormat

3. Access Mostly Uused Products by 50000+ Subscribers

4. SequenceFileInputFormat

5. TextInputFormat

Correct Answer : Get Lastest Questions and Answer :

Explanation: An InputFormat for plain text files. Files are broken into lines. Either linefeed or carriage-return are used to signal end of line. Each line is divided into key and value parts by a separator byte. If no such a byte exists, the key will be the entire line and value will be empty.The KeyValueTextInputFormat parses each line of text as a key, a separator and a value. The default separator is the tab character. In new API (apache.hadoop.mapreduce.KeyValueTextInputFormat) , how to specify separator (delimiter) other than tab(which is default) to separate key and Value.

Sample Input :

one,first line

two,second line

Ouput Required :

Key : one

Value : first line

Key : two

Value : second line

Question : Apache Sqoop(TM) is a tool designed for efficiently transferring bulk data between Apache Hadoop and structured datastores such as relational

databases. You use Sqoop to import a table from your RDBMS into HDFS. You have configured to use 3 mappers in Sqoop, to controll the number of

parallelism and memory in use. Once the table import is finished, you notice that total 7 Mappers have run, there are 7 output files in HDFS,

and 4 of the output files is empty. Why?

1. The table does not have a numeric primary key

2. The table does not have a primary key

3. Access Mostly Uused Products by 50000+ Subscribers

4. The table does not have a unique key

Correct Answer : Get Lastest Questions and Answer :

Explanation: Sqoop imports data in parallel from most database sources. You can specify the number of map tasks (parallel processes) to use to perform the import by using the -m or --num-mappers argument. Each of these arguments takes an integer value which corresponds to the degree of parallelism to employ. By default, four tasks are used. Some databases may see improved performance by increasing this value to 8 or 16. Do not increase the degree of parallelism greater than that available within your MapReduce cluster; tasks will run serially and will likely increase the amount of time required to perform the import. Likewise, do not increase the degree of parallism higher than that which your database can reasonably support. Connecting 100 concurrent clients to your database may increase the load on the database server to a point where performance suffers as a result.

When performing parallel imports, Sqoop needs a criterion by which it can split the workload. Sqoop uses a splitting column to split the workload. By default, Sqoop will identify the primary key column (if present) in a table and use it as the splitting column. The low and high values for the splitting column are retrieved from the database, and the map tasks operate on evenly-sized components of the total range. For example, if you had a table with a primary key column of id whose minimum value was 0 and maximum value was 1000, and Sqoop was directed to use 4 tasks, Sqoop would run four processes which each execute SQL statements of the form SELECT * FROM sometable WHERE id >= lo AND id ( hi, with (lo, hi) set to (0, 250), (250, 500), (500, 750), and (750, 1001) in the different tasks.

If the actual values for the primary key are not uniformly distributed across its range, then this can result in unbalanced tasks. You should explicitly choose a different column with the --split-by argument. For example, --split-by employee_id. Sqoop cannot currently split on multi-column indices. If your table has no index column, or has a multi-column key, then you must also manually choose a splitting column.If some Map task attempts failed, they would be rerun but no data from the failed task attempts would be stored on disk. There is no sqoop.num.maps property. Sqoop typically reads the table in a single transaction, so modifying the data would have no effect; and the HDFS block size is irrelevant to the number of files created. The correct answer is that by default, Sqoop uses the table's primary key to determine how to split the data. If there is no numeric primary key, Sqoop will make a best-guess attempt at how the data is distributed, and may run more than its default configured Mappers, although some may end up not actually reading any data. By default, the import process will use JDBC which provides a reasonable cross-vendor import channel. Some databases can perform imports in a more high-performance fashion by using database-specific data movement tools. For example, MySQL provides the mysqldump tool which can export data from MySQL to other systems very quickly. By supplying the --direct argument, you are specifying that Sqoop should attempt the direct import channel. This channel may be higher performance than using JDBC. Currently, direct mode does not support imports of large object columns.When importing from PostgreSQL in conjunction with direct mode, you can split the import into separate files after individual files reach a certain size. This size limit is controlled with the --direct-split-size argument.By default, Sqoop will import a table named foo to a directory named foo inside your home directory in HDFS. For example, if your username is someuser, then the import tool will write to /user/someuser/foo/(files). You can adjust the parent directory of the import with the --warehouse-dir argument. For example:

$ sqoop import --connnect (connect-str) --table foo --warehouse-dir /shared \

This command would write to a set of files in the /shared/foo/ directory.

Question : In the QuickTechie Inc Hadoop cluster you have defined block size as MB. The input file contains MB of valid input data

and is loaded into HDFS. How many map tasks should run without considering any failure of MapTask during the execution of this job?

1. 1

2. 2

3. Access Mostly Uused Products by 50000+ Subscribers

4. 4

Correct Answer : Get Lastest Questions and Answer :

Explanation: 194/64 = will 3.xxx hence total 4 map task will be executed. 3 for full blcok of 64MB and last one for remaining data.

Watch the training Module 21 from http://hadoopexam.com/index.html/#hadoop-training

Related Questions

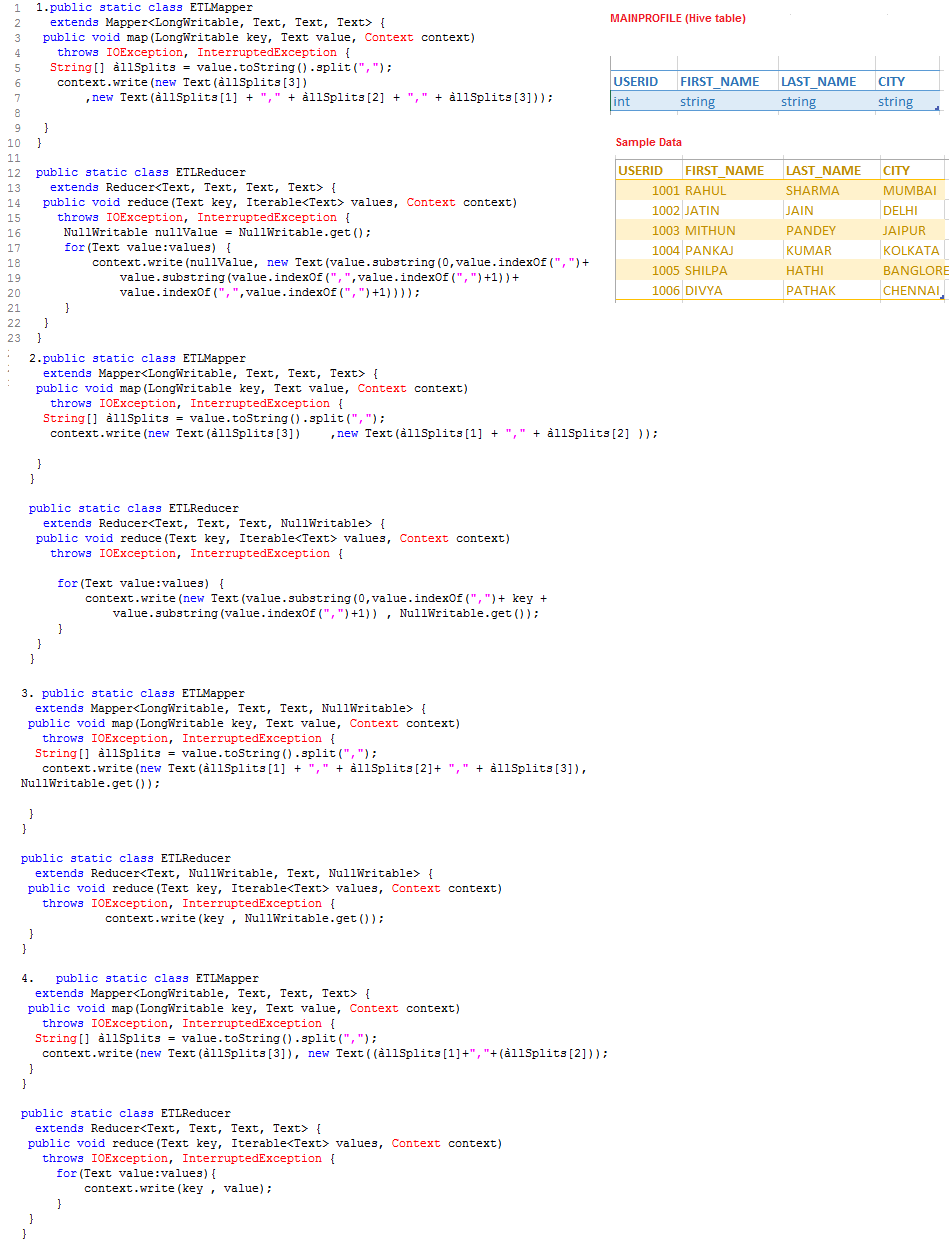

Question :We have extracted the data from

MySQL backend database of QuickTechie.com

website and stored in the

Hive table called MAINPROFILE as shown in image

with the sample data and also shown column datatype.

As this table is created from the data

which is already stored in a

warehouse directory of Hive.

As this table is created from the data

which is already stored in a

warehouse directory of Hive.

Select the correct MapReduce

code which simulate

the following Query in a single file.

SELECT FIRST_NAME,CITY,LAST_NAME

FROM MAINPROFILE

ORDER BY CITY;

1. 1

2. 2

3. Access Mostly Uused Products by 50000+ Subscribers

4. 4

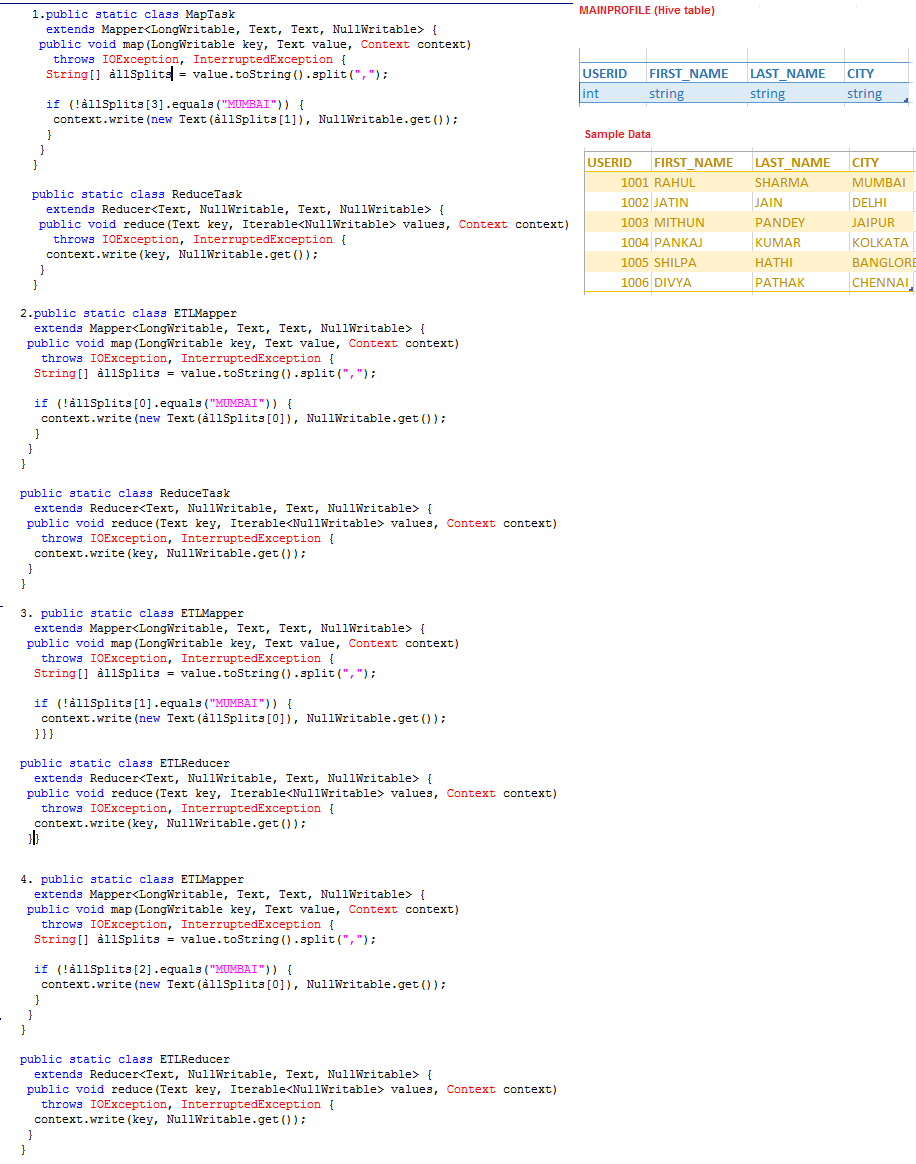

Question : We have extracted the data from

MySQL backend database of QuickTechie.com

website and stored in the

Hive table called MAINPROFILE as shown in image

with the sample data and also shown column datatype.

As this table is created from the data

which is already stored in a

warehouse directory of Hive.

As this table is created from the data

which is already stored in a

warehouse directory of Hive.

Select the correct MapReduce

code which simulate

the following Query in a single file.

SELECT DISTINCT FIRST_NAME

FROM MAINPROFILE

WHERE CITY != "MUMBAI"

1. 1

2. 2

3. Access Mostly Uused Products by 50000+ Subscribers

4. 4

Question : Given this data file: MAIN.PROFILE.log

1 Feeroz Fremon

2 Jay Jaipur

3 Amit Alwar

10 Banjara Banglore

11 Jayanti Jaipur

101 Sehwag Shimla

You write the following Pig script:

users = LOAD 'MAIN.PROFILE.log' AS (userid, username, city);

sortedusers = ORDER users BY id DESC;

DUMP sortedusers;

The output looks like this:

(3,Amit , Alwar)

(2,Jayanti, Jaipur)

(11,Jane, Jaipur)

(101,Sehwag, Shimla)

(10,Banjara, Banglore)

(1,Feeroz, Fremon)

Choose one line which, when modified as shown, would result in the output being displayed in descending ID order

1. DUMP sortedusers;

2. users = LOAD 'MAIN.PROFILE.log' AS (userid:int, usernamename,city);

3. Access Mostly Uused Products by 50000+ Subscribers

Question : QuickTechie Inc has a log file which is tab-delimited text file. File contains two columns username and loginid

You want use an InputFormat that returns the username as the key and the loginid as the value. Which of the following

is the most appropriate InputFormat should you use?

1. KeyValueTextInputFormat

2. MultiFileInputFormat

3. Access Mostly Uused Products by 50000+ Subscribers

4. SequenceFileInputFormat

5. TextInputFormat

Question : Apache Sqoop(TM) is a tool designed for efficiently transferring bulk data between Apache Hadoop and structured datastores such as relational

databases. You use Sqoop to import a table from your RDBMS into HDFS. You have configured to use 3 mappers in Sqoop, to controll the number of

parallelism and memory in use. Once the table import is finished, you notice that total 7 Mappers have run, there are 7 output files in HDFS,

and 4 of the output files is empty. Why?

1. The table does not have a numeric primary key

2. The table does not have a primary key

3. Access Mostly Uused Products by 50000+ Subscribers

4. The table does not have a unique key

Question : In the QuickTechie Inc Hadoop cluster you have defined block size as MB. The input file contains MB of valid input data

and is loaded into HDFS. How many map tasks should run without considering any failure of MapTask during the execution of this job?

1. 1

2. 2

3. Access Mostly Uused Products by 50000+ Subscribers

4. 4