AWS Certified SysOps Administrator - Associate Questions and Answers (Dumps and Practice Questions)

Question : You have a server with a OGB Amazon EBS data volume. The volume is % full. You need to back up the volume at regular intervals and be able to re-create the volume in a new Availability Zone in the shortest time possible. All applications using the volume can be paused for a period of a few minutes with no discernible user impact.

Which of the following backup methods will best fulfill your requirements?

1. Take periodic snapshots of the EBS volume

2. Use a third party Incremental backup application to back up to Amazon Glacier

4. Create another EBS volume in the second Availability Zone attach it to the Amazon EC2 instance, and use a disk manager to mirror the two disks

Correct Answer : 1

Explanation: After writing data to an EBS volume, you can periodically create a snapshot of the volume to use as a baseline for new volumes or for data backup. If you make periodic snapshots of a volume, the snapshots are incremental so that only the blocks on the device that have changed after your last snapshot are saved in the new snapshot. Even though snapshots are saved incrementally, the snapshot deletion process is designed so that you need to retain only the most recent snapshot in order to restore the volume.
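
The incremental behaviour described above can be illustrated with a toy model. This is only a sketch of the idea (reference-counted blocks shared between snapshots), not how Amazon EBS is actually implemented; the class name `SnapshotStore` and its methods are hypothetical.

```python
class SnapshotStore:
    """Toy model of incremental snapshots built on reference-counted blocks."""

    def __init__(self):
        self.blocks = {}      # block id -> [data, reference count]
        self.snapshots = {}   # snapshot name -> {block index: block id}
        self._next_id = 0
        self._last = None     # name of the most recent snapshot

    def snapshot(self, name, volume):
        """Snapshot `volume` (a list of block contents); return blocks newly stored."""
        previous = self.snapshots.get(self._last, {})
        mapping = {}
        new_blocks = 0
        for index, data in enumerate(volume):
            old_id = previous.get(index)
            if old_id is not None and self.blocks[old_id][0] == data:
                block_id = old_id              # unchanged block: reuse it
            else:
                block_id = self._next_id       # changed block: store a new copy
                self._next_id += 1
                self.blocks[block_id] = [data, 0]
                new_blocks += 1
            self.blocks[block_id][1] += 1
            mapping[index] = block_id
        self.snapshots[name] = mapping
        self._last = name
        return new_blocks

    def delete(self, name):
        """Delete a snapshot; free only blocks no other snapshot references."""
        for block_id in self.snapshots.pop(name).values():
            self.blocks[block_id][1] -= 1
            if self.blocks[block_id][1] == 0:
                del self.blocks[block_id]

    def restore(self, name):
        """Rebuild the full volume contents from a single snapshot."""
        mapping = self.snapshots[name]
        return [self.blocks[mapping[i]][0] for i in range(len(mapping))]
```

In this model, deleting an older snapshot leaves the newest one fully restorable because shared blocks are kept until no snapshot references them.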

Snapshots occur asynchronously and the status of the snapshot is pending until the snapshot is complete.

Snapshots that are taken from encrypted volumes are automatically encrypted. Volumes that are created from encrypted snapshots are also automatically encrypted. Your encrypted volumes and any associated snapshots always remain protected. For more information, see Amazon EBS Encryption.

By default, only you can create volumes from snapshots that you own. However, you can choose to share your unencrypted snapshots with specific AWS accounts or make them public. For more information, see Sharing an Amazon EBS Snapshot. Encrypted snapshots cannot be made public, and a snapshot encrypted with the default AWS managed key cannot be shared at all, because that key is specific to your account. A snapshot encrypted with a customer managed key can be shared with specific accounts if you also grant them permission to use the key. If you need to share data from a snapshot encrypted with the default key, you can migrate the data to an unencrypted volume and share a snapshot of that volume. For more information, see Migrating Data.

When a snapshot is created from a volume with an AWS Marketplace product code, the product code is propagated to the snapshot.

You can take a snapshot of an attached volume that is in use. However, snapshots only capture data that has been written to your Amazon EBS volume at the time the snapshot command is issued. This might exclude any data that has been cached by any applications or the operating system. If you can pause any file writes to the volume long enough to take a snapshot, your snapshot should be complete. However, if you can't pause all file writes to the volume, you should unmount the volume from within the instance, issue the snapshot command, and then remount the volume to ensure a consistent and complete snapshot. You can remount and use your volume while the snapshot status is pending.

To create a snapshot for Amazon EBS volumes that serve as root devices, you should stop the instance before taking the snapshot.

Answer 4 is wrong because an EBS volume must be in the same Availability Zone as the EC2 instance it is attached to. You cannot attach an EBS volume that lives in another AZ.
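
The snapshot-then-restore flow from this explanation could look roughly like the sketch below. It assumes `ec2` is a boto3 EC2 client (for example `boto3.client("ec2")`) or any object exposing the same three methods; the function name `restore_in_new_az` and the volume and AZ identifiers in the usage note are hypothetical.

```python
def restore_in_new_az(ec2, volume_id, target_az, description="periodic backup"):
    """Snapshot `volume_id`, wait for it to finish, then create a volume in `target_az`.

    `ec2` is assumed to behave like a boto3 EC2 client.
    """
    snapshot = ec2.create_snapshot(VolumeId=volume_id, Description=description)
    snapshot_id = snapshot["SnapshotId"]

    # Snapshots complete asynchronously, so wait until the status is no longer pending.
    ec2.get_waiter("snapshot_completed").wait(SnapshotIds=[snapshot_id])

    # A snapshot is stored per region, so the new volume can go in any AZ of that region.
    volume = ec2.create_volume(SnapshotId=snapshot_id, AvailabilityZone=target_az)
    return snapshot_id, volume["VolumeId"]
```

A call such as `restore_in_new_az(ec2, "vol-0123456789abcdef0", "us-east-1b")` would return the new snapshot and volume IDs. Pausing writes before the call, as the explanation recommends, is left to the caller.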

Question : Your company is moving towards tracking web page users with a small tracking image loaded on each page. Currently you are serving this image out of US-East, but are starting to get concerned about the time it takes to load the image for users on the west coast.

What are the two best ways to speed up serving this image?

Choose 2 answers

A. Use Route 53's Latency Based Routing and serve the image out of US-West-2 as well as US-East-1

B. Serve the image out through CloudFront

C. Serve the image out of S3 so that it isn't being served off of your web application tier

D. Use EBS PIOPS to serve the image faster out of your EC2 instances

1. A,C

2. C,D

4. A,D

5. A,B

Correct Answer : 5 (A,B)

Explanation: Latency Based Routing (LBR): Run multiple stacks of your application in different EC2 regions around the world. Create LBR records using the Route 53 API or console, and tag each destination endpoint with the EC2 region that it is in. Endpoints can be EC2 instances, Elastic IPs, or ELBs. Route 53 will route end users to the endpoint that provides the lowest latency.

LBR benefits: better performance than running in a single region, improved reliability relative to running in a single region, easier implementation than traditional DNS solutions, and much lower prices than traditional DNS solutions.

© 2011 Amazon.com, Inc. and its affiliates. All rights reserved. May not be copied, modified or distributed in whole or in part without the express consent of Amazon.com, Inc.
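
As a rough illustration of the LBR records described above, the sketch below builds the change batch that Route 53's `ChangeResourceRecordSets` call accepts, with one latency record per region. The helper names, the record name, and the IP addresses (taken from documentation ranges) are hypothetical.

```python
def latency_record_change(name, region, ip_address, ttl=60):
    """Build one UPSERT change for a latency-based A record tagged with `region`."""
    return {
        "Action": "UPSERT",
        "ResourceRecordSet": {
            "Name": name,
            "Type": "A",
            # SetIdentifier must be unique among records sharing the same name and type.
            "SetIdentifier": f"{name}-{region}",
            "Region": region,
            "TTL": ttl,
            "ResourceRecords": [{"Value": ip_address}],
        },
    }


def latency_change_batch(name, endpoints):
    """Build a change batch from a mapping of region -> endpoint IP address."""
    return {
        "Changes": [
            latency_record_change(name, region, ip)
            for region, ip in sorted(endpoints.items())
        ]
    }


batch = latency_change_batch(
    "img.example.com.",
    {"us-east-1": "192.0.2.10", "us-west-2": "198.51.100.20"},
)
```

With boto3, the resulting `batch` would be passed as `ChangeBatch` to `route53.change_resource_record_sets(HostedZoneId=..., ChangeBatch=batch)`; the hosted zone ID is left out here because it is specific to your account.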

Amazon CloudFront is a web service that gives businesses and web application developers an easy and cost effective way to distribute content with low latency and high data transfer speeds. Like other AWS services, Amazon CloudFront is a self-service, pay-per-use offering, requiring no long term commitments or minimum fees. With CloudFront, your files are delivered to end-users using a global network of edge locations.

To use Amazon CloudFront, you:

For static files, store the definitive versions of your files in one or more origin servers. These could be Amazon S3 buckets. For your dynamically generated content that is personalized or customized, you can use Amazon EC2 - or any other web server - as the origin server. These origin servers will store or generate your content that will be distributed through Amazon CloudFront.

Register your origin servers with Amazon CloudFront through a simple API call. This call will return a CloudFront.net domain name that you can use to distribute content from your origin servers via the Amazon CloudFront service. For instance, you can register the Amazon S3 bucket "bucketname.s3.amazonaws.com" as the origin for all your static content and an Amazon EC2 instance "dynamic.myoriginserver.com" for all your dynamic content. Then, using the API or the AWS Management Console, you can create an Amazon CloudFront distribution that might return "abc123.cloudfront.net" as the distribution domain name.

Include the cloudfront.net domain name, or a CNAME alias that you create, in your web application, media player, or website. Each request made using the cloudfront.net domain name (or the CNAME you set up) is routed to the edge location best suited to deliver the content with the highest performance. The edge location will attempt to serve the request with a local copy of the file. If a local copy is not available, Amazon CloudFront will get a copy from the origin. This copy is then available at that edge location for future requests.
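
The edge-location behaviour in the last step (serve a local copy if present, otherwise fetch from the origin and keep the copy) can be sketched as a simple cache. This is a simplified model, not CloudFront's implementation: the `EdgeLocation` class is hypothetical, and cache expiry is deliberately left out.

```python
class EdgeLocation:
    """Toy edge cache: serve local copies, fall back to the origin on a miss."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin   # callable: path -> content
        self._cache = {}
        self.origin_fetches = 0           # how many requests reached the origin

    def get(self, path):
        if path not in self._cache:
            # Cache miss: get a copy from the origin and keep it for future requests.
            self._cache[path] = self._fetch(path)
            self.origin_fetches += 1
        return self._cache[path]


origin = {"/pixel.gif": b"GIF89a"}
edge = EdgeLocation(origin.__getitem__)
first = edge.get("/pixel.gif")    # fetched from the origin
second = edge.get("/pixel.gif")   # served from the edge's local copy
```

Only the first request reaches the origin, which is why a small, frequently requested object such as a tracking image benefits from edge caching.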

Amazon CloudFront employs a network of edge locations that cache copies of popular files close to your viewers. Amazon CloudFront ensures that end-user requests are served by the closest edge location. As a result, requests travel shorter distances to request objects, improving performance. For files not cached at the edge locations, Amazon CloudFront keeps persistent connections with your origin servers so that those files can be fetched from the origin servers as quickly as possible. Finally, Amazon CloudFront uses additional optimizations - e.g. wider TCP initial congestion window - to provide higher performance while delivering your content to viewers.

Question : If you want to launch Amazon Elastic Compute Cloud (EC2) instances and assign each instance a predetermined private IP address, you should:

1. Assign a group or sequential Elastic IP address to the instances

2. Launch the instances in a Placement Group

4. Use standard EC2 instances since each instance gets a private Domain Name Service (DNS) already

5. Launch the instance from a private Amazon Machine Image (AMI)

Explanation: A private IP address is an IP address that's not reachable over the Internet. You can use private IP addresses for communication between instances in the same network (EC2-Classic or a VPC). For more information about the standards and specifications of private IP addresses, go to RFC 1918.

When you launch an instance, we allocate a private IP address for the instance using DHCP. Each instance is also given an internal DNS hostname that resolves to the private IP address of the instance; for example, ip-10-251-50-12.ec2.internal. You can use the internal DNS hostname for communication between instances in the same network, but we can't resolve the DNS hostname outside the network that the instance is in.

An instance launched in a VPC is given a primary private IP address in the address range of the subnet. For more information, see Subnet Sizing in the Amazon VPC User Guide. If you don't specify a primary private IP address when you launch the instance, we select an available IP address in the subnet's range for you. Each instance in a VPC has a default network interface (eth0) that is assigned the primary private IP address. You can also specify additional private IP addresses, known as secondary private IP addresses. Unlike primary private IP addresses, secondary private IP addresses can be reassigned from one instance to another. For more information, see Multiple Private IP Addresses.

For instances launched in EC2-Classic, we release the private IP address when the instance is stopped or terminated. If you restart your stopped instance, it receives a new private IP address.

For instances launched in a VPC, a private IP address remains associated with the network interface when the instance is stopped and restarted, and is released when the instance is terminated.

EC2-Classic : We select a single private IP address for your instance; multiple IP addresses are not supported.

VPC : You can assign multiple private IP addresses to your instance.
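
To make the "specify a primary private IP address when you launch the instance" step concrete, the sketch below prepares arguments for a single-instance launch in a VPC subnet. It assumes the result would be passed to boto3's `ec2.run_instances(**params)`; the helper name `launch_params` and all IDs in the usage note are hypothetical. The reserved-address check reflects the VPC rule that the first four addresses and the last address of each subnet are not assignable.

```python
import ipaddress


def launch_params(image_id, instance_type, subnet_id, subnet_cidr, private_ip):
    """Validate `private_ip` against the subnet and build run_instances arguments."""
    network = ipaddress.ip_network(subnet_cidr)
    address = ipaddress.ip_address(private_ip)
    if address not in network:
        raise ValueError(f"{private_ip} is outside subnet {subnet_cidr}")

    # The first four addresses and the last address of a VPC subnet are reserved.
    offset = int(address) - int(network.network_address)
    if offset < 4 or address == network.broadcast_address:
        raise ValueError(f"{private_ip} is reserved in subnet {subnet_cidr}")

    return {
        "ImageId": image_id,
        "InstanceType": instance_type,
        "SubnetId": subnet_id,
        "PrivateIpAddress": private_ip,
        # A fixed primary address can only belong to one instance per request.
        "MinCount": 1,
        "MaxCount": 1,
    }
```

For example, `launch_params("ami-12345678", "t3.micro", "subnet-1234abcd", "10.0.1.0/24", "10.0.1.25")` returns a dictionary whose `PrivateIpAddress` is `10.0.1.25`, while an address outside `10.0.1.0/24` raises `ValueError` before any API call is made.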

Related Questions

Question : You are designing a system that has a Bastion host. This component needs to be highly available without human intervention.

Which of the following approaches would you select?

1. Run the bastion on two instances, one in each AZ

2. Run the bastion on an active instance in one AZ and have an AMI ready to boot up in the event of failure

4. Configure an ELB in front of the bastion instance

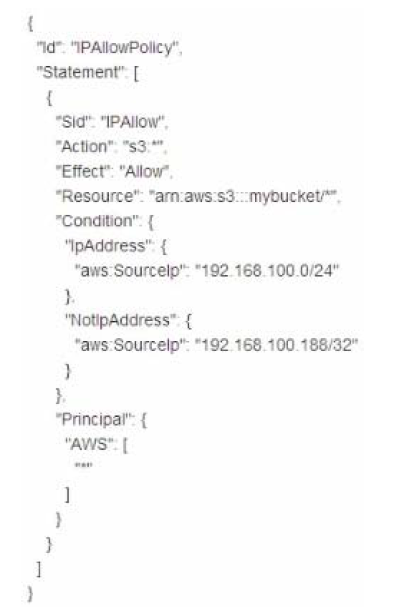

Question : Which of the following statements about this S3 bucket policy is true?

1. Denies the server with the IP address 192.168.100.0 full access to the "mybucket" bucket

2. Denies the server with the IP address 192.168.100.188 full access to the "mybucket" bucket

4. Grants all the servers within the 192.168.100.188/32 subnet full access to the "mybucket" bucket
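
The options above differ mainly in how many addresses a CIDR block covers. The sketch below uses Python's standard `ipaddress` module to show what a given block spans; it does not inspect the policy in the image, and the helper name `describe_cidr` is hypothetical.

```python
import ipaddress


def describe_cidr(cidr):
    """Return (address count, first address, last address) for a CIDR block."""
    network = ipaddress.ip_network(cidr, strict=False)
    return network.num_addresses, str(network[0]), str(network[-1])


single_host = describe_cidr("192.168.100.188/32")
whole_subnet = describe_cidr("192.168.100.0/24")
```

A `/32` block contains exactly one address, so a condition on `192.168.100.188/32` matches a single host rather than a range of servers, whereas `192.168.100.0/24` spans 256 addresses.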

Question : Which of the following requires a custom CloudWatch metric to monitor?

1. Data transfer of an EC2 instance

2. Disk usage activity of an EC2 instance

4. CPU Utilization of an EC2 instance

Question : You run a web application where web servers on EC2 instances are in an Auto Scaling group. Monitoring over the last months shows that web servers are necessary to handle the minimum load. During the day up to 12 servers are needed. Five to six days per year, the number of web servers required might go up to 15.

What would you recommend to minimize costs while being able to provide high availability?

1. 6 Reserved instances (heavy utilization), 6 Reserved instances (medium utilization), rest covered by On-Demand instances

2. 6 Reserved instances (heavy utilization), 6 On-Demand instances, rest covered by Spot instances

4. 6 Reserved instances (heavy utilization), 6 Reserved instances (medium utilization), rest covered by Spot instances

Question : You have been asked to propose a multi-region deployment of a web-facing application where a controlled portion of your traffic is being processed by an alternate region. Which configuration would achieve that goal?

1. Route 53 record sets with weighted routing policy

2. Route 53 record sets with latency based routing policy

4. Elastic Load Balancing with health checks enabled

Question : You have set up individual AWS accounts for each project. You have been asked to make sure your AWS infrastructure costs do not exceed the budget set per project for each month. Which of the following approaches can help ensure that you do not exceed the budget each month?

1. Consolidate your accounts so you have a single bill for all accounts and projects

2. Set up Auto Scaling with CloudWatch alarms using SNS to notify you when you are running too many instances in a given account

3. ... occurring when the amount for each resource tagged to a particular project matches the budget allocated to the project.

4. Set up CloudWatch billing alerts for all AWS resources used by each account, with email notifications when it hits 50%, 80%, and 90% of its budgeted monthly spend
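
The billing alerts described in option 4 could be defined along the lines of the sketch below, which builds one alarm definition per threshold on the `EstimatedCharges` metric in the `AWS/Billing` namespace. The helper name, project name, SNS topic ARN, and the six-hour period are assumptions for illustration; each dictionary is shaped for boto3's `cloudwatch.put_metric_alarm(**params)`.

```python
def billing_alarm_params(project, monthly_budget_usd, sns_topic_arn,
                         percentages=(50, 80, 90)):
    """Build one CloudWatch alarm definition per budget percentage."""
    alarms = []
    for pct in percentages:
        alarms.append({
            "AlarmName": f"{project}-billing-{pct}pct",
            "Namespace": "AWS/Billing",
            "MetricName": "EstimatedCharges",
            "Dimensions": [{"Name": "Currency", "Value": "USD"}],
            "Statistic": "Maximum",
            "Period": 21600,               # assumed evaluation window: six hours
            "EvaluationPeriods": 1,
            "Threshold": monthly_budget_usd * pct / 100,
            "ComparisonOperator": "GreaterThanOrEqualToThreshold",
            # The SNS topic is assumed to have email subscriptions.
            "AlarmActions": [sns_topic_arn],
        })
    return alarms


alarms = billing_alarm_params(
    "project-a", 1000, "arn:aws:sns:us-east-1:123456789012:billing-alerts"
)
```

Billing metrics are published in the US East (N. Virginia) region and only after billing alerts are enabled for the account, so the alarms would be created with a CloudWatch client for `us-east-1` in each project's account.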