AWS Certified Solutions Architect - Professional Questions and Answers (Dumps and Practice Questions)

Question : You are designing a multi-platform web application for AWS. The application will run on EC2 instances and will be accessed from PCs, tablets, and smartphones. Supported accessing platforms are Windows, macOS, iOS, and Android. Separate sticky session and SSL certificate setups are required for different platform types. Which of the following describes the most cost-effective and performance-efficient architecture setup?

1. Set up a hybrid architecture to handle session state and SSL certificates on-premises, with separate EC2 instance groups running web applications for different platform types in a VPC.

2. Set up one ELB for all platforms to distribute load among multiple instances under it. Each EC2 instance implements all functionality for a particular platform.

3. …

ELB handles session stickiness for all platforms. For each ELB, run separate EC2 instance groups to handle the web application for each platform.

4. Assign multiple ELBs to an EC2 instance or group of EC2 instances running the common components of the web application, one ELB for each platform type. Session stickiness and SSL termination are done at the ELBs.

Answer: 4

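Explanation: Each platform type needs its own SSL certificate setup, and in the Classic ELB model assumed here a load balancer listener presents a single certificate, so one ELB per platform is needed. Placing those ELBs in front of shared instances that run the common components avoids paying for duplicate instance groups.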

Question : You are implementing a URL whitelisting system for a company that wants to restrict outbound HTTPS connections to specific domains from their EC2-hosted applications. You deploy a single EC2 instance running proxy software and configure it to accept traffic from all subnets and EC2 instances in the VPC. You configure the proxy to only pass through traffic to domains that you define in its whitelist configuration. You have a nightly maintenance window of 10 minutes during which all instances fetch new software updates. Each update is about 200 MB in size, and there are 500 instances in the VPC that routinely fetch updates. After a few days, you notice that some machines are failing to successfully download some, but not all, of their updates within the maintenance window. The download URLs used for these updates are correctly listed in the proxy's whitelist configuration, and you are able to access them manually using a web browser on the instances. What might be happening? (Choose 2 answers)

A. You are running the proxy on an undersized EC2 instance type so network throughput is not sufficient for all instances to download their updates in time.

B. You have not allocated enough storage to the EC2 instance running the proxy, so the network buffer is filling up, causing some requests to fail.

C. You are running the proxy in a public subnet but have not allocated enough EIPs to support the needed network throughput through the Internet Gateway (IGW)

D. You are running the proxy on an EC2 instance in a private subnet, and its network throughput is being throttled by a NAT running on an undersized EC2 instance.

E. The route table for the subnets containing the affected EC2 instances is not configured to direct network traffic for the software update locations to the proxy.

1. A,B

2. A,D

3. …

4. D,E

Answer: 2

Explanation: Amazon offers a range of instance types with varying amounts of memory and CPU. What is not well documented, however, is network capability, which is simply categorized as Low, Moderate, High, and 10Gb. Based on our experiments running Aerospike servers on AWS and iperf runs on AWS, we were able to better define these categories as the following numbers:

Low - Up to 100 Mbps

Moderate - 100 Mbps to 300 Mbps

High - 100 Mbps to 1.86 Gbps

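10Gb - Up to 8.86 Gbps

Both correct answers describe a network-throughput bottleneck: in A the single proxy instance is too small to carry all of the update traffic, and in D the proxy's outbound traffic is throttled by an undersized NAT instance. Either explains why some downloads finish and others do not, while manual access to the same URLs still works.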
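As a rough check of why throughput is the culprit, the figures in the question can be turned into a required bandwidth. This sketch assumes decimal megabytes, assumes every instance downloads its full update inside the same 10-minute window, and ignores TCP/TLS overhead; the category caps are the upper bounds from the list above, and `required_mbps` is a hypothetical helper.

```python
# Back-of-the-envelope check: aggregate bandwidth the single proxy must sustain
# during the nightly window, using the figures from the question.
INSTANCES = 500          # instances fetching updates
UPDATE_MB = 200          # size of each update, in megabytes (decimal, 10^6 bytes)
WINDOW_S = 10 * 60       # 10-minute maintenance window, in seconds


def required_mbps(instances: int, update_mb: float, window_s: float) -> float:
    """Megabits per second needed to move every update through one proxy in time."""
    total_megabits = instances * update_mb * 8   # MB -> Mb
    return total_megabits / window_s


# Upper bounds of the measured network categories listed above, in Mbps.
CATEGORY_MAX_MBPS = {"Low": 100, "Moderate": 300, "High": 1860, "10Gb": 8860}

need = required_mbps(INSTANCES, UPDATE_MB, WINDOW_S)
print(f"required: {need:.0f} Mbps")
for name, cap in CATEGORY_MAX_MBPS.items():
    print(f"{name:>8}: {'enough' if cap >= need else 'too slow'}")
```

Under these assumptions the proxy has to carry roughly 1.3 Gbps, beyond the Low and Moderate figures and near the top of the High range, so any undersized hop (the proxy itself or a NAT in front of it) would cause some downloads to miss the window.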

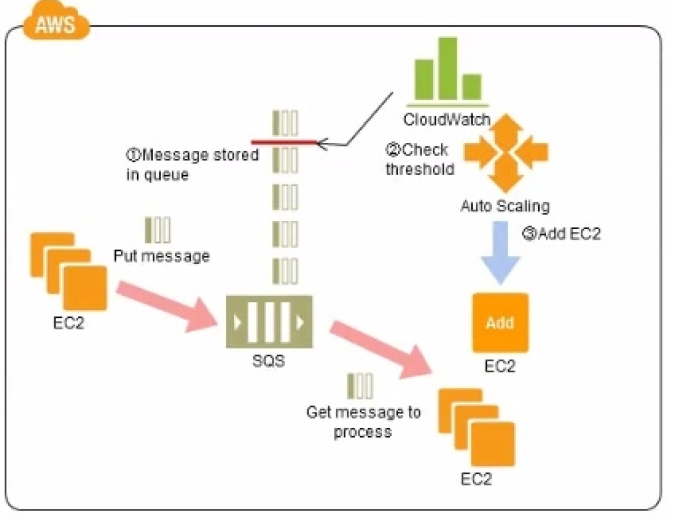

Question : Refer to the architecture diagram above of a batch processing solution using Simple Queue Service (SQS) to set up a message queue between EC2 instances, which are used as batch processors. CloudWatch monitors the number of job requests (queued messages), and an Auto Scaling group adds or deletes batch servers automatically based on parameters set in CloudWatch alarms. You can use this architecture to implement which of the following features in a cost-effective and efficient manner?

1. Reduce the overall time for executing jobs through parallel processing by allowing a busy EC2 instance that receives a message to pass it to the next instance in a daisy-chain setup.

2. Implement fault tolerance against EC2 instance failure, since messages would remain in SQS and work can continue upon recovery of EC2 instances. Implement fault tolerance against SQS failure by backing up messages to S3.

3. …

4. Coordinate the number of EC2 instances with the number of job requests automatically, thus improving cost effectiveness.

5. Handle high priority jobs before lower priority jobs by assigning a priority metadata field to SQS messages.

Answer: 4

Explanation: This architecture enables Auto Scaling and parallel processing driven by the length of the queue. Amazon Simple Queue Service (Amazon SQS) is a scalable message queuing system that stores messages as they travel between various components of your application architecture. Amazon SQS enables web service applications to quickly and reliably queue messages that are generated by one component and consumed by another component. A queue is a temporary repository for messages that are awaiting processing.
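Scaling the batch fleet on queue length can be illustrated with a small sizing rule. This is a sketch only: `desired_capacity`, `msgs_per_instance`, and the group bounds are hypothetical names, not AWS APIs. In practice the same decision is usually expressed as a CloudWatch alarm on the queue's `ApproximateNumberOfMessagesVisible` metric that triggers an Auto Scaling policy.

```python
import math


def desired_capacity(queue_depth: int, msgs_per_instance: int,
                     min_size: int, max_size: int) -> int:
    """Hypothetical helper: size the batch fleet to the SQS backlog.

    Each instance is assumed to handle `msgs_per_instance` queued jobs per
    scaling period; the result is clamped to the Auto Scaling group's bounds.
    """
    if msgs_per_instance <= 0:
        raise ValueError("msgs_per_instance must be positive")
    wanted = math.ceil(queue_depth / msgs_per_instance)
    return max(min_size, min(max_size, wanted))


# Empty queue -> shrink to the minimum; a large backlog -> cap at the maximum.
for depth in (0, 45, 250, 10_000):
    print(depth, "->", desired_capacity(depth, msgs_per_instance=50, min_size=1, max_size=20))
```

Clamping to `min_size` and `max_size` mirrors how an Auto Scaling group never leaves its configured bounds; tying capacity to the backlog is where the cost saving comes from, since idle instances are removed when the queue drains.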

Related Questions

Question : You are responsible for a legacy web application whose server environment is approaching end of life. You would like to migrate this application to AWS as quickly as possible, since the application environment currently has the following limitations:

The VM's single 10GB VMDK is almost full.

The virtual network interface still uses the 10Mbps driver, which leaves your 100Mbps WAN connection completely underutilized.

It is currently running on a highly customized Windows VM within a VMware environment.

You do not have the installation media.

This is a mission-critical application with an RTO (Recovery Time Objective) of 8 hours and an RPO (Recovery Point Objective) of 1 hour. How could you best migrate this application to AWS while meeting your business continuity requirements?

1. Use the EC2 VM Import Connector for vCenter to import the VM into EC2.

2. Use Import/Export to import the VM as an EBS snapshot and attach to EC2.

3. …

4. Use the ec2-bundle-instance API to import an image of the VM into EC2.
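To put the RTO in perspective, here is a quick estimate of how long one copy of the 10 GB disk takes over each link. It is a sketch assuming decimal gigabytes, full line rate, and no protocol overhead or retries; `transfer_hours` is a hypothetical helper.

```python
def transfer_hours(size_gb: float, link_mbps: float) -> float:
    """Hours to copy `size_gb` gigabytes (decimal) over a `link_mbps` link at full rate."""
    megabits = size_gb * 1000 * 8   # GB -> MB -> Mb
    return megabits / link_mbps / 3600


VMDK_GB = 10
for label, mbps in (("10 Mbps driver", 10), ("100 Mbps WAN", 100)):
    print(f"{label}: {transfer_hours(VMDK_GB, mbps):.2f} h")
```

Even at the 10 Mbps driver rate a single copy takes a little over two hours, inside the 8-hour RTO on paper, while the 100 Mbps WAN would cut that to roughly 13 minutes if the VM could use it. Retries or repeated transfers would eat into that margin.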

Question : You are migrating a legacy client-server application to AWS. The application responds to a specific DNS domain (e.g., www.example.com) and has a 2-tier architecture, with multiple application servers and a database server. Remote clients use TCP to connect to the application servers. The application servers need to know the IP address of the clients in order to function properly and are currently taking that information from the TCP socket. A Multi-AZ RDS MySQL instance will be used for the database.

During the migration you can change the application code, but you have to file a change request.

How would you implement the architecture on AWS in order to maximize scalability and high availability?

1. File a change request to implement Proxy Protocol support in the application. Use an ELB with a TCP listener and Proxy Protocol enabled to distribute load on two application servers in different AZs.

2. File a change request to implement Cross-Zone support in the application. Use an ELB with a TCP listener and Cross-Zone Load Balancing enabled, with two application servers in different AZs.

3. …

Use Route 53 with Latency Based Routing enabled to distribute load on two application servers in different AZs.

4. File a change request to implement Alias Resource support in the application. Use a Route 53 Alias Resource Record to distribute load on two application servers in different AZs.
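Option 1 relies on Proxy Protocol. With it enabled on a TCP listener, the load balancer prepends a one-line text header carrying the original client address before the client's data, so the application must read that line instead of trusting the socket peer address (which would be the load balancer). The sketch below parses the human-readable version 1 header as described in the HAProxy PROXY protocol specification; `parse_proxy_v1` and `ProxyHeader` are hypothetical names, and the addresses are documentation ranges.

```python
import ipaddress
from typing import NamedTuple, Optional


class ProxyHeader(NamedTuple):
    client_ip: str
    client_port: int
    dest_ip: str
    dest_port: int


def parse_proxy_v1(line: bytes) -> Optional[ProxyHeader]:
    """Parse a PROXY protocol v1 header line such as
    b"PROXY TCP4 198.51.100.22 203.0.113.7 35646 80\\r\\n".

    Returns None for "PROXY UNKNOWN" (no client address supplied) and raises
    ValueError for anything that is not a well-formed v1 header.
    """
    if len(line) > 107 or not line.endswith(b"\r\n"):
        raise ValueError("not a PROXY v1 header line")
    parts = line[:-2].decode("ascii").split(" ")
    if parts[0] != "PROXY":
        raise ValueError("missing PROXY signature")
    if len(parts) >= 2 and parts[1] == "UNKNOWN":
        return None
    if len(parts) != 6 or parts[1] not in ("TCP4", "TCP6"):
        raise ValueError("malformed PROXY v1 header")
    _, proto, src, dst, sport, dport = parts
    # The address family must match the declared protocol.
    want = 4 if proto == "TCP4" else 6
    for addr in (src, dst):
        if ipaddress.ip_address(addr).version != want:
            raise ValueError("address family does not match protocol")
    return ProxyHeader(src, int(sport), dst, int(dport))


hdr = parse_proxy_v1(b"PROXY TCP4 198.51.100.22 203.0.113.7 35646 80\r\n")
print(hdr.client_ip, hdr.client_port)
```

In a real server this line would be read from the accepted connection before any application data, and the parsed `client_ip` would replace the address previously taken from the socket.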

Question : Your department creates regular analytics reports from your company's log files. All log data is collected in Amazon S3 and processed by daily Amazon Elastic MapReduce (EMR) jobs that generate daily PDF reports and aggregated tables in CSV format for an Amazon Redshift data warehouse. Your CFO requests that you optimize the cost structure for this system. Which of the following alternatives will lower costs without compromising average performance of the system or data integrity for the raw data?

1. Use Reduced Redundancy Storage (RRS) for PDF and CSV data in Amazon S3. Add Spot Instances to Amazon EMR jobs. Use Reserved Instances for Amazon Redshift.

2. Use Reduced Redundancy Storage (RRS) for all data in S3. Use a combination of Spot Instances and Reserved Instances for Amazon EMR jobs. Use Reserved Instances for Amazon Redshift.

3. …

4. Use Reduced Redundancy Storage (RRS) for PDF and CSV data in S3. Add Spot Instances to EMR jobs. Use Spot Instances for Amazon Redshift.

Question : You are the new IT architect in a company that operates a mobile sleep tracking application. When activated at night, the mobile app sends collected data points of 1 kilobyte every 5 minutes to your backend. The backend takes care of authenticating the user and writing the data points into an Amazon DynamoDB table. Every morning, you scan the table to extract and aggregate last night's data on a per-user basis, and store the results in Amazon S3. Users are notified via Amazon SNS mobile push notifications that new data is available, which is parsed and visualized by the mobile app. Currently you have around 100k users who are mostly based out of North America. You have been tasked to optimize the architecture of the backend system to lower cost. What would you recommend? (Choose 2 answers)

A. Create a new Amazon DynamoDB Table each day and drop the one for the previous day after its data is on Amazon S3.

B. Have the mobile app access Amazon DynamoDB directly instead of JSON files stored on Amazon S3.

C. Introduce an Amazon SQS queue to buffer writes to the Amazon DynamoDB table and reduce provisioned write throughput.

D. Introduce Amazon ElastiCache to cache reads from the Amazon DynamoDB table and reduce provisioned read throughput.

E. Write data directly into an Amazon Redshift cluster replacing both Amazon DynamoDB and Amazon S3.

1. A,B

2. B,C

3. …

4. A,C
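Several options target the DynamoDB write load, so it helps to size it. This sketch assumes all 100k users are tracking at the same time, writes are spread evenly over each 5-minute interval, each data point fits in one 1 KB item (one write capacity unit per write), and a hypothetical 8-hour night; none of these figures beyond the question's own are given in the source.

```python
import math

USERS = 100_000
ITEM_KB = 1              # each data point is 1 KB
INTERVAL_S = 5 * 60      # one data point every 5 minutes
NIGHT_HOURS = 8          # assumption: length of a tracked night
WCU_PER_WRITE = 1        # one WCU covers one write/second of an item up to 1 KB

# Average write rate if every user is active and writes are evenly spread.
writes_per_second = USERS / INTERVAL_S
sustained_wcu = math.ceil(writes_per_second * WCU_PER_WRITE)

# Volume of items written during one night.
points_per_user = NIGHT_HOURS * 3600 // INTERVAL_S
nightly_items = USERS * points_per_user
nightly_gb = nightly_items * ITEM_KB / 1_000_000   # KB -> GB (decimal)

print(f"average writes: {writes_per_second:.1f}/s -> about {sustained_wcu} WCU")
print(f"items per night: {nightly_items:,} (~{nightly_gb:.1f} GB)")
```

An SQS buffer (option C) would let the table be provisioned near this average rather than for bursts, and a per-night table (option A) would keep the morning scan limited to roughly one night's items. Real items also carry key and attribute names, so a nominal 1 KB point may round up to 2 WCUs.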

Question : A benefits enrollment company is hosting a -tier web application running in a VPC on AWS, which includes a NAT (Network Address Translation) instance in the public web tier. There is enough provisioned capacity for the expected workload for the new fiscal year benefit enrollment period plus some extra overhead. Enrollment proceeds nicely for two days, and then the web tier becomes unresponsive. Upon investigation using CloudWatch and other monitoring tools, it is discovered that there is an extremely large and unanticipated amount of inbound traffic coming from a set of 15 specific IP addresses over port 80 from a country where the benefits company has no customers. The web tier instances are so overloaded that benefit enrollment administrators cannot even SSH into them. Which activity would be useful in defending against this attack?

1. Create a custom route table associated with the web tier and block the attacking IP addresses from the IGW (Internet Gateway).

2. Change the EIP (Elastic IP Address) of the NAT instance in the web tier subnet and update the Main Route Table with the new EIP.

3. …

4. Create an inbound NACL (Network Access Control List) associated with the web tier subnet with deny rules to block the attacking IP addresses.
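Option 4 works because NACL rules are evaluated in ascending rule-number order and the first match decides, so low-numbered DENY rules for the attacking addresses take effect before a broader ALLOW; security groups, by contrast, only support allow rules. The following is a simplified model of that evaluation, not the AWS implementation: it ignores protocols, port ranges, and the fact that NACLs are stateless, and the addresses and rule numbers are hypothetical stand-ins for the 15 attacking IPs.

```python
import ipaddress
from typing import List, NamedTuple


class NaclRule(NamedTuple):
    number: int        # rules are evaluated in ascending rule-number order
    cidr: str          # source CIDR block
    port: int          # destination port (single port, for simplicity)
    allow: bool        # True = ALLOW, False = DENY


def evaluate_inbound(rules: List[NaclRule], src_ip: str, port: int) -> bool:
    """Return True if traffic is allowed. The first matching rule wins; if no
    rule matches, the implicit final '*' rule denies the traffic."""
    addr = ipaddress.ip_address(src_ip)
    for rule in sorted(rules, key=lambda r: r.number):
        if addr in ipaddress.ip_network(rule.cidr) and rule.port == port:
            return rule.allow
    return False


attackers = ["203.0.113.10", "203.0.113.11"]   # hypothetical stand-ins for the 15 IPs
rules = [NaclRule(10 + i, f"{ip}/32", 80, False) for i, ip in enumerate(attackers)]
rules.append(NaclRule(100, "0.0.0.0/0", 80, True))  # allow everyone else on port 80

print(evaluate_inbound(rules, "203.0.113.10", 80))   # attacker
print(evaluate_inbound(rules, "198.51.100.5", 80))   # legitimate client
```

Because the deny rules are numbered below the catch-all allow, attack traffic is dropped at the subnet boundary before it reaches the overloaded web tier instances.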

Question : You have launched an EC2 instance with four (4) … GB EBS Provisioned IOPS volumes attached. The EC2 instance is EBS-Optimized and supports … Mbps throughput between EC2 and EBS. The four EBS volumes are configured as a single RAID 0 device, and each Provisioned IOPS volume is provisioned with 4,000 IOPS (4,000 16 KB reads or writes) for a total of 16,000 random IOPS on the instance. The EC2 instance initially delivers the expected 16,000 IOPS random read and write performance. Some time later, in order to increase the total random I/O performance of the instance, you add an additional two 500 GB EBS Provisioned IOPS volumes to the RAID. Each volume is provisioned to 4,000 IOPS like the original four, for a total of 24,000 IOPS on the EC2 instance. Monitoring shows that the EC2 instance CPU utilization increased from 50% to 70%, but the total random IOPS measured at the instance level does not increase at all. What is the problem and a valid solution?

1. Larger storage volumes support higher Provisioned IOPS rates: increase the provisioned volume storage of each of the 6 EBS volumes to 1TB.

2. The EBS-Optimized throughput limits the total IOPS that can be utilized; use an EBS-Optimized instance that provides larger throughput.

3. …

4. RAID 0 only scales linearly to about 4 devices; use RAID 0 with 4 EBS Provisioned IOPS volumes, but increase each Provisioned IOPS EBS volume to 6,000 IOPS.

5. The standard EBS instance root volume limits the total IOPS rate; change the instance root volume to also be a 500 GB 4,000 Provisioned IOPS volume.
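The symptom in the question (more volumes, more CPU, no more IOPS) points at a throughput ceiling between the instance and EBS. Multiplying IOPS by the 16 KB I/O size shows how much bandwidth each configuration needs. This sketch assumes 1 KB = 1,024 bytes and ignores protocol overhead; the EBS-Optimized link rate is missing from this copy of the question, so it is not compared here, and `iops_to_mbps` is a hypothetical helper.

```python
IO_SIZE_KB = 16            # I/O size used in the question (16 KB per operation)


def iops_to_mbps(iops: int, io_size_kb: int = IO_SIZE_KB) -> float:
    """Megabits per second needed to carry `iops` operations of `io_size_kb` KiB."""
    bytes_per_s = iops * io_size_kb * 1024
    return bytes_per_s * 8 / 1_000_000


for volumes in (4, 6):
    total_iops = volumes * 4_000
    print(f"{volumes} volumes x 4,000 IOPS = {total_iops:,} IOPS -> "
          f"{iops_to_mbps(total_iops):,.0f} Mbps")
```

Going from four to six volumes asks for about 50% more bandwidth. If the EBS-Optimized link is already saturated at 16,000 IOPS, the extra volumes cannot raise the measured IOPS, which is the reasoning behind option 2.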