AWS Certified SysOps Administrator - Associate Questions and Answers (Dumps and Practice Questions)

Question : When preparing for a compliance assessment of your system built inside of AWS, what are three best practices for preparing for an audit?

Choose 3 answers

A. Gather evidence of your IT operational controls

B. Request and obtain applicable third-party audited AWS compliance reports and certifications

C. Request and obtain a compliance and security tour of an AWS data center for a preassessment security review.

D. Request and obtain approval from AWS to perform relevant network scans and in-depth penetration tests of your system's Instances and endpoints.

E. Schedule meetings with AWS's third-party auditors to provide evidence of AWS compliance that maps to your control objectives.

1. B,D,E

2. A,B,D

4. C,D,E

5. A,B,C

Explanation: Data center tours. Are data center tours by customers allowed by the cloud provider?

No. Because our data centers host multiple customers, AWS does not allow data center tours by customers, as this exposes a wide range of customers to physical access by a third party. To meet this customer need, an independent and competent auditor validates the presence and operation of controls as part of our SOC 1 Type II report. This broadly accepted third-party validation provides customers with an independent perspective on the effectiveness of the controls in place. AWS customers that have signed a non-disclosure agreement with AWS may request a copy of the SOC 1 Type II report. Independent reviews of data center physical security are also part of the ISO 27001 audit, the PCI assessment, the ITAR audit, and the FedRAMP testing programs.

Hence, Option C is clearly out.

Customers can request permission to conduct scans of their cloud infrastructure as long as they are limited to the customer's instances and do not violate the AWS Acceptable Use Policy. Advance approval for these types of scans can be initiated by submitting a request via the AWS Vulnerability Penetration Testing Request Form. (Hence, option D is in.)

A - Even when the systems are built in AWS, the operations team still needs solid IT operational controls to make sure everything runs well, so evidence of those controls should be gathered.

Question : You have started a new job and are reviewing your company's infrastructure on AWS. You notice one web application where they have an Elastic Load Balancer (ELB) in front of web instances in an Auto Scaling Group. When you check the metrics for the ELB in CloudWatch you see four healthy instances in Availability Zone (AZ) A and zero in AZ B. There are zero unhealthy instances. What do you need to fix to balance the instances across AZs?

1. Set the ELB to only be attached to another AZ

2. Make sure Auto Scaling is configured to launch in both AZs

4. Make sure the maximum size of the Auto Scaling Group is greater than 4

Explanation: Configure Auto Scaling to launch instances in both AZs. A launch configuration is a template that an Auto Scaling group uses to launch EC2 instances. When you create a launch configuration, you specify information for the instances such as the ID of the Amazon Machine Image (AMI), the instance type, a key pair, one or more security groups, and a block device mapping. If you've launched an EC2 instance before, you specified the same information in order to launch the instance.

When you create an Auto Scaling group, you must specify a launch configuration. You can use the same launch configuration with multiple Auto Scaling groups. However, you can only specify one launch configuration for an Auto Scaling group at a time, and you can't modify a launch configuration after you've created it. Therefore, if you want to change the launch configuration for your Auto Scaling group, you must create a new launch configuration and then update your Auto Scaling group with it. When you change the launch configuration for your Auto Scaling group, any new instances are launched using the new configuration parameters, but existing instances are not affected.
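As a rough illustration of why the list of Availability Zones on the group matters, the sketch below models an even spread of instances across whatever zones the Auto Scaling group is allowed to use. The function name `spread_across_zones` and the zone labels are hypothetical, and this is a simplified model, not the actual Auto Scaling placement algorithm.

```python
def spread_across_zones(desired_capacity, zones):
    """Evenly spread instances over the zones the group is allowed to use.

    Simplified, hypothetical model: leftover instances go to the earliest zones.
    """
    if not zones:
        raise ValueError("at least one zone is required")
    base, extra = divmod(desired_capacity, len(zones))
    return {zone: base + (1 if i < extra else 0) for i, zone in enumerate(zones)}


# Group configured with a single zone: every instance lands in AZ A.
single_zone = spread_across_zones(4, ["A"])

# Group configured with both zones: instances are split between them.
two_zones = spread_across_zones(4, ["A", "B"])
```

Under this model, a group limited to one zone reproduces the four-and-zero split described in the question, while adding the second zone yields two instances in each.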

Question : You have been asked to leverage Amazon VPC, EC2, and SQS to implement an application that submits and receives millions of messages per second to a message queue. You want to ensure your application has sufficient bandwidth between your EC2 instances and SQS. Which option will provide the most scalable solution for communicating between the application and SQS?

1. Ensure the application instances are properly configured with an Elastic Load Balancer

2. Ensure the application instances are launched in private subnets with the EBS-optimized option enabled

4. Launch application instances in private subnets with an Auto Scaling group and Auto Scaling triggers configured to watch the SQS queue size

Explanation: An Amazon EBS-optimized instance uses an optimized configuration stack and provides additional, dedicated capacity for Amazon EBS I/O. This optimization provides the best performance for your EBS volumes by minimizing contention between Amazon EBS I/O and other traffic from your instance.

EBS-optimized instances deliver dedicated throughput to Amazon EBS, with options between 500 Mbps and 4,000 Mbps, depending on the instance type you use. When attached to an EBS-optimized instance, General Purpose SSD volumes are designed to deliver within 10 percent of their baseline and burst performance 99.9 percent of the time in a given year, and Provisioned IOPS SSD volumes are designed to deliver within 10 percent of their provisioned performance 99.9 percent of the time in a given year. For more information, see Amazon EBS Volume Types.

When you enable EBS optimization for an instance that is not EBS-optimized by default, you pay an additional low, hourly fee for the dedicated capacity. For pricing information, see EBS-optimized Instances on the Amazon EC2 Pricing page.
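The figures quoted above can be put into more familiar units with a little arithmetic. The sketch below converts the stated throughput range from megabits to megabytes per second and works out how many hours per year fall outside a 99.9 percent target. The function names are illustrative, and a 365-day year is assumed.

```python
def mbps_to_megabytes_per_second(mbps):
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbps / 8


def hours_outside_target(percent_of_time, hours_per_year=365 * 24):
    """Hours in a year not covered by a 'percent of the time' target."""
    return hours_per_year * (100 - percent_of_time) / 100


low = mbps_to_megabytes_per_second(500)     # 62.5
high = mbps_to_megabytes_per_second(4000)   # 500.0
uncovered = hours_outside_target(99.9)      # a little under 9 hours
```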

The question asks for the most "scalable solution for communicating" with SQS, that is, parallel processing of SQS messages.

Auto-Scaling EC2 Instances

With auto-scaling, you're trying to simultaneously achieve two seemingly opposing goals:

To have plenty of EC2 instances running so that your application is responsive and able to meet defined service level agreements

To limit the number of EC2 instances running so that computing resources aren't being wasted

The key is to establish a relationship between the load on the application and the optimum number of EC2 instances required to handle the load. However, there isn't a single way to define the load on an application or the right proxy for the load. There are several proxies for load, including:

Number of simultaneous user connections

Number of incoming requests

CPU, memory, or bandwidth utilization

Average response time

Some of the proxies are easier to measure and track than others.

An alternative is Amazon SQS, which provides a simple way to represent load and implement auto-scaling for EC2 instances. This is discussed in the next section.
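A minimal sketch of using queue depth as the load proxy, assuming each instance can work through a fixed backlog of messages. The function `desired_instances`, its parameters, and the default bounds are illustrative assumptions rather than AWS defaults.

```python
import math


def desired_instances(queue_depth, backlog_per_instance, min_size=1, max_size=10):
    """Pick an instance count from the number of messages waiting in the queue."""
    if backlog_per_instance <= 0:
        raise ValueError("backlog_per_instance must be positive")
    needed = math.ceil(queue_depth / backlog_per_instance)
    # Clamp to the group's bounds so it neither empties nor grows without limit.
    return max(min_size, min(max_size, needed))
```

With a backlog of 100 messages per instance, a queue of 450 messages maps to five instances, an empty queue falls back to the minimum size, and a very deep queue is capped at the maximum size.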

Related Questions

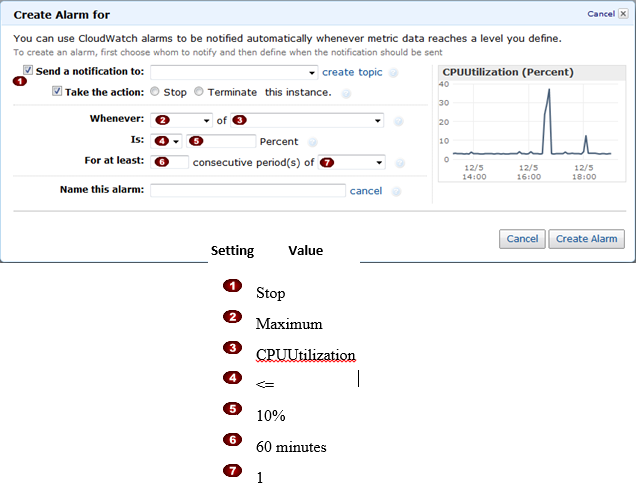

Question : In the Amazon Elastic Compute Cloud (Amazon EC2) console, you have set up an alarm with the given settings. What does it imply?

1. Create an alarm that terminates an instance used for software development or testing when it has been idle for at least an hour, three consecutive times.

2. Create an alarm that terminates an instance used for software development or testing when it has been idle for at least an hour.

Question : Using Amazon CloudWatch alarm actions, you __________ create alarms that automatically stop or terminate your Amazon Elastic Compute Cloud (Amazon EC2) instances when you no longer need them to be running.

1. cannot

2. can

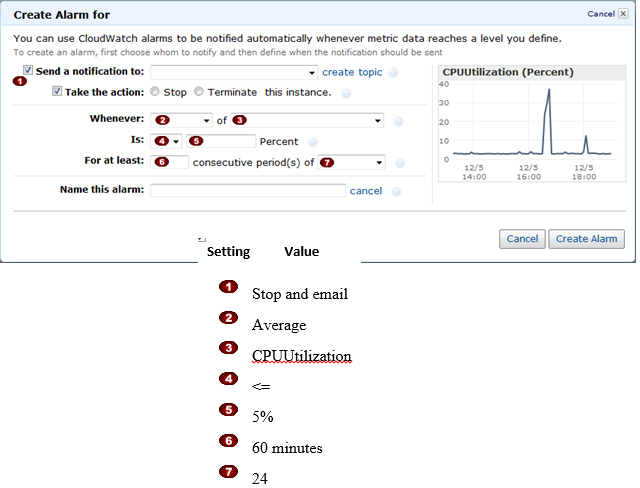

Question : In the Amazon Elastic Compute Cloud (Amazon EC2) console, you have set up an alarm with the given settings. What does it imply?

1. Create an alarm that terminates an instance and sends an email when the instance has been idle for 24 hours.

2. Create an alarm that stops an instance and sends an email when the instance has been idle for 1 hour.

Question : What does the command below represent?

% aws cloudwatch put-metric-alarm --alarm-name my-Alarm --alarm-description "" --namespace "AWS/EC2" \
    --dimensions Name=InstanceId,Value=i-abc123 --statistic Average --metric-name CPUUtilization --comparison-operator LessThanThreshold \
    --threshold 10 --period 86400 --evaluation-periods 4 --alarm-actions arn:aws:automate:us-east-1:ec2:stop

1. how to stop an instance if the average CPUUtilization is greater than 10 percent over a 24 hour period.

2. how to stop an instance if the average CPUUtilization is less than 10 percent over a 24 hour period.

4. how to terminate an instance if the average CPUUtilization is greater than 10 percent over a 24 hour period.
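One way to read the numeric flags in the command above is to work out how long the metric must stay past the threshold before the action fires. The helper `describe_alarm` below is a hypothetical illustration. It assumes the alarm triggers only after every evaluation period breaches the threshold, and that the action name is the last segment of the action ARN.

```python
def describe_alarm(period_seconds, evaluation_periods, action_arn):
    """Summarise the evaluation window and action of a CloudWatch-style alarm."""
    window_hours = period_seconds * evaluation_periods / 3600
    action = action_arn.rsplit(":", 1)[-1]  # assumed: last ARN segment names the action
    return {
        "period_hours": period_seconds / 3600,
        "window_hours": window_hours,
        "action": action,
    }


summary = describe_alarm(86400, 4, "arn:aws:automate:us-east-1:ec2:stop")
# summary["period_hours"] is 24.0 and summary["window_hours"] is 96.0
```

Under these assumptions, each data point covers 24 hours, and four of them in a row span 96 hours before the `stop` action runs.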

Question : What does the command below represent?

% aws cloudwatch put-metric-alarm --alarm-name my-Alarm --alarm-description "" --namespace "AWS/EC2" --dimensions Name=InstanceId,Value=i-abc123 \
    --statistic Average --metric-name CPUUtilization --comparison-operator LessThanThreshold --threshold 10 --period 86400 --evaluation-periods 4 \
    --alarm-actions arn:aws:automate:us-east-1:ec2:terminate

1. how to stop an instance if the average CPUUtilization is greater than 10 percent over a 24 hour period.

2. how to stop an instance if the average CPUUtilization is less than 10 percent over a 24 hour period.

4. how to terminate an instance if the average CPUUtilization is greater than 10 percent over a 24 hour period.

Question : A user is trying to create a PIOPS EBS volume with GB size and IOPS. Will AWS create the volume?

1. Yes, since the ratio between EBS and IOPS is less than 30

2. No, since the PIOPS and EBS size ratio is less than 30

4. Yes, since PIOPS is higher than 100
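The options above hinge on the ratio of provisioned IOPS to volume size. The sketch below shows that check, taking the 30:1 figure from the answer options as given; the real limit depends on the volume type and has changed over time. The function name and the sample values are hypothetical, and they are not the values missing from the question.

```python
def iops_ratio_allowed(iops, size_gb, max_ratio=30):
    """Return True when the IOPS-to-size ratio is within max_ratio."""
    if size_gb <= 0:
        raise ValueError("size_gb must be positive")
    return iops / size_gb <= max_ratio


# Hypothetical values, not the ones from the question:
within_limit = iops_ratio_allowed(3000, 100)   # ratio 30 -> True
over_limit = iops_ratio_allowed(4000, 100)     # ratio 40 -> False
```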