Cloudera Hadoop Developer Certification Questions and Answer (Dumps and Practice Questions)

Please find the answer to this Question at following URL in detail with explaination.

www.hadoopexam.com/P5_A55.jpg

Dont Remember Answers, please understand MapReduce in Depth. It is needed to clear live Question Exam Pattern

Question You have given following input file data..

119:12,Hadoop,Exam,ccd410

312:44,Pappu,Pass,cca410

441:53,"HBasa","Pass","ccb410"

5611:01',"No Event",

7881:12,Hadoop,Exam,ccd410

3451:12,HadoopExam

Special characters . * + ? ^ $ { [ ( | ) \ have special meaning and must be escaped with \ to be used without the special meaning : \. \* \+ \? \^ \$ \{ \[ \( \| \) \\Consider the meaning of regular expression as well

. any char, exactly 1 time

* any char, 0-8 times

+ any char, 1-8 times

? any char, 0-1 time

^ start of string (or line if multiline mode)

$ end of string (or line if multiline mode)

| equivalent to OR (there is no AND, check the reverse of what you need for AND)

After running the following MapReduce job what will be the output printed at console

1. 2 , 3

2. 2 , 4

3. Access Mostly Uused Products by 50000+ Subscribers

4. 5 , 1

5. 0 , 6

Correct Ans : 5

Exp : Meaning of regex as . any char, exactly 1 time (Please remember the regex)

* any char, 0-8 times

+ any char, 1-8 times

? any char, 0-1 time

^ start of string (or line if multiline mode)

$ end of string (or line if multiline mode)

| equivalent to OR (there is no AND, check the reverse of what you need for AND). First Record passed the regular expression

Second record also pass the expression

third record does not pass the expression, because hours part is in single digit as you can sse in the expression

first two d's are there.

It is expected that each record should have at least all five character as digit. Which no record suffice.

Hence in total matching records are 0 and non-matching records are 6

Please learn java regular expression it is mandatrory. Consider using Hadoop Professional Training Provided by HadoopExam.com if you face the problem.

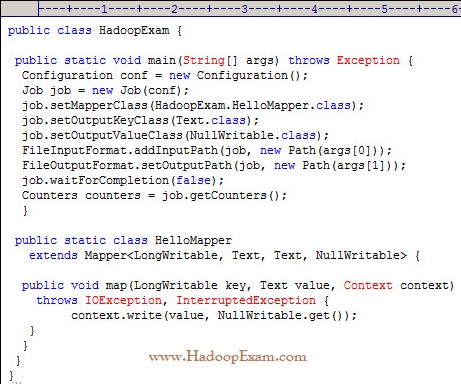

Question

What happens when you run the below job twice , having each input directory as one of the data file called data.csv.

with following command. Assuming there were no output directory exist

hadoop job HadoopExam.jar HadoopExam inputdata_1 output

hadoop job HadoopExam.jar HadoopExam inputdata_2 output

1. Both the job will write the output to output directoy and output will be appended

2. Both the job will fail, saying output directory does not exist.

3. Access Mostly Uused Products by 50000+ Subscribers

4. Both the job will successfully completes and second job will overwrite the output of first.

Ans : 3

Exp : First job will successfully run and second one will fail, because, if (output directory already exist then it will not run

and throws exception, complaining output directory already exist.

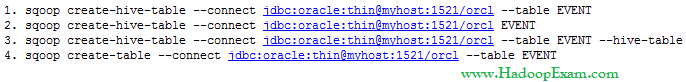

Question You have a an EVENT table with following schema in the Oracle database.

PAGEID NUMBER

USER VARCHAR2

EVENTTIME DATE

PLACE VARCHAR2

Which of the following command creates the correct HIVE table named EVENT

1.

2.

3. Access Mostly Uused Products by 50000+ Subscribers

4.

Ans : 2

Exp : The above is correct because it correctly uses the Sqoop operation to create a Hive table that matches the database table.

Option 3rd is not correct because --hive-table option for Sqoop requires a parameter that names the target table in the database.

Question : Which of the following command will delete the Hive table nameed EVENTINFO

1. hive -e 'DROP TABLE EVENTINFO'

2. hive 'DROP TABLE EVENTINFO'

3. Access Mostly Uused Products by 50000+ Subscribers

4. hive -e 'TRASH TABLE EVENTINFO'

1.

2.

3. Access Mostly Uused Products by 50000+ Subscribers

4.

Ans :1

Exp : Sqoop does not offer a way to delete a table from Hive, although it will overwrite the table definition during

import if the table already exists and --hive-overwrite is specified. The correct HiveQL statement to drop a table

is "DROP TABLE tablename". In Hive, table names are all case insensitives

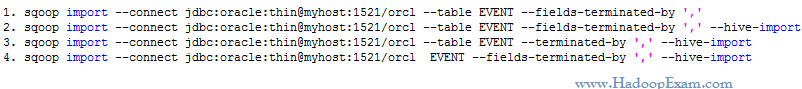

Question There is no tables in Hive, which command will

import the entire contents of the EVENT table from

the database into a Hive table called EVENT

that uses commas (,) to separate the fields in the data files?

1.

2.

3. Access Mostly Uused Products by 50000+ Subscribers

4.

Ans :2

Exp : --fields-terminated-by option controls the character used to separate the fields in the Hive table's data files.

Question :

You have following data in a hive table

ID:INT,COLOR:TEXT,WIDTH:INT

1,green,190

2,blue,300

3,yellow,220

4,blue,199

5,green,199

6,yellow,299

7,green,799

Select the correct Mapper and Reducer which

can produce the output similar to following queries

Select id,color,width from table where width >=200;

1. 1

2. 2

3. Access Mostly Uused Products by 50000+ Subscribers

4. 4

Correct Answer : Get Lastest Questions and Answer :

Explanation: Option 2 is correct,

Mapper : It iterate over each line in the tables and produce the output like as below from the Mapper which filter out

the records which has width is greater then 200.

Key 1 Value 2,blue,300

Key 1 Value 3,yellow,220

Key 1 Value 6,yellow,299

Key 1 Value 7,green,799

In the reducer part we ignore the key part and emit the value only.

Question :

You have following data in a hive table

ID:INT,COLOR:TEXT,WIDTH:INT

1,green,190

2,blue,300

3,yellow,220

4,blue,199

5,green,199

6,yellow,299

7,green,799

After running thje following MapReduce program, what output it will produces as first line.

1. 1,green,190

2. 4,blue,199

3. Access Mostly Uused Products by 50000+ Subscribers

4. it will through java.lang.ArrayIndexOutOfBoundsException

Correct Answer : Get Lastest Questions and Answer :

In the Mapper code we are splitting the value part with ";" , and it has "," instead of ";" , hence it does not

able to split the string and produce single element array. And when you it tries to access 2nd element it will through

ArrayIndexOutOfBoundsException

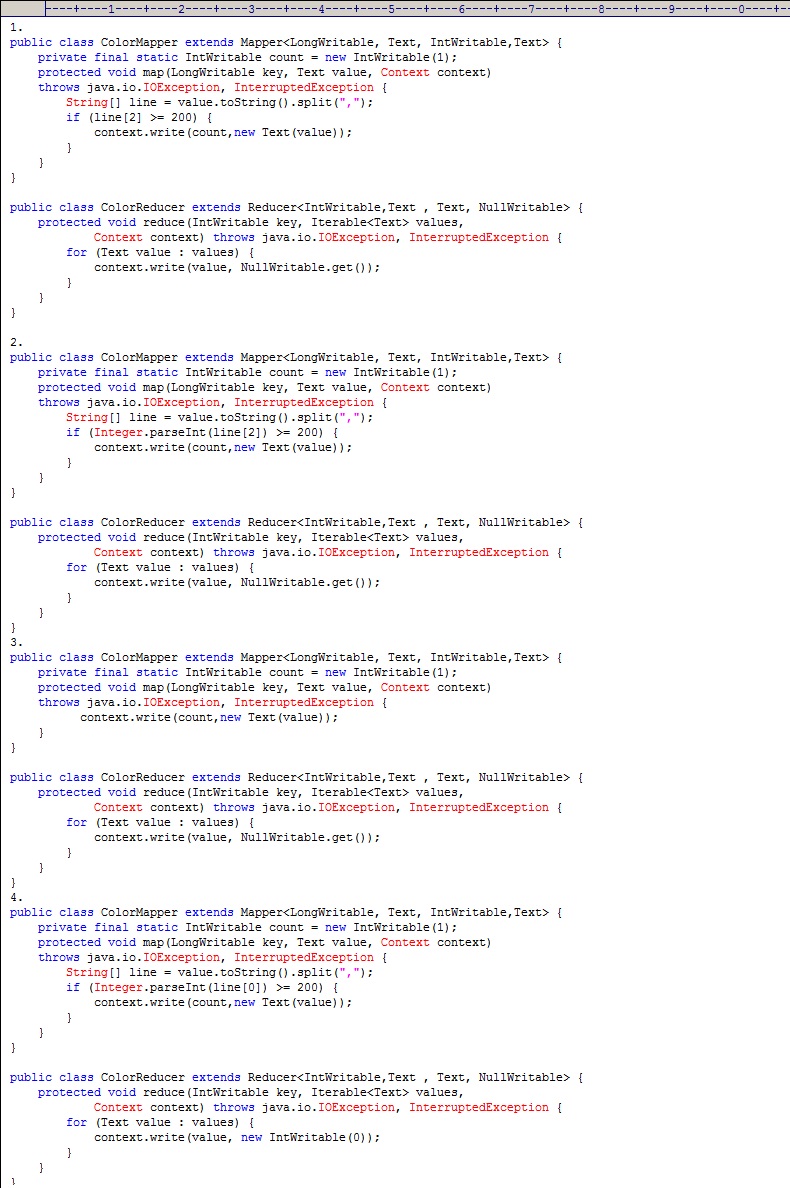

Question :

You have following data in a hive table

ID:INT,COLOR:TEXT,WIDTH:INT

1,green,190

2,blue,300

3,yellow,220

4,blue,199

5,green,199

6,yellow,299

7,green,799

Select the correct MapReduce program

which can produce the output similar

to below Hive Query.

Select color from table where width >=220;

1. 1

2. 2

3. Access Mostly Uused Products by 50000+ Subscribers

4. 4

Correct Answer : Get Lastest Questions and Answer :

In Mapper part it iterate over each value and produces the output like

Key and Value

blue 1

yellow 1

yellow 1

green 1

And reducer is going to print only key part from the emitted vlaue of the Mapper

Related Questions

Question :

What are supported programming language for Hadoop

1. Java and Scripting Language

2. Any Programming Language

3. Only Java

4. C , Cobol and Java

Question :

How does Hadoop process large volumes of data?

1. Hadoop uses a lot of machines in parallel. This optimizes data processing.

2. Hadoop was specifically designed to process large amount of data by taking advantage of MPP hardware

3. Hadoop ships the code to the data instead of sending the data to the code

4. Hadoop uses sophisticated cacheing techniques on namenode to speed processing of data

Question :

What are sequence files and why are they important?

1. Sequence files are binary format files that are compressed and are splitable.

They are often used in high-performance map-reduce jobs

2. Sequence files are a type of the file in the Hadoop framework that allow data to be sorted

3. Sequence files are intermediate files that are created by Hadoop after the map step

4. All of above

Question :

What are map files and why are they important?

1. Map files are stored on the namenode and capture the metadata for all blocks on a particular rack. This is how Hadoop is "rack aware"

2. Map files are the files that show how the data is distributed in the Hadoop cluster.

3. Map files are generated by Map-Reduce after the reduce step. They show the task distribution during job execution

4. Map files are sorted sequence files that also have an index. The index allows fast data look up.

Question :

Which of the following utilities allows you to create and run MapReduce jobs with any executable or script

as the mapper and or the reducer?

1. Oozie

2. Sqoop

3. Flume

4. Hadoop Streaming

Question :

You need a distributed, scalable, data Store that allows you random, realtime read-write access to hundreds of terabytes of data.

Which of the following would you use?

1. Hue

2. Pig

3. Hive

4. Oozie

5. HBase