Cloudera Hadoop Administrator Certification Certification Questions and Answer (Dumps and Practice Questions)

Question : You want to understand more about how users browse your public website. For example, you want to know

which pages they visit prior to placing an order. You have a server farm of 200 web servers hosting your

website. Which is the most efficient process to gather these web server across logs into your Hadoop cluster

analysis?

A. Sample the web server logs web servers and copy them into HDFS using curl

B. Ingest the server web logs into HDFS using Flume

C. Channel these clickstreams into Hadoop using Hadoop Streaming

D. Import all user clicks from your OLTP databases into Hadoop using Sqoop

E. Write a MapReeeduce job with the web servers for mappers and the Hadoop cluster nodes for reducers

1. A

2. B

3. Access Mostly Uused Products by 50000+ Subscribers

4. D

5. E

Correct Answer : Get Lastest Questions and Answer :

Explanation:

Watch the training Module 21 from http://hadoopexam.com/index.html/#hadoop-training

Question : You need to analyze ,, images stored in JPEG format, each of which is approximately KB.

Because you Hadoop cluster isn't optimized for storing and processing many small files, you decide to do the

following actions:

1. Group the individual images into a set of larger files

2. Use the set of larger files as input for a MapReduce job that processes them directly with python using

Hadoop streaming.

Which data serialization system gives the flexibility to do this?

A. CSV

B. XML

C. HTML

D. Avro

E. SequenceFiles

F. JSON

1. AB

2. AC

3. Access Mostly Uused Products by 50000+ Subscribers

4. CD

5. EF

Correct Answer : Get Lastest Questions and Answer :

Explanation: Avro provides: Rich data structures.

A compact, fast, binary data format.

A container file, to store persistent data.

Remote procedure call (RPC).

Simple integration with dynamic languages. Code generation is not required to read or write data files nor to use or implement RPC protocols. Code generation as an optional optimization, only worth implementing for statically typed languages. Schemas : Avro relies on schemas. When Avro data is read, the schema used when writing it is always present. This permits each datum to be written with no per-value overheads, making serialization both fast and small. This also facilitates use with dynamic, scripting languages, since data, together with its schema, is fully self-describing. When Avro data is stored in a file, its schema is stored with it, so that files may be processed later by any program. If the program reading the data expects a different schema this can be easily resolved, since both schemas are present. When Avro is used in RPC, the client and server exchange schemas in the connection handshake. (This can be optimized so that, for most calls, no schemas are actually transmitted.) Since both client and server both have the other's full schema, correspondence between same named fields, missing fields, extra fields, etc. can all be easily resolved. Avro schemas are defined with JSON . This facilitates implementation in languages that already have JSON libraries. Comparison with other systems : Avro provides functionality similar to systems such as Thrift, Protocol Buffers, etc. Avro differs from these systems in the following fundamental aspects. Dynamic typing: Avro does not require that code be generated. Data is always accompanied by a schema that permits full processing of that data without code generation, static datatypes, etc. This facilitates construction of generic data-processing systems and languages. Untagged data: Since the schema is present when data is read, considerably less type information need be encoded with data, resulting in smaller serialization size. No manually-assigned field IDs: When a schema changes, both the old and new schema are always present when processing data, so differences may be resolved symbolically, using field names. Apache Avro, Avro, Apache, and the Avro and Apache logos are trademarks of The Apache Software Foundation. SequenceFile are large and do contain : enough data repetition to make compression desirable, you still have the option to use RECORD level compression. A SequenceFile containing images or other

binary payloads would be a good example of when RECORD level compression is

a good idea.

Question : Identify two features/issues that YARN is designated to address:

A. Standardize on a single MapReduce API

B. Single point of failure in the NameNode

C. Reduce complexity of the MapReduce APIs

D. Resource pressure on the JobTracker

E. Ability to run framework other than MapReduce, such as MPI

F. HDFS latency

1. AB

2. AC

3. Access Mostly Uused Products by 50000+ Subscribers

4. CD

5. EF

Correct Answer : Get Lastest Questions and Answer :

Explanation: Hadoop MapReduce is not without its flaws. The team at Yahoo ran into a number of scalability limitations that were difficult to overcome given Hadoops existing architecture and design. In large-scale deployments such as Yahoo!’s Hammer cluster-a single, 4,000-plus node Hadoop cluster that powers various systems—the team found that the resource requirements on a single jobtracker were just too great. Further, operational issues such as dealing with upgrades and the single point of failure of the jobtracker were painful. YARN (or Yet Another Resource Negotiator) was created to address these issues. Rather than have a single daemon that tracks and assigns resources such as CPU and memory and handles MapReduce-specific job tracking, these functions are separated into two parts. The resource management aspect of the jobtracker is run as a new daemon called the resource manager,; a separate daemon responsible for creating and allocating resources to multiple applications. Each application is an individual Map-Reduce job, but rather than have a single jobtracker, each job now has its own jobtracker-

equivalent called an application master that runs on one of the workers of the cluster. This is very different from having a centralized jobtracker in that the application master of one job is now completely isolated from that of any other. This means that if some catastrophic failure were to occur within the jobtracker, other jobs are unaffected. Further, because the jobtracker is now dedicated to a specific job, multiple jobtrackers can be running on the cluster at once. Taken one step further, each jobtracker can be a different version of the software, which enables simple rolling upgrades and multiversion support. When an application completes, its application master, such as the jobtracker, and other resources are returned to the cluster. As a result, there’s no central jobtracker daemon in YARN. Worker nodes in YARN also run a new daemon called the node manager in place of the traditional tasktracker. While the tasktracker expressly handled MapReduce-specific functionality such as launching and managing tasks, the node manager is more generic. Instead, the node manager launches any type of process, dictated by the application, in an application container. For instance, in the case of a MapReduce application, the node manager manages both the application master (the jobtracker) as well as individual map and reduce tasks. With the ability to run arbitrary applications, each with its own application master, it’s

even possible to write non-MapReduce applications that run on YARN. Not entirely an accident, YARN provides a compute-model-agnostic resource management framework

for any type of distributed computing framework. Members of the Hadoop community have already started to look at alternative processing systems that can be built

on top of YARN for specific problem domains such as graph processing and more traditional HPC systems such as MPI. The flexibility of YARN is enticing, but it’s still a new system. At the time of this writing, YARN is still considered alpha-level software and is not intended for production use. Initially introduced in the Apache Hadoop 2.0 branch, YARN hasn’t yet been battletested in large clusters. Unfortunately, while the Apache Hadoop 2.0 lineage includes highly desirable HDFS features such as high availability, the old-style jobtracker and tasktracker daemons (now referred to as MapReduce version one, or MRv1) have been

removed in favor of YARN. This creates a potential conflict for Apache Hadoop users that want these features with the tried and true MRv1 daemons. CDH4, however, in- cludes the HDFS features as well as both MRv1 and YARN

Watch the training from http://hadoopexam.com/index.html/#hadoop-training

Related Questions

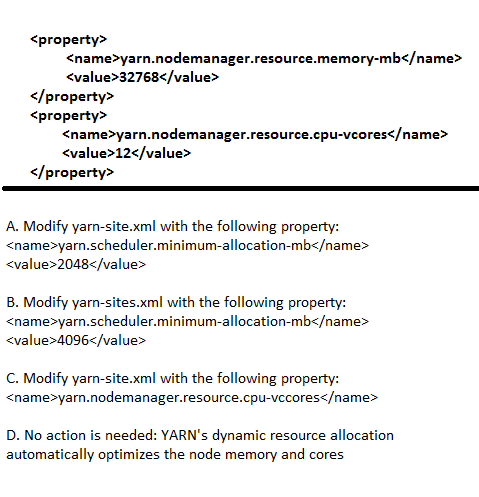

Question : Each node in your Hadoop cluster, running YARN, has GB memory and cores.

Your yarn-site.xml has the following configuration:

You want YARN to launch no more than 16 containers per node. What should you do?

1. A

2. B

3. Access Mostly Uused Products by 50000+ Subscribers

4. D

Question : You want to node to only swap Hadoop daemon data from RAM to disk when absolutely necessary. What

should you do?

1. Delete the /dev/vmswap file on the node

2. Delete the /etc/swap file on the node

3. Access Mostly Uused Products by 50000+ Subscribers

4. Set vm.swappiness file on the node

5. Delete the /swapfile file on the node

Question : You are configuring your cluster to run HDFS and MapReducer v (MRv) on YARN.

Which two daemons needs to be installed on your cluster's master nodes?

A. HMaster

B. ResourceManager

C. TaskManager

D. JobTracker

E. NameNode

F. DataNode

1. AB

2. AC

3. Access Mostly Uused Products by 50000+ Subscribers

4. DE

5. BE

Question : You observed that the number of spilled records from Map tasks far exceeds the number of map output

records. Your child heap size is 1GB and your io.sort.mb value is set to 1000MB. How would you tune your io.

sort.mb value to achieve maximum memory to disk I/O ratio?

1. For a 1GB child heap size an io.sort.mb of 128 MB will always maximize memory to disk I/O

2. Increase the io.sort.mb to 1GB

3. Access Mostly Uused Products by 50000+ Subscribers

4. Tune the io.sort.mb value until you observe that the number of spilled records equals (or is as close to

equals) the number of map output records.

Question : You are running a Hadoop cluster with a NameNode on host mynamenode, a secondary NameNode on host

mysecondarynamenode and several DataNodes.

Which best describes how you determine when the last checkpoint happened?

1. Execute hdfs namenode report on the command line and look at the Last Checkpoint information

2. Execute hdfs dfsadmin saveNamespace on the command line which returns to you the last checkpoint

value in fstime file

3. Access Mostly Uused Products by 50000+ Subscribers

Checkpoint information

4. Connect to the web UI of the NameNode (http://mynamenode:50070) and look at the Last Checkpoint

information

Question : What does CDH packaging do on install to facilitate Kerberos security setup?

1. Automatically configures permissions for log files at &MAPRED_LOG_DIR/userlogs

2. Creates users for hdfs and mapreduce to facilitate role assignment

3. Access Mostly Uused Products by 50000+ Subscribers

4. Creates a set of pre-configured Kerberos keytab files and their permissions

5. Creates and configures your kdc with default cluster values