Cloudera Hadoop Administrator Certification Certification Questions and Answer (Dumps and Practice Questions)

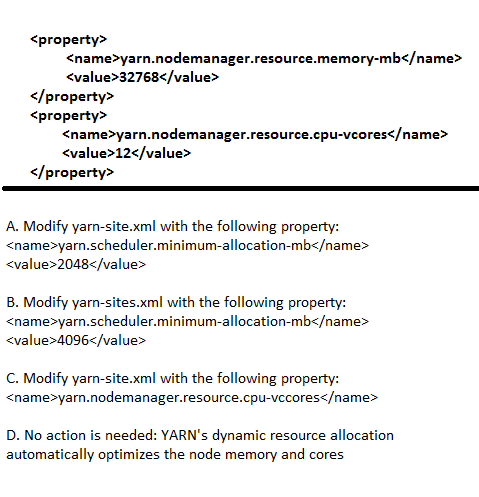

Question : Each node in your Hadoop cluster, running YARN, has GB memory and cores.

Your yarn-site.xml has the following configuration:

You want YARN to launch no more than 16 containers per node. What should you do?

1. A

2. B

3. Access Mostly Uused Products by 50000+ Subscribers

4. D

Correct Answer : Get Lastest Questions and Answer :

Explanation: yarn.scheduler.minimum-allocation-mb : Minimum limit of memory to allocate to each container request at the Resource Manager. In MBs

yarn.scheduler.maximum-allocation-mb : Maximum limit of memory to allocate to each container request at the Resource Manager. In MBs

(32768 MB total RAM) / (16 # of Containers) = 2048 MB minimum per Container

Watch the training from http://hadoopexam.com/index.html/#hadoop-training

Question : You want to node to only swap Hadoop daemon data from RAM to disk when absolutely necessary. What

should you do?

1. Delete the /dev/vmswap file on the node

2. Delete the /etc/swap file on the node

3. Access Mostly Uused Products by 50000+ Subscribers

4. Set vm.swappiness file on the node

5. Delete the /swapfile file on the node

Correct Answer : Get Lastest Questions and Answer :

Explanation: The kernel parameter vm.swappiness controls the kernel’s tendency to swap application

data from memory to disk, in contrast to discarding filesystem cache. The valid range

for vm.swappiness is 0 to 100 where higher values indicate that the kernel should be

more aggressive in swapping application data to disk, and lower values defer this behavior,

instead forcing filesystem buffers to be discarded. Swapping Hadoop daemon

data to disk can cause operations to timeout and potentially fail if the disk is performing

other I/O operations. This is especially dangerous for HBase as Region Servers must

maintain communication with ZooKeeper lest they be marked as failed. To avoid this,

vm.swappiness should be set to 0 (zero) to instruct the kernel to never swap application

data, if there is an option. Most Linux distributions ship with vm.swappiness set to 60

or even as high as 80.

Watch the training from http://hadoopexam.com/index.html/#hadoop-training

Question : You are configuring your cluster to run HDFS and MapReducer v (MRv) on YARN.

Which two daemons needs to be installed on your cluster's master nodes?

A. HMaster

B. ResourceManager

C. TaskManager

D. JobTracker

E. NameNode

F. DataNode

1. AB

2. AC

3. Access Mostly Uused Products by 50000+ Subscribers

4. DE

5. BE

Correct Answer : Get Lastest Questions and Answer :

Explanation: Each slave node in a cluster configured to run MapReduce v2 (MRv2) on YARN typically runs a DataNode daemon (for HDFS functions) and NodeManager daemon (for YARN functions).

The NodeManager handles communication with the ResourceManager, oversees application container lifecycles, monitors CPU and memory resource use of the containers, tracks the node health,

and handles log management. It also makes available a number of auxiliary services to YARN applications

HMaster: HMaster is the "master server" for HBase. An HBase cluster has one active master. If many masters are started, all compete. Whichever wins goes on to run the cluster. All others park themselves in their constructor until master or cluster shutdown or until the active master loses its lease in zookeeper. Thereafter, all running master jostle to take over master role.

ResourceManager: The ResourceManager (RM) is responsible for tracking the resources in a cluster and scheduling applications (for example, MapReduce jobs). Before CDH 5, the RM was a single point of failure in a YARN cluster. The High Availability feature adds redundancy in the form of an Active/Standby ResourceManager pair to remove this single point of failure. Furthermore, upon failover from the Standby ResourceManager to the Active, the applications can resume from their last check-pointed state; for example, completed map tasks in a MapReduce job are not re-run on a subsequent attempt. This allows events such the following to be handled without any significant performance effect on running applications.:

Unplanned events such as machine crashes

Planned maintenance events such as software or hardware upgrades on the machine running the ResourceManager.

ResourceManager is the central authority that manages resources and schedules applications running atop of YARN

NameNode: The NameNode is the centerpiece of an HDFS file system. It keeps the directory tree of all files in the file system, and tracks where across the cluster the file data is kept. It does not store the data of these files itself.

Watch the training Module 21 from http://hadoopexam.com/index.html/#hadoop-training

Related Questions

Question : As a client of HadoopExam, you are able to access the Hadoop cluster of HadoopExam Inc, Once a you are validated

with the identity and granted access to a file in HDFS, what is the remainder of the read path back to the client?

1. The NameNode gives the client the block IDs and a list of DataNodes on which those blocks are found, and the application reads the blocks directly from the DataNodes.

2. The NameNode maps the read request against the block locations in its stored metadata, and reads those blocks from the DataNodes. The client application then reads the blocks from the NameNode.

3. Access Mostly Uused Products by 50000+ Subscribers

4. DataNode closest to the client according to Hadoop's rack topology. The client application then reads the blocks from that single DataNode.

Question : In your QuickTechie Inc Hadoop cluster you have slave datanodes and two master nodes. You set the blcok size MB, with the

replication factor set to 3. How will the Hadoop framework distribute block writes from a MapReduce Reducer into HDFS when that MapReduce Reducer

outputs a 295MB file?

1. Reducers don't write blocks into HDFS.

2. The nine blocks will be written to three nodes, such that each of the three gets one copy of each block.

3. Access Mostly Uused Products by 50000+ Subscribers

4. The nine blocks will be written randomly to the nodes; some may receive multiple blocks, some may receive none.

5. The node on which the Reducer is running will receive one copy of each block. The other replicas will be placed on other nodes in the cluster.

Question : You have a website www.QuickTechie.com, where you have one month user profile updates log. Now for the classification analysis

you want to save all the data in a single file called QT31012015.log which is approximately in 30GB in size. Now using the MapReduce

ETL job you are able to push this full file in a directory on HDFS called /log/QT/QT31012015.log. After completing file write which

of the following metadata change will occur.

1. The metadata in RAM on the NameNode is updated

2. The change is written to the Secondary NameNode

3. The change is written to the fsimage file

4. The change is written to the edits file

5. The NameNode triggers a block report to update block locations in the edits file

6. The metadata in RAM on the NameNode is flushed to disk

1. 1,3,4

2. 1,4,6

3. Access Mostly Uused Products by 50000+ Subscribers

4. 5,6

4. 4,6

Ans : 3

Exp : The namenode stores its filesystem metadata on local filesystem disks in a few different files, the two most important of which are fsimage and edits. Just like a database would, fsimage contains a complete snapshot of the filesystem metadata whereas edits contains only incremental modifications made to the metadata. A common practice for high throughput data stores, use of a write ahead log (WAL) such as the edits file reduces I/O operations to sequential, append-only operations (in the context of the namenode, since it serves directly from RAM), which avoids costly seek operations and yields better overall performance. Upon namenode startup, the fsimage file is loaded into RAM and any changes in the edits file are replayed, bringing the in-memory view of the filesystem up to date.

The NameNode metadata contains information about every file stored in HDFS. The NameNode holds the metadata in RAM for fast access, so any change is reflected in that RAM version. However, this is not sufficient for reliability, since if the NameNode crashes information on the change would be lost. For that reason, the change is also written to a log file known as the edits file.

In more recent versions of Hadoop (specifically, Apache Hadoop 2.0 and CDH4;), the underlying metadata storage was updated to be more resilient to corruption and to support namenode high availability. Conceptually, metadata storage is similar, although transactions are no longer stored in a single edits file. Instead, the namenode periodically rolls the edits file (closes one file and opens a new file), numbering them by transaction ID. It's also possible for the namenode to now retain old copies of both fsimage and edits to better support the ability to roll back in time. Most of these changes won't impact you, although it helps to understand the purpose of the files on disk. That being said, you should never make direct changes to these files unless you really know what you are doing. The rest of this book will simply refer to these files using their base names, fsimage and edits, to refer generally to their function. Recall from earlier that the namenode writes changes only to its write ahead log, edits. Over time, the edits file grows and grows and as with any log-based system such as this, would take a long time to replay in the event of server failure. Similar to a relational database, the edits file needs to be periodically applied to the fsimage file. The problem is that the namenode may not have the available resources-CPU or RAM-to do this while continuing to provide service to the cluster. This is where the secondary namenode comes in.

Question : You have a website www.QuickTechie.com, where you have one month user profile updates log. Now for the

classification analysis you want to save all the data in a single file called QT31012015.log which is approximately in 30GB in size.

Now using the MapReduce ETL job you are able to push this full file in a directory on HDFS called /log/QT/QT31012015.log. This file is divided into the approximately 70 blocks. Select from below what is stored in each block

1. Each block writes a separate .meta file containing information on the filename of which the block is a part

2. Each block contains only data from the /log/QT/QT31012015.log file

3. Access Mostly Uused Products by 50000+ Subscribers

4. Each block has a header and a footer containing metadata of /log/QT/QT31012015.log

Ans: 2

Exp : The HDFS namespace is stored by the NameNode. The NameNode uses a transaction log called the EditLog to persistently record every change that occurs to file system metadata. For example, creating a new file in HDFS causes the NameNode to insert a record into the EditLog indicating this. Similarly, changing the replication factor of a file causes a new record to be inserted into the EditLog. The NameNode uses a file in its local host OS file system to store the EditLog. The entire file system namespace, including the mapping of blocks to files and file system properties, is stored in a file called the FsImage. The FsImage is stored as a file in the NameNode's local file system too.

The NameNode keeps an image of the entire file system namespace and file Blockmap in memory. This key metadata item is designed to be compact, such that a NameNode with 4 GB of RAM is plenty to support a huge number of files and directories. When the NameNode starts up, it reads the FsImage and EditLog from disk, applies all the transactions from the EditLog to the in-memory representation of the FsImage, and flushes out this new version into a new FsImage on disk. It can then truncate the old EditLog because its transactions have been applied to the persistent FsImage. This process is called a checkpoint. In the current implementation, a checkpoint only occurs when the NameNode starts up. Work is in progress to support periodic checkpointing in the near future.

The DataNode stores HDFS data in files in its local file system. The DataNode has no knowledge about HDFS files. It stores each block of HDFS data in a separate file in its local file system. The DataNode does not create all files in the same directory. Instead, it uses a heuristic to determine the optimal number of files per directory and creates subdirectories appropriately. It is not optimal to create all local files in the same directory because the local file system might not be able to efficiently support a huge number of files in a single directory. When a DataNode starts up, it scans through its local file system, generates a list of all HDFS data blocks that correspond to each of these local files and sends this report to the NameNode: this is the Blockreport.When a file is written into HDFS, it is split into blocks. Each block contains just a portion of the file; there is no extra data in the block file. Although there is also a .meta file associated with each block, that file contains checksum data which is used to confirm the integrity of the block when it is read. Nothing on the DataNode contains information about what file the block is a part of; that information is held only on the NameNode.

Question : Which statement is true with respect to MapReduce . or YARN

1. It is the newer version of MapReduce, using this performance of the data processing can be increased.

2. The fundamental idea of MRv2 is to split up the two major functionalities of the JobTracker,

resource management and job scheduling or monitoring, into separate daemons.

3. Access Mostly Uused Products by 50000+ Subscribers

4. All of the above

5. Only 2 and 3 are correct

Ans : 5

Exp : MapReduce has undergone a complete overhaul in hadoop-0.23 and we now have, what we call, MapReduce 2.0 (MRv2) or YARN.

The fundamental idea of MRv2 is to split up the two major functionalities of the JobTracker,

resource management and job scheduling or monitoring, into separate daemons. The idea is to have a global ResourceManager (RM)

and per-application ApplicationMaster (AM). An application is either a single job in the classical sense of Map-Reduce jobs or a DAG of jobs.

Question : Which is the component of the ResourceManager

1. 1. Scheduler

2. 2. Applications Manager

3. Access Mostly Uused Products by 50000+ Subscribers

4. 4. All of the above

5. Only 1 and 2 are correct

Ans : 5

Exp : The ResourceManager has two main components: Scheduler and ApplicationsManager.

The Scheduler is responsible for allocating resources to the various running applications subject to familiar constraints of capacities,

queues etc. The Scheduler is pure scheduler in the sense that it performs no monitoring or tracking of status for the application.

Question :

Schduler of Resource Manager guarantees about restarting failed tasks either due to application failure or hardware failures.

1. True

2. False

1. True

2. False

Ans : 2

Exp : The Scheduler is responsible for allocating resources to the various running applications subject to familiar constraints of

capacities, queues etc. The Scheduler is pure scheduler in the sense that it performs no monitoring or tracking of status

for the application. Also, it offers no guarantees about restarting failed tasks either due to application failure or hardware

failures. The Scheduler performs its scheduling function based the resource requirements of the applications;

it does so based on the abstract notion of a resource Container which incorporates elements such as memory,

cpu, disk, network etc.

Question :

Which statement is true about ApplicationsManager

1. is responsible for accepting job-submissions

2. negotiating the first container for executing the application specific ApplicationMaster

and provides the service for restarting the ApplicationMaster container on failure.

3. Access Mostly Uused Products by 50000+ Subscribers

4. All of the above

5. 1 and 2 are correct

Ans : 5

Exp : The ApplicationsManager is responsible for accepting job-submissions,

negotiating the first container for executing the application specific ApplicationMaster and provides the

service for restarting the ApplicationMaster container on failure.

Question :

NameNode store block locations persistently ?

1. True

2. Flase

Ans : 2

Exp : NameNode does not store block locations persistently, since this information is reconstructed from datanodes when system starts.

Question :

Which tool is used to list all the blocks of a file ?

1. hadoop fs

2. hadoop fsck

3. Access Mostly Uused Products by 50000+ Subscribers

4. Not Possible

Ans : 2

Question : HDFS can not store a file which size is greater than one node disk size :

1. True

2. False

Ans : 2

Exp : It can store because it is divided in block and block can be stored anywhere..

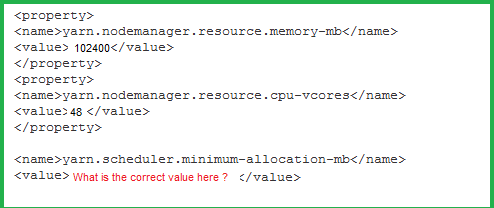

Question : Your HadoopExam Inc cluster has each node in your Hadoop

cluster with running YARN, and has 140GB memory and 40 cores.

Your yarn-site.xml has the configuration as shown in image :

You want YARN to launch a maxinum of 100 Containers per node.

Enter the property value that would restrict YARN from launching

more than 100 containers per node:

1. 2048

2. 1024

3. Access Mostly Uused Products by 50000+ Subscribers

4. 30

Ans : 2

Exp : YARN takes into account all of the available resources on each machine in the cluster. Based on the available resources, YARN negotiates resource requests from applications (such as MapReduce) running in the cluster. YARN then provides processing capacity to each application by allocating Containers. A Container is the basic unit of processing capacity in YARN, and is an encapsulation of resource elements (memory, CPU, etc.). In a Hadoop cluster, it is vital to balance the usage of memory (RAM), processors (CPU cores) and disks so that processing is not constrained by any one of these cluster resources. As a general recommendation, allowing for two Containers per disk and per core gives the best balance for cluster utilization. When determining the appropriate YARN and MapReduce memory configurations for a cluster node, start with the available hardware resources. Specifically, note the following values on each node:

RAM (Amount of memory)

CORES (Number of CPU cores)

DISKS (Number of disks)

The total available RAM for YARN and MapReduce should take into account the Reserved Memory. Reserved Memory is the RAM needed by system processes and other Hadoop processes, such as HBase. Reserved Memory = Reserved for stack memory + Reserved for HBase memory (If HBase is on the same node) Use the following table to determine the Reserved Memory per node.(102400 MB total RAM) / (100 # of Containers) = 1024 MB minimum per Container. The next calculation is to determine the maximum number of Containers allowed per node. The following formula can be used:

# of Containers = minimum of (2*CORES, 1.8*DISKS, (Total available RAM) / MIN_CONTAINER_SIZE)

Where MIN_CONTAINER_SIZE is the minimum Container size (in RAM). This value is dependent on the amount of RAM available -- in smaller memory nodes, the minimum Container size should also be smaller.The final calculation is to determine the amount of RAM per container:

RAM-per-Container = maximum of (MIN_CONTAINER_SIZE, (Total Available RAM) / Containers))

Question : In Acmeshell Inc Hadoop cluster you had set the value of mapred.child.java.opts to -XmxM on all TaskTrackers in the cluster.

You set the same configuration parameter to -Xmx256M on the JobTracker. What size heap will a Map task running on the cluster have?

1. 64MB

2. 128MB

3. Access Mostly Uused Products by 50000+ Subscribers

4. 256MB

5. The job will fail because of the discrepancy

Ans : 2

Exp : Setting mapred.child.java.opts thusly:

SET mapred.child.java.opts="-Xmx4G -XX:+UseConcMarkSweepGC";

is unacceptable. But this seem to go through fine:SET mapred.child.java.opts=-Xmx4G -XX:+UseConcMarkSweepGC; (minus the double-quotes)mapred.child.java.opts is a setting which is read by the TaskTracker when it starts up. The value configured on the JobTracker has no effect; it is the value on the slave node which is used. (The value can be modified by a job, if it contains a different value for the parameter.) Two other guards can restrict task memory usage. Both are designed for admins to enforce QoS, so if you're not one of the admins on the cluster, you may be unable to change them.

The first is the ulimit, which can be set directly in the node OS, or by setting mapred.child.ulimit.

The second is a pair of cluster-wide mapred.cluster.max.*.memory.mb properties that enforce memory usage by comparing job settings mapred.job.map.memory.mb and mapred.job.reduce.memory.mb against those cluster-wide limits.

I am beginning to think that this doesn't even have anything to do with the heap size setting. Tinkering with mapred.child.java.opts in any way is causing the same outcome. For example setting it thusly, SET mapred.child.java.opts="-XX:+UseConcMarkSweepGC"; is having the same result of MR jobs getting killed right away.

Question : You have a website www.QuickTechie.com, where you have one month user profile updates log. Now for the classification analysis you want to

save all the data in a single file called QT31012015.log which is approximately in 30GB in size. Now using the MapReduce ETL job you are able to

push this full file in a directory on HDFS called /log/QT/QT31012015.log. Select the operation which you can do on the /log/QT/QT31012015.log file

1. You can move the file

2. You can overwrite the file by creating a new file with the same name

3. You can rename the file

4. You can update the file's contents

5. You can delete the file

1. 1,2,3

2. 1,3,4

3. Access Mostly Uused Products by 50000+ Subscribers

4. 2,4,5

5. 2,3,4

Question : Indentify the utility that allows you to create and run MapReduce jobs with any

executable or script as the mapper and/or the reducer?

1. Oozie

2. Sqoop

3. Access Mostly Uused Products by 50000+ Subscribers

4. Hadoop Streaming

5. mapred

Ans : 4

Question : You have just executed a MapReduce job. Where is intermediate data written to after being emitted from the

Mapper's map method?

1. Intermediate data in streamed across the network from Mapper to the Reduce and is never written to disk

2. Into in-memory buffers on the TaskTracker node running the Mapper that spill over and are written into HDFS.

3. Access Mostly Uused Products by 50000+ Subscribers

4. Into in-memory buffers that spill over to the local file system (outside HDFS) of the TaskTracker node running the Reducer

5. Into in-memory buffers on the TaskTracker node running the Reducer that spill over and are written into HDFS.

Ans : 3

Exp :The mapper output (intermediate data) is stored on the Local file system (NOT HDFS) of each individual

mapper nodes. This is typically a temporary directory location which can be setup in config by the hadoop

administrator. The intermediate data is cleaned up after the Hadoop Job completes

Question : Identify the MapReduce v (MRv / YARN) daemon responsible for launching application containers and

monitoring application resource usage?

1. ResourceManager

2. NodeManager

3. Access Mostly Uused Products by 50000+ Subscribers

4. ApplicationMasterService

5. TaskTracker.

Ans : 3

Exp :The fundamental idea of MRv2(YARN)is to split up the two major functionalities of the JobTracker, resource

management and job scheduling/monitoring, into separate daemons. The idea is to have a global

ResourceManager (RM) and per-application ApplicationMaster (AM). An application is either a single job in the

classical sense of Map-Reduce jobs or a DAG of jobs.

Question : hdfs dfsadmin -report command display the amount of space remaining in HDFS

1. True

2. False

Ans : 1

Exp :

Question : df command provides information about RAM.

1. True

2. False

Ans : 2

Exp :

Question : The Primary NameNode's Web UI contains information on when it last performed its checkpoint operation.

1. True

2. False

Ans : 2

Exp :

Question : Whenever you want to change the configuration in the slave DataNodes, you have to re-install all the datanodes with the changes.

1. True

2. False

Ans : 2

Exp :

Question : Hive provides additional capabilities to MapReduce.

1. Yes

2. No

Ans : 2

Exp :

Question : You have user profile records in your OLPT database, that you want to join with web logs you have already

ingested into the Hadoop file system. How will you obtain these user records?

1. HDFS command

2. Pig LOAD command

3. Access Mostly Uused Products by 50000+ Subscribers

4. Hive LOAD DATA command

5. Ingest with Flume agents

Ans : 3

Exp :Apache Hadoop and Pig provide excellent tools for extracting and analyzing data from very large Web logs.

We use Pig scripts for sifting through the data and to extract useful information from the Web logs.

We load the log file into Pig using the LOAD command.

raw_logs = LOAD 'apacheLog.log' USING TextLoader AS (line:chararray);

Note 1:

Data Flow and Components

*Content will be created by multiple Web servers and logged in local hard discs. This content will then be pushed to HDFS using FLUME framework.

FLUME has agents running on Web servers; these are machines that collect data intermediately using collectors and finally push that data to HDFS.

*Pig Scripts are scheduled to run using a job scheduler (could be cron or any sophisticated batch job solution). These scripts actually analyze

the logs on various dimensions and extract the results. Results from Pig are by default inserted into HDFS, but we can use storage implementation

for other repositories also such as HBase, MongoDB, etc. We have also tried the solution with HBase (please see the implementation section). Pig

Scripts can either push this data to HDFS and then MR jobs will be required to read and push this data into HBase, or Pig scripts can push this data into HBase directly. In this article, we use scripts to push data onto HDFS, as we are showcasing the Pig framework applicability for log analysis at large scale. *The database HBase will have the data processed by Pig scripts ready for reporting and further slicing and dicing. *The data-access Web service is a REST-based service that eases the access and integrations with data clients. The client can be in any language to access REST-based API. These clients could be BI- or UI-based

clients.

Note 2:

The Log Analysis Software Stack

*Hadoop is an open source framework that allows users to process very large data in parallel. It's based on the framework that supports Google search engine. The Hadoop core is mainly divided into two modules: 1.HDFS is the Hadoop Distributed File System. It allows you to store large amounts of data using multiple

commodity servers connected in a cluster. 2.Map-Reduce (MR) is a framework for parallel processing of large data sets. The default implementation is

bonded with HDFS. *The database can be a NoSQL database such as HBase. The advantage of a NoSQL database is that it provides scalability for the reporting module as well, as we can keep historical processed data for reporting purposes. HBase is an open source columnar DB or NoSQL DB, which uses HDFS. It can also use MR jobs to process data. It gives real-time, random read/write access to very large data sets -- HBase can save very large tables having million of rows. It's a distributed database and can also keep multiple versions of a single row. *The Pig framework is an open source platform for analyzing large data sets and is implemented as a layered language over the Hadoop Map-Reduce framework. It is built to ease the work of developers who write code in the Map-Reduce format, since code in Map-Reduce format needs to be written in Java. In contrast, Pig enables users to write code in a scripting language. *Flume is a distributed, reliable and available service for collecting, aggregating and moving a large amount of log data (src flume-wiki). It was built to push large logs into Hadoop-HDFS for further processing. It's a data flow solution, where there is an originator and destination for each node and is divided into Agent and Collector tiers for collecting logs and pushing them to destination storage.

============================================================================================

As you have completed third Question Paper, we would like to suggest you that, please

do not memorize any question and answer, understand each Question and Answers in depth.

Also do not forget to refer revision notes, read it continuously and understand in depth.

Before Appearing in the real exam, please drop an email to hadoopexam@gmail.com,

so we can provide you any update if it is there.

============================================================================================

Question : A client application creates an HDFS file named foo.txt with a replication factor of . Identify which best

describes the file access rules in HDFS if the file has a single block that is stored on data nodes A, B and C?

1. The file will be marked as corrupted if data node B fails during the creation of the file.

2. Each data node locks the local file to prohibit concurrent readers and writers of the file.

3. Access Mostly Uused Products by 50000+ Subscribers

4. Each data node stores a copy of the file in the local file system with the same name as the HDFS file.

5. The file can be accessed if at least one of the data nodes storing the file is available.

Ans : 5

Exp :HDFS keeps three copies of a block on three different datanodes to protect against true data corruption.

HDFS also tries to distribute these three replicas on more than one rack to protect against data availability

issues. The fact that HDFS actively monitors any failed datanode(s) and upon failure detection immediately

schedules re-replication of blocks (if needed) implies that three copies of data on three different nodes is

sufficient to avoid corrupted files.

Note:

HDFS is designed to reliably store very large files across machines in a large cluster. It stores each file as a

sequence of blocks; all blocks in a file except the last block are the same size. The blocks of a file are

replicated for fault tolerance. The block size and replication factor are configurable per file. An application can

specify the number of replicas of a file. The replication factor can be specified at file creation time and can be

changed later. Files in HDFS are write-once and have strictly one writer at any time. The NameNode makes all

decisions regarding replication of blocks. HDFS uses rack-aware replica placement policy. In default

configuration there are total 3 copies of a datablock on HDFS, 2 copies are stored on datanodes on same rack

and 3rd copy on a different rack.

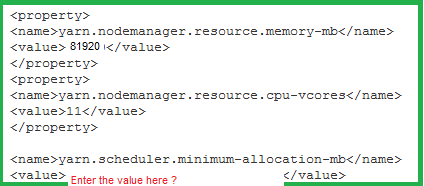

Question : Each node in your Hadoop cluster, running YARN, has GB memory. Your yarn-site.xml has the given configuration:

You want YARN to launch a maxinum of 20 Containers per node. Enter the property value that would restrict

YARN from launching more than 10 containers per node:

1. 12

2. 10

3. Access Mostly Uused Products by 50000+ Subscribers

4. 4096

Ans : 4

Exp : As per formula (81920 MB total RAM) / (20 # of Containers) = 4096 MB minimum per Container

Question : In the QuickTechie Inc, You have configured Hadoop MRv cluster wih dfs.hosts property to point to a file on your NameNode

listing all the DataNode hosts allowed to join your cluster. You add a new node to the cluster and update dfs.hosts to include the new host.

Now without re-starting the cluster you want to NameNode reads the change, how this can be done?

1. There is nothing to be done because NameNode reads the file periodically in every 15 minutes.

2. At least you have to fire a command hadoop dfsadmin -refreshNodes.

3. Access Mostly Uused Products by 50000+ Subscribers

4. You must restart full cluster.

Ans : 2

Exp : I have a new node I want to add to a running Hadoop cluster; how do I start services on just one node?

This also applies to the case where a machine has crashed and rebooted, etc, and you need to get it to rejoin the cluster. You do not need to shutdown and/or restart the entire cluster in this case.

First, add the new node's DNS name to the conf/slaves file on the master node.

Then log in to the new slave node and execute:

$ cd path/to/hadoop

$ bin/hadoop-daemon.sh start datanode

$ bin/hadoop-daemon.sh start tasktracker

If you are using the dfs.include/mapred.include functionality, you will need to additionally add the node to the dfs.include/mapred.include file, then issue hadoop dfsadmin -refreshNodes and hadoop mradmin -refreshNodes so that the NameNode and JobTracker know of the additional node that has been added.The dfs.hosts property is optional, but if it is configured, it lists all of the machines which are allowed to act as DataNodes on the cluster. The NameNode reads this file when it starts up. To force the NameNode to re-read the file, you should issue the hadoop dfsadmin -refreshNodes command. The NameNode will, of course, also re-read the file if it is restarted, but that is not necessary, and it is certainly not necessary to restart any of the DataNodes on the cluster. dfs.hosts Out of the box, all datanodes are permitted to connect to the namenode and join the cluster. A datanode, upon its first connection to a namenode, captures the namespace ID (a unique identifier generated for the filesystem at the time it’s formatted), and is immediately eligible to receive blocks. It is possible for administrators to specify a file that contains a list of hostnames of datanodes that are explicitly allowed to connect and join the cluster, in which case, all others are denied. Those with stronger security requirements or who wish to explicitly control access will want to use this feature. The format of the file specified by dfs.hosts is a newline separated list of hostnames or IP addresses, depending on how machines identify themselves to the cluster.

Question : You have to provide the Security on Hadoop Cluster for that you have configured the Kerberos: The Network Authentication Protocol.

Select the features which are provided by Kerberos.

1. There will be a central KDC server which will authenticate the user for accessing cluster resources.

2. Root access to the cluster for users hdfs and mapred but non-root access for clients

3. Encryption for data during transfer between the Mappers and Reducers

4. User will be authenticated on all remote procedure calls (RPCs)

5. Before storing the data on the disk they will be encrypted.

1. 1,4

2. 2,4

3. Access Mostly Uused Products by 50000+ Subscribers

4. 4,5

5. 1,5

Ans : 1

Exp : Kerberos principals for Hadoop Daemons and Users : For running hadoop service daemons in Hadoop in secure mode, Kerberos principals are required. Each service reads auhenticate information saved in keytab file with appropriate permission. HTTP web-consoles should be served by principal different from RPC's one.

Subsections below shows the examples of credentials for Hadoop services.Kerberos is a security system which provides authentication to the Hadoop cluster. It has nothing to do with data encryption, nor does it control login to the cluster nodes themselves. Instead, it concerns itself with ensuring that only authorized users can access the cluster via HDFS and MapReduce. (It also controls other access, such as via Hive, Sqoop etc.)

Hadoop supports strong authentication using the Kerberos protocol. Kerberos was developed by a team at MIT to provide strong authentication of clients to a server and is well-known to many enterprises. When operating in secure mode, all clients must provide a valid Kerberos ticket that can be verified by the server. In addition to clients being authenticated, daemons are also verified. In the case of HDFS, for instance, a datanode is not permitted to connect to the namenode unless it provides a valid ticket within each RPC. All of this amounts to an environment where every daemon and client application can be cryptographically verified as a known entityprior to allowing any operations to be performed, a desirable feature of any data storage and processing system.The idea is to configure Cloudera Manager and CDH to talk to the KDC which was set up specifically for Hadoop and then create a unidirectional cross-realm trust between this KDC and the (production) Active Directory or KDC.

The Kerberos integration using one-way cross-realm trust is the recommended solution by Cloudera. Why? Because the hadoop services also need to use certificates when Kerberos is enabled. In case of a large enough cluster we can have quite an increased number of certificate requests and added users to the Kerberos server (we will have one per service -i.e. hdfs, mapred, etc. - per server). In case we would not configure a one-way cross-realm trust, all these users would end up in our production Active Directory or KDC server. The Kerberos server dedicated for the hadoop cluster could contain all the hadoop related users (mostly services and hosts), then connect to the production server to obtain the actual users of the cluster. It would also act as a shield, catching all the certificate requests from the hadoop service/host requests and only contacting the Active Directory or KDC production server when a 'real' user wants to connect. To see why Cloudera recommends setting up a KDC with one-way cross-realm trust read the Integrating Hadoop Security with Active Directory site.

Question : In Acmeshell Inc you are configuring a Hadoop Cluster with the disk configuration for a slave node

where the each slave datanode has 12 x 1TB drives, select the appropriate disk configuration.

1. 2 RAID 12 array

2. 3 RAID 6 arrays

3. Access Mostly Uused Products by 50000+ Subscribers

4. None of the above is correct

Ans : 3

Exp : The first step in choosing a machine configuration is to understand the type of hardware your operations team already manages. Operations teams often have opinions or hard requirements about new machine purchases, and will prefer to work with hardware with which they’re already familiar. Hadoop is not the only system that benefits from efficiencies of scale. Again, as a general suggestion, if the cluster is new or you can’t accurately predict your ultimate workload, we advise that you use balanced hardware. There are four types of roles in a basic Hadoop cluster: NameNode (and Standby NameNode), JobTracker, TaskTracker, and DataNode. (A node is a machine performing a particular task.) Most machines in your cluster will perform two of these roles, functioning as both DataNode (for data storage) and TaskTracker (for data processing). Here are the recommended specifications for DataNode/TaskTrackers in a balanced Hadoop cluster:

12-24 1-4TB hard disks in a JBOD (Just a Bunch Of Disks) configuration

2 quad-/hex-/octo-core CPUs, running at least 2-2.5GHz

64-512GB of RAM

Bonded Gigabit Ethernet or 10Gigabit Ethernet (the more storage density, the higher the network throughput needed) The NameNode role is responsible for coordinating data storage on the cluster, and the JobTracker for coordinating data processing. (The Standby NameNode should not be co-located on the NameNode machine for clusters and will run on hardware identical to that of the NameNode.) Cloudera recommends that customers purchase enterprise-class machines for running the NameNode and JobTracker, with redundant power and enterprise-grade disks in RAID 1 or 10 configurations.The NameNode will also require RAM directly proportional to the number of data blocks in the cluster. A good rule of thumb is to assume 1GB of NameNode memory for every 1 million blocks stored in the distributed file system. With 100 DataNodes in a cluster, 64GB of RAM on the NameNode provides plenty of room to grow the cluster. We also recommend having HA configured on both the NameNode and JobTracker, features that have been available in the CDH4 line for some time. Here are the recommended specifications for NameNode/JobTracker/Standby NameNode nodes. The drive count will fluctuate depending on the amount of redundancy: 4–6 1TB hard disks in a JBOD configuration (1 for the OS, 2 for the FS image [RAID 1], 1 for Apache ZooKeeper, and 1 for Journal node)

2 quad-/hex-/octo-core CPUs, running at least 2-2.5GHz

64-128GB of RAM

Bonded Gigabit Ethernet or 10Gigabit Ethernet

Remember, the Hadoop ecosystem is designed with a parallel environment in mind. If you expect your Hadoop cluster to grow beyond 20 machines, we recommend that the initial cluster be configured as if it were to span two racks, where each rack has a top-of-rack 10 GigE switch. As the cluster grows to multiple racks, you will want to add redundant core switches to connect the top-of-rack switches with 40GigE. Having two logical racks gives the operations team a better understanding of the network requirements for intra-rack and cross-rack communication. With a Hadoop cluster in place, the team can start identifying workloads and prepare to benchmark those workloads to identify hardware bottlenecks. After some time benchmarking and monitoring, the team will understand how additional machines should be configured. Heterogeneous Hadoop clusters are common, especially as they grow in size and number of use cases – so starting with a set of machines that are not "ideal" for your workload will not be a waste of time. Cloudera Manager offers templates that allow different hardware profiles to be managed in groups, making it simple to manage heterogeneous clusters. Below is a list of various hardware configurations for different workloads, including our original "balanced" recommendation: Light Processing Configuration (1U/machine): Two hex-core CPUs, 24-64GB memory, and 8 disk drives (1TB or 2TB)

Balanced Compute Configuration (1U/machine): Two hex-core CPUs, 48-128GB memory, and 12 – 16 disk drives (1TB or 2TB) directly attached using the motherboard controller. These are often available as twins with two motherboards and 24 drives in a single 2U cabinet.

Storage Heavy Configuration (2U/machine): Two hex-core CPUs, 48-96GB memory, and 16-24 disk drives (2TB – 4TB). This configuration will cause high network traffic in case of multiple node/rack failures. Compute Intensive Configuration (2U/machine): Two hex-core CPUs, 64-512GB memory, and 4-8 disk drives (1TB or 2TB)Hadoop performs best when disks on slave nodes are configured as JBOD (Just a Bunch Of Disks). There is no need for RAID on individual nodes Hadoop replicates each block three times on different nodes for reliability. A single Linux LVM will not perform as well as having the disks configured as separate volumes and, importantly, the failure of one disk in an LVM volume would result in the loss of all data on that volume, whereas the failure of a single disk if all were configured as separate volumes would only result in the loss of data on that one disk (and, of course, each block on that disk is replicated on two other nodes in the cluster).

Question : Which project gives you a distributed, Scalable, data store that allows you random, realtime read/write access

to hundreds of terabytes of data?

1. HBase

2. Hue

3. Access Mostly Uused Products by 50000+ Subscribers

4. Hive

5. Oozie

Ans : 1

Exp : Use Apache HBase when you need random, realtime read/write access to your Big Data.

This HBase goal is the hosting of very large tables

- billions of rows X millions of columns

- atop clusters of commodity hardware. Apache HBase is an open-source, distributed, versioned, columnoriented

store modeled after Google's Bigtable:

- A Distributed Storage System for Structured Data. Just as Bigtable leverages the distributed data storage provided by the Google File System, Apache HBase provides Bigtable-like capabilities on top of Hadoop and HDFS.

Features of HBases

- Linear and modular scalability.

- Strictly consistent reads and writes.

- Automatic and configurable sharding of tables

- Automatic failover support between RegionServers.

- Convenient base classes for backing Hadoop MapReduce jobs with Apache HBase tables.

- Easy to use Java API for client access.

- Block cache and Bloom Filters for real-time queries.

- Query predicate push down via server side Filters

- Thrift gateway and a REST-ful Web service that supports XML, Protobuf, and binary data encoding options

- Extensible jruby-based (JIRB) shell

- Support for exporting metrics via the Hadoop metrics subsystem to files or Ganglia; or via JMX

Question : Identify the tool best suited to import a portion of a relational database every day as files into HDFS, and

generate Java classes to interact with that imported data?

1. Oozie

2. Flume

3. Access Mostly Uused Products by 50000+ Subscribers

4. Hue

5. Sqoop

Ans : 5

Exp :Sqoop ("SQL-to-Hadoop") is a straightforward command-line tool with the following capabilities:

Imports individual tables or entire databases to files in HDFS Generates Java classes to allow you to interact

with your imported data Provides the ability to import from SQL databases straight into your Hive data

warehouse

Data Movement Between Hadoop and Relational Databases

Data can be moved between Hadoop and a relational database as a bulk data transfer, or relational tables can

be accessed from within a MapReduce map function.

Note:

* Cloudera's Distribution for Hadoop provides a bulk data transfer tool (i.e., Sqoop) that imports individual

tables or entire databases into HDFS files. The tool also generates Java classes that support interaction with

the imported data. Sqoop supports all relational databases over JDBC, and Quest Software provides a

connector (i.e., OraOop) that has been optimized for access to data residing in Oracle databases.

Question : You have a directory named jobdata in HDFS that contains four files: _first.txt, second.txt, .third.txt and #data.

txt. How many files will be processed by the FileInputFormat.setInputPaths () command when it's given a path

object representing this directory?

1. Four, all files will be processed

2. Three, the pound sign is an invalid character for HDFS file names

3. Access Mostly Uused Products by 50000+ Subscribers

4. None, the directory cannot be named jobdata

5. One, no special characters can prefix the name of an input file

Ans : 3

Exp :Files starting with '_' are considered 'hidden' like unix files startingwith '.'.

# characters are allowed in HDFS file names.

Question : Table metadata in Hive is:

1. Stored as metadata on the NameNode.

2. Stored along with the data in HDFS.

3. Access Mostly Uused Products by 50000+ Subscribers

4. Stored in ZooKeeper.

Ans : 3

Exp :

By default, hive use an embedded Derby database to store metadata information. The metastore is the "glue"

between Hive and HDFS. It tells Hive where your data files live in HDFS, what type of data they contain, what

tables they belong to, etc.

The Metastore is an application that runs on an RDBMS and uses an open source ORM layer called

DataNucleus, to convert object representations into a relational schema and vice versa. They chose this

approach as opposed to storing this information in hdfs as they need the Metastore to be very low latency. The

DataNucleus layer allows them to plugin many different RDBMS technologies.

*By default, Hive stores metadata in an embedded Apache Derby database, and other client/server databases

like MySQL can optionally be used.

*features of Hiveinclude:

Metadata storage in an RDBMS, significantly reducing the time to perform semantic checks during query

execution.

Store Hive Metadata into RDBMS

Question : While working the HadoopExam Inc, you are running two Hadoop clusters named hadoopexam and hadoopexam. Both are running the same version of

Hadoop 3.3, Which command copies the data inside /home/he1/ from hadoopexam1 to hadoopexam2 into the directory /home/he2/?

1. $ hadoop distcp hdfs://hadoopexam1/home/he1 hdfs://hadoopexam2/home/he2/

2. $ hadoop distcp hadoopexam1:/home/he1 hadoopexam2:/home/he2/

3. Access Mostly Uused Products by 50000+ Subscribers

4. $ distcp hdfs://hadoopexam1/home/he1 hdfs://hadoopexam2/home/he2/

Ans : 1

Exp : DistCp (distributed copy) is a tool used for large inter/intra-cluster copying. It uses MapReduce to effect its distribution, error handling and recovery, and reporting. It expands a list of files and directories into input to map tasks, each of which will copy a partition of the files specified in the source list. Its MapReduce pedigree has endowed it with some quirks in both its semantics and execution. The purpose of this document is to offer guidance for common tasks and to elucidate its model. Usage The most common invocation of DistCp is an inter-cluster copy: bash$ hadoop distcp hdfs://nn1:8020/foo/bar \ hdfs://nn2:8020/bar/foo This will expand the namespace under /foo/bar on nn1 into a temporary file, partition its contents among a set of map tasks, and start a copy on each TaskTracker from nn1 to nn2. Note that DistCp expects absolute paths. One can also specify multiple source directories on the command line:

bash$ hadoop distcp hdfs://nn1:8020/foo/a hdfs://nn1:8020/foo/b hdfs://nn2:8020/bar/foo

Or, equivalently, from a file using the -f option: bash$ hadoop distcp -f hdfs://nn1:8020/srclist hdfs://nn2:8020/bar/foo

Where srclist contains hdfs://nn1:8020/foo/a hdfs://nn1:8020/foo/b

When copying from multiple sources, DistCp will abort the copy with an error message if two sources collide, but collisions at the destination are resolved per the options specified. By default, files already existing at the destination are skipped (i.e. not replaced by the source file). A count of skipped files is reported at the end of each job, but it may be inaccurate if a copier failed for some subset of its files, but succeeded on a later attempt (see Appendix). It is important that each TaskTracker can reach and communicate with both the source and destination file systems. For HDFS, both the source and destination must be running the same version of the protocol or use a backwards-compatible protocol (see Copying Between Versions). After a copy, it is recommended that one generates and cross-checks a listing of the source and destination to verify that the copy was truly successful. Since DistCp employs both MapReduce and the FileSystem API, issues in or between any of the three could adversely and silently affect the copy. Some have had success running with -update enabled to perform a second pass, but users should be acquainted with its semantics before attempting this. It's also worth noting that if another client is still writing to a source file, the copy will likely fail. Attempting to overwrite a file being written at the destination should also fail on HDFS. If a source file is (re)moved before it is copied, the copy will fail with a FileNotFoundException. hadoop distcp is a command which runs a Map-only MapReduce job to copy data from one cluster to another (or within a single cluster).

The syntax, if both clusters are running the same version of Hadoop, is : hadoop distcp hdfs:///path/to/source hdfs:///path/to/destination

Question : In QuickTechie Inc Hadoop MRv cluster which is installed on multirack without any particular tpology script. You have defualt hadoop configuration.

Please select the correct statement which applies

1. NameNode will refuse to write any data until a rack topology script has been provided.

2. Namenode will automatically determine the rack locations of the nodes based on network latency.

3. Access Mostly Uused Products by 50000+ Subscribers

4. NameNode will randomly assign nodes to racks. It will then write one copy of the block to a node on one rack, and two copies to nodes on another rack.

Ans : 3

Exp : Topology scripts are used by Hadoop to determine the rack location of nodes. This information is used by Hadoop to replicate block data to redundant racks.If no rack topology script has been created, Hadoop has no knowledge of which rack each node is in. It cannot determine this dynamically, so it assumes that all nodes are in the same rack; when it chooses which nodes should be used for each of the three replicas of a block it therefore cannot have any consideration for rack location.Topology Script

A sample Bash shell script:

HADOOP_CONF=/etc/hadoop/conf

while [ $# -gt 0 ] ; do

nodeArg=$1

exec ${HADOOP_CONF}/topology.data

result=""

while read line ; do

ar=( $line )

if [ "${ar[0]}" = "$nodeArg" ] ; then

result="${ar[1]}"

fi

done

shift

if [ -z "$result" ] ; then

echo -n "/default/rack "

else

echo -n "$result "

fi

done

Topology data

hadoopdata1.ec.com /dc1/rack1

hadoopdata1 /dc1/rack1

10.1.1.1 /dc1/rack2

Question : You have MRv cluster already setup in Acmeshell Inc datacenter Geneva, One of the admin in your data center unplugs a slave data node.

From the Hadoop Web UI it is known that the cluster size has contacted and express concerns that there could be data loss and HDFS performance will degrade.

And this is the default hadoop configuration cluster. What can you tell the users?

1. It must not be ignored, The NameNode will re-replicate the data after the administrator issues a special command.

The data is not lost but is under-replicated until the administrator issues this command.

2. It should be ignored, After identifying the outage, the NameNode will naturally re-replicate the data and there will be no data loss.

The administrator can re-add the DataNode at any time. Data will be under-replicated but will become properly replicated over time.

3. Access Mostly Uused Products by 50000+ Subscribers

failures to the DataNode, so the end users can disregard such warnings.

4. It must not be ignored. The HDFS filesystem is corrupt until the the administrator re-adds the DataNode to the cluster.

The warnings associated with the event should be reported.

Ans : 2

Exp : Data Disk Failure, Heartbeats and Re-Replication

Each DataNode sends a Heartbeat message to the NameNode periodically. A network partition can cause a subset of DataNodes to lose connectivity with the NameNode. The NameNode detects this condition by the absence of a Heartbeat message. The NameNode marks DataNodes without recent Heartbeats as dead and does not forward any new IO requests to them. Any data that was registered to a dead DataNode is not available to HDFS any more. DataNode death may cause the replication factor of some blocks to fall below their specified value. The NameNode constantly tracks which blocks need to be replicated and initiates replication whenever necessary. The necessity for re-replication may arise due to many reasons: a DataNode may become unavailable, a replica may become corrupted, a hard disk on a DataNode may fail, or the replication factor of a file may be increased. Cluster Rebalancing : The HDFS architecture is compatible with data rebalancing schemes. A scheme might automatically move data from one DataNode to another if the free space on a DataNode falls below a certain threshold. In the event of a sudden high demand for a particular file, a scheme might dynamically create additional replicas and rebalance other data in the cluster. These types of data rebalancing schemes are not yet implemented.

Data Integrity : It is possible that a block of data fetched from a DataNode arrives corrupted. This corruption can occur because of faults in a storage device, network faults, or buggy software. The HDFS client software implements checksum checking on the contents of HDFS files. When a client creates an HDFS file, it computes a checksum of each block of the file and stores these checksums in a separate hidden file in the same HDFS namespace. When a client retrieves file contents it verifies that the data it received from each DataNode matches the checksum stored in the associated checksum file. If not, then the client can opt to retrieve that block from another DataNode that has a replica of that block. Metadata Disk Failure : The FsImage and the EditLog are central data structures of HDFS. A corruption of these files can cause the HDFS instance to be non-functional. For this reason, the NameNode can be configured to support maintaining multiple copies of the FsImage and EditLog. Any update to either the FsImage or EditLog causes each of the FsImages and EditLogs to get updated synchronously. This synchronous updating of multiple copies of the FsImage and EditLog may degrade the rate of namespace transactions per second that a NameNode can support. However, this degradation is acceptable because even though HDFS applications are very data intensive in nature, they are not metadata intensive. When a NameNode restarts, it selects the latest consistent FsImage and EditLog to use.

The NameNode machine is a single point of failure for an HDFS cluster. If the NameNode machine fails, manual intervention is necessary. Currently, automatic restart and failover of the NameNode software to another machine is not supported.HDFS is designed to deal with the loss of DataNodes automatically. Each DataNode heartbeats in to the NameNode every three seconds. If the NameNode does not receive a heartbeat from the DataNode for a certain amount of time (10 minutes and 30 seconds by default) it begins to re-replicate any blocks which were on the now-dead DataNode. This re-replication requires no manual input by the system administrator Data Disk Failure, Heartbeats and Re-Replication Each DataNode sends a Heartbeat message to the NameNode periodically. A network partition can cause a subset of DataNodes to lose connectivity with the NameNode. The NameNode detects this condition by the absence of a Heartbeat message. The NameNode marks DataNodes without recent Heartbeats as dead and does not forward any new IO requests to them. Any data that was registered to a dead DataNode is not available to HDFS any more. DataNode death may cause the replication factor of some blocks to fall below their specified value. The NameNode constantly tracks which blocks need to be replicated and initiates replication whenever necessary. The necessity for re-replication may arise due to many reasons: a DataNode may become unavailable, a replica may become corrupted, a hard disk on a DataNode may fail, or the replication factor of a file may be increased.

Question : You need to move a file titled "weblogs" into HDFS. When you try to copy the file, you can't. You know you

have ample space on your DataNodes. Which action should you take to relieve this situation and store more

files in HDFS?

1. Increase the block size on all current files in HDFS.

2. Increase the block size on your remaining files.

3. Access Mostly Uused Products by 50000+ Subscribers

4. Increase the amount of memory for the NameNode.

5. Increase the number of disks (or size) for the NameNode.

Ans : 3

Exp :

*-put localSrc destCopies the file or directory from the local file system identified by localSrc to dest within the DFS.

*What is HDFS Block size? How is it different from traditional file system block size? In HDFS data is split into

blocks and distributed across multiple nodes in the cluster. Each block is typically 64Mb or 128Mb in size.

Each block is replicated multiple times. Default is to replicate each block three times. Replicas are stored on

different nodes. HDFS utilizes the local file system to store each HDFS block as a separate file. HDFS Block

size can not be compared with the traditional file system block size.

Question : Workflows expressed in Oozie can contain:

1. Sequences of MapReduce and Pig. These sequences can be combined with other actions including forks,

decision points, and path joins.

2. Sequences of MapReduce job only; on Pig on Hive tasks or jobs. These MapReduce sequences can be

combined with forks and path joins.

3. Access Mostly Uused Products by 50000+ Subscribers

handlers but no forks.

4. Iterntive repetition of MapReduce jobs until a desired answer or state is reached.

Ans : 1

Exp :

Oozie workflow is a collection of actions (i.e. Hadoop Map/Reduce jobs, Pig jobs) arranged in a control

dependency DAG (Direct Acyclic Graph), specifying a sequence of actions execution. This graph is specified

in hPDL (a XML Process Definition Language). hPDL is a fairly compact language, using a limited amount of

flow control and action nodes. Control nodes define the flow of execution and include beginning and end of a

workflow (start, end and fail nodes) and mechanisms to control the workflow execution path ( decision, fork

and join nodes).

Workflow definitions Currently running workflow instances, including instance states and variables

Oozie is a Java Web-Application that runs in a Java servlet-container - Tomcat and uses a database to store

Question : In the QuickTechie Inc you have slave nodes Hadoop cluster configured with the master nodes.

As an administrator you have executed

hadoop fsck /

Select the output from above command.

1. It will show the number of under-replicated blocks in the cluster

2. It will show the number of nodes in each rack in the cluster

3. It will also show the the number of DataNodes in the cluster

4. Hash Key of the the location of each block in the cluster

5. Metadata of all the files in the cluster

1. 1,5

2. 3,4

3. Access Mostly Uused Products by 50000+ Subscribers

4. 2,5

5. 4,5

Ans : 3

Exp : A file in HDFS can

become corrupt if all copies of one or more blocks are unavailable, which would leave a hole in the file of up to the block size of the file, and any attempt to read a file in this state would result in a failure in the form of an exception. For this problem to occur, all copies of a block must become unavailable fast enough for the system to not have enough time to detect the failure and create a new replica of the data. Despite being rare, catastrophic failures like this can happen and when they do, administrators need a tool to detect the problem and help them find the missing blocks. By default, the fsck tool generates a summary report that lists the overall health of the filesystem. HDFS is considered healthy if-and only if-all files have a minimum number of replicas available.

In its standard form, hadoop fsck / will return information about the cluster including the number of DataNodes and the number of under-replicated blocks.

To view a list of all the files in the cluster, the command would be hadoop fsck / -files

To view a list of all the blocks, and the locations of the blocks, the command would be hadoop fsck / -files -blocks -locations

Additionally, the -racks option would display the rack topology information for each block.

Back up the following critical data before attempting an upgrade. On the node that hosts the NameNode, open the Hadoop Command line shortcut that opens a command window in the Hadoop directory. Run the following commands:

Run the fsck command as the HDFS Service user and fix any errors. (The resulting file contains a complete block map of the file system):

hadoop fsck / -files -blocks -locations > dfs-old-fsck-1.log

Capture the complete namespace directory tree of the file system:

hadoop fs -lsr / > dfs-old-lsr-1.log

Create a list of DataNodes in the cluster:

hadoop dfsadmin -report > dfs-old-report-1.log

Capture output from fsck command:

hadoop fsck / -block -locations -files > fsck-old-report-1.log

Question : In the Acmeshell Inc. you have nodes Hadoop YARN cluster setup in the rack.

Select the scenario from given below where you are not going to lose the data

1. When first 100 node fails.

2. 5 racks simultaneously fail

3. Access Mostly Uused Products by 50000+ Subscribers

at once

4. 300 DataNodes fail at once

5. An entire rack fails

Ans : 5

Exp : For small clusters in which all servers are connected by a single switch, there are only two levels of locality: "on-machine" and "off-machine." When loading data from a DataNode's local drive into HDFS, the NameNode will schedule one copy to go into the local DataNode, and will pick two other machines at random from the cluster. For larger Hadoop installations which span multiple racks, it is important to ensure that replicas of data exist on multiple racks. This way, the loss of a switch does not render portions of the data unavailable due to all replicas being underneath it. HDFS can be made rack-aware by the use of a script which allows the master node to map the network topology of the cluster. While alternate configuration strategies can be used, the default implementation allows you to provide an executable script which returns the "rack address" of each of a list of IP addresses. The network topology script receives as arguments one or more IP addresses of nodes in the cluster. It returns on stdout a list of rack names, one for each input. The input and output order must be consistent. To set the rack mapping script, specify the key topology.script.file.name in conf/hadoop-site.xml. This provides a command to run to return a rack id; it must be an executable script or program. By default, Hadoop will attempt to send a set of IP addresses to the file as several separate command line arguments. You can control the maximum acceptable number of arguments with the topology.script.number.args key.

Rack ids in Hadoop are hierarchical and look like path names. By default, every node has a rack id of /default-rack. You can set rack ids for nodes to any arbitrary path, e.g., /foo/bar-rack. Path elements further to the left are higher up the tree. Thus a reasonable structure for a large installation may be /top-switch-name/rack-name.Hadoop rack ids are not currently expressive enough to handle an unusual routing topology such as a 3-d torus; they assume that each node is connected to a single switch which in turn has a single upstream switch. This is not usually a problem, however. Actual packet routing will be directed using the topology discovered by or set in switches and routers. The Hadoop rack ids will be used to find "near" and "far" nodes for replica placement (and in 0.17, MapReduce task placement). The following example script performs rack identification based on IP addresses given a hierarchical IP addressing scheme enforced by the network administrator. This may work directly for simple installations; more complex network configurations may require a file- or table-based lookup process. Care should be taken in that case to keep the table up-to-date as nodes are physically relocated, etcBy default, Hadoop replicates each block three times. The standard rack placement policy, assuming a rack topology script has been defined, is to place the first copy of the block on a node in one rack, and the remaining two copies on two nodes in a different rack. Because of this, an entire rack failing will not result in the total loss of any blocks, since at least one copy will be present in a different rack. Losing even ten percent of the nodes across multiple racks could result in all three nodes which contain a particular block being lost.

Question : In the QuickTechie Inc you found that before upgrading from MRv to MRv, you don't find that Pig is already installed in

the Hadoop cluster. But now data management research team had requested you to provide Pig support as well on new Hadoop cluster.

So what are you going to do for providing Pig infrastructure as well on the new infrastructure?

1. Install the Pig interpreter on the client machines only.

2. Install the Pig interpreter on the master node which is running the JobTracker.

3. Access Mostly Uused Products by 50000+ Subscribers

4. Install the Pig interpreter on all nodes in the cluster, and the client machines.

Ans : 1

Exp : Pig[1] is a high-level platform for creating MapReduce programs used with Hadoop. The language for this platform is called Pig Latin.[1] Pig Latin abstracts the programming from the Java MapReduce idiom into a notation which makes MapReduce programming high level, similar to that of SQL for RDBMS systems. Pig Latin can be extended using UDF (User Defined Functions) which the user can write in Java, Python, JavaScript, Ruby or Groovy[2] and then call directly from the language. Pig is a high-level abstraction on top of MapReduce which allows developers to process data on the cluster using a scripting language rather than having to write Hadoop MapReduce. The Pig interpreter runs on the client; it processes the user's PigLatin script and then submits MapReduce jobs to the cluster based on the script. There is no need to have anything installed on the cluster; all that is required is the interpreter on the client machine.

Question : You have submitted two ETL MapReduce job on Hadoop YARN cluster as below.

1. First job named TSETL executed with the output directory name as TSETL_19012015

2. Second job named HOCETL executed with the output directory name as TSETL_19012015

What would happen when you execute the second job.

1. An error will occur immediately, because the output directory must not already exist when a MapReduce job commences.

2. An error will occur after the Mappers have completed but before any Reducers begin to run, because the output path must not exist when the Reducers commence.

3. Access Mostly Uused Products by 50000+ Subscribers

4. The job will run successfully. Output from the Reducers will overwrite the contents of the existing directory.

Ans : 1

Exp : When a job is run, one of the first things done on the client is a check to ensure that the output directory does not already exist. If it does, the client will immediately terminate. The job will not be submitted to the cluster; the check takes place on the client.

Question : Select the correct statement for OOzie workflow

1. OOzie workflow runs on a server which is typically outside of Hadoop Cluster

2. OOzie workflow definition are submitted via HTTP.

3. Access Mostly Uused Products by 50000+ Subscribers

4. All 1,2 and 3 are correct

5. Only 1 and 3 are correct

Ans : 4

Exp : Check the URL http://oozie.apache.org/docs/3.1.3-incubating/WorkflowFunctionalSpec.html

Question : Which of the following is correct for the OOzie control nodes

1. fork splits the execution path

2. join waits for all concurrent execution paths to complete before proceeding

3. Access Mostly Uused Products by 50000+ Subscribers

4. Only 1 and 3 are correct

5. All 1,2 and 3 are correct

Ans : 5

Exp : Check the URL http://oozie.apache.org/docs/3.1.3-incubating/WorkflowFunctionalSpec.html

Question : In Acmeshell Inc, You have a node Hadoop cluster with master nodes running HDFS with defaut replication factor ,

and a another machine outside the cluster from which developer submit jobs. So what exactly needed to do in order to run Impala on the cluster

and submit jobs from the command line of the another machine outside the cluser?

1. Install the impalad daemon on each machine in your cluste 2. Install the catalogd daemon on one machine in your cluster 3. Install the statestored daemon on each machine in your cluster

4. Install the statestored daemon on your gateway machine

5. Install the impalad daemon on one machine in your cluster

6. Install the statestored daemon on one machine in your cluster

7. Install the catalogd daemon on each machine in your cluster 8. Install the Impala shell (impala-shell) on your gateway machine

9. Install the Impala shell (impala-shell) on each machine in your cluster

10. Install the impalad daemon on your gateway machine 11. Install the catalogd daemon on your gateway machine

1. 2,6,10,11

2. 3,5,6,7

3. Access Mostly Uused Products by 50000+ Subscribers

4. 1,2,6,8

5. 2,4,6,8

Ans : 4

Exp : What is Included in an Impala Installation : Impala is made up of a set of components that can be installed on multiple nodes throughout your cluster. The key installation step for performance is to install the impalad daemon (which does most of the query processing work) on all data nodes in the cluster. The Impala package installs these binaries: impalad - The Impala daemon. Plans and executes queries against HDFS and HBase data. Run one impalad process on each node in the cluster that has a data node. statestored - Name service that tracks location and status of all impalad instances in the cluster. Run one instance of this daemon on a node in your cluster. Most production deployments run this daemon on the namenode. catalogd - Metadata coordination service that broadcasts changes from Impala DDL and DML statements to all affected Impala nodes, so that new tables, newly loaded data, and so on are immediately visible to queries submitted through any Impala node. (Prior to Impala 1.2, you had to run the REFRESH or INVALIDATE METADATA statement on each node to synchronize changed metadata. Now those statements are only required if you perform the DDL or DML through Hive.) Run one instance of this daemon on a node in your cluster, preferable on the same host as the statestored daemon.

impala-shell - Command-line interface for issuing queries to the Impala daemon. You install this on one or more hosts anywhere on your network, not necessarily data nodes or even within the same cluster as Impala. It can connect remotely to any instance of the Impala daemon.

Before doing the installation, ensure that you have all necessary prerequisites. See Cloudera Impala Requirements for details.

Impala Installation Procedure for CDH 4 Users

You can install Impala under CDH 4 in one of two ways:

Using the Cloudera Manager installer. This is the recommended technique for doing a reliable and verified Impala installation. Cloudera Manager 4.8 or higher can automatically install, configure, manage, and monitor Impala 1.2.1 and higher. The latest Cloudera Manager is always preferable, because newer Cloudera Manager releases have configuration settings for the most recent Impala features. Using a manual process for systems not managed by Cloudera Manager. You must do additional verification steps in this case, to check that Impala can interact with other Hadoop components correctly, and that your cluster is configured for efficient Impala execution.To run Impala on your cluster, you should have the impalad daemon running on each machine in the cluster. Impala requires just one instance of the statestore daemon and one instance of the catalogd daemon; each of these should run on a node (ideally the same one) in the cluster. Finally, the impala shell allows users to connect to, and submit queries to, the Impala daemons, and so should be installed on the gateway machine.

Question : You have created Timeseries_ETL MapReduce job, which is placed in a Jar called TSETL.jar and the name of Driver

class is TSETL.class. Now you are going to execute the following command while submitting the ETL job.

hadoop jar TSETL.jar TSETL

Select the correct statement for the above command

1. TSETL.jar is placed in a temporary directory in HDFS

2. An error will occur, because you have not provided input and output directories

3. Access Mostly Uused Products by 50000+ Subscribers

4. TSETL.jar is sent directly to the JobTracker

Ans : 1

Exp : While messing around with MapReduce code, I’ve found it to be a bit tedious having to generate the jarfile, copy it to the machine running the JobTracker, and then run the job every time the job has been altered. I should be able to run my jobs directly from my development environment, as illustrated in the figure below. This post explains how I’ve "solved" this problem. This may also help when integrating Hadoop with other applications. I do by no means claim that this is the proper way to do it, but it does the trick for me. I assume that you have a (single-node) Hadoop 1.0.3 cluster properly installed on a dedicated or virtual machine. In this example, the JobTracker and HDFS resides on IP address 192.168.102.131. When a job is submitted to the cluster, it is placed in a temporary directory in HDFS and the JobTracker is notified of that location. The configuration for the job is serialized to an XML file, which is also placed in a directory in HDFS. Some jobs require you to specify the input and output directories on the command line, but this is not a Hadoop requirement.

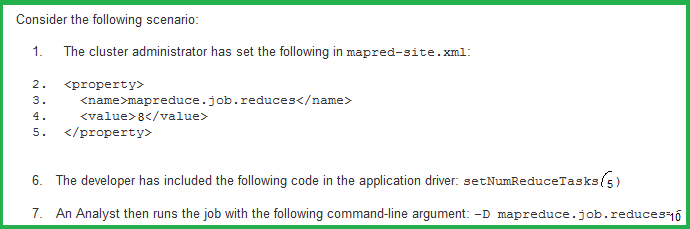

Question Which of the following is correct for the OOzie control nodes