Cloudera Hadoop Administrator Certification Questions and Answers (Dumps and Practice Questions)

Question : Which of the following commands will delete the Hive table named EVENTINFO?

1. hive -e 'DROP TABLE EVENTINFO'

2. hive 'DROP TABLE EVENTINFO'

3.

4. hive -e 'TRASH TABLE EVENTINFO'

Ans :1

Exp : Sqoop does not offer a way to delete a table from Hive, although during an import it will overwrite the existing table's data if the table already exists and --hive-overwrite is specified. The correct HiveQL statement to drop a table is "DROP TABLE tablename", and hive -e runs the quoted statement from the command line; option 2 omits -e, and TRASH TABLE (option 4) is not a HiveQL statement. In Hive, table names are case-insensitive.

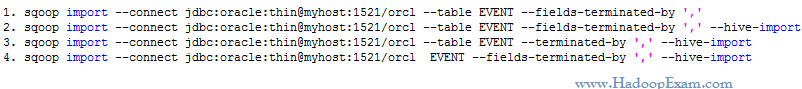

Question : There are no tables in Hive yet. Which command will import the entire contents of the EVENT table from the database into a Hive table called EVENT that uses commas (,) to separate the fields in the data files?

1.

2.

3.

4.

Ans :2

Exp : The --fields-terminated-by option controls the character used to separate the fields in the Hive table's data files.

Question : You have a MapReduce job that depends on two external JDBC jars, ojdbc6.jar and openJdbc6.jar. Which of the following commands will correctly include these external jars in the running job's classpath?

1. hadoop jar job.jar HadoopExam -cp ojdbc6.jar,openJdbc6.jar

2. hadoop jar job.jar HadoopExam -libjars ojdbc6.jar,openJdbc6.jar

3.

4. hadoop jar job.jar HadoopExam -libjars ojdbc6.jar openJdbc6.jar

Ans : 2

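Exp : The syntax for executing a job and adding extra jars to the job's classpath is: hadoop jar <job jar> <main class> -libjars <jar1>[,<jar2>,...]. The jars must be passed as a single comma-separated list, so option 4 (space-separated) does not work, and -cp (option 1) is not a Hadoop generic option. Because -libjars is a generic option handled by GenericOptionsParser, the driver class must run through ToolRunner (or otherwise use GenericOptionsParser) for it to take effect.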
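To see why the comma matters, here is a simplified Python model of how a leading -libjars option is consumed. `parse_libjars` is a hypothetical helper, not a Hadoop API; it assumes, as GenericOptionsParser does, that the option takes exactly one value and that parsing stops at the first argument that is not part of a generic option. It is a sketch for illustration, not Hadoop's actual implementation.

```python
def parse_libjars(args: list[str]) -> tuple[list[str], list[str]]:
    """Hypothetical, simplified model of how a leading -libjars option is consumed.

    -libjars takes exactly one value, which is split on commas. Parsing stops at
    the first argument that is not part of the option, and everything from there
    on is passed to the application unchanged. Returns (jars, remaining_args).
    """
    jars: list[str] = []
    rest = list(args)
    if len(rest) >= 2 and rest[0] == "-libjars":
        # Only the single value after -libjars is read; multiple jars must be comma-joined.
        jars = [jar for jar in rest[1].split(",") if jar]
        rest = rest[2:]
    return jars, rest


# Option 2: both jars are added to the job's classpath.
print(parse_libjars(["-libjars", "ojdbc6.jar,openJdbc6.jar"]))
# (['ojdbc6.jar', 'openJdbc6.jar'], [])

# Option 4: only ojdbc6.jar is treated as a library jar;
# openJdbc6.jar is left over as an ordinary application argument.
print(parse_libjars(["-libjars", "ojdbc6.jar", "openJdbc6.jar"]))
# (['ojdbc6.jar'], ['openJdbc6.jar'])
```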

Question : You have an EVENT table with the following schema in a MySQL database.

PAGEID NUMBER

USER VARCHAR2

EVENTTIME DATE

PLACE VARCHAR2

Now that the database EVENT table has been imported and is stored in the dbimport directory in HDFS, you would like to make the data available as a Hive table.

Which of the following statements is true? Assume that the data was imported in CSV format.

1. A Hive table can be created with the Hive CREATE command.

2. An external Hive table can be created with the Hive CREATE command that uses the data in the dbimport directory unchanged and in place.

3.

4. All of the above are correct.

Ans : 2

Exp : An external Hive table can be created that points to data in any HDFS directory, such as dbimport, and reads the files there in place. The table can be configured to use custom field delimiters or even to extract fields via regular expressions.

Question : You use Sqoop to import the EVENT table from the database, then write a Hadoop streaming job in Python to scrub the data, and use Hive to write the new data into the Hive EVENT table.

How would you automate this data pipeline?

1. Run the Sqoop job first, and then run the remaining parts using MapReduce job chaining.

2. Define the Sqoop job, the MapReduce job, and the Hive job as an Oozie workflow job, and define an Oozie coordinator job to run the workflow job daily.

3.

4. Define the Sqoop job, the MapReduce job, and the Hive job as an Oozie workflow job, and define a ZooKeeper coordinator job to run the workflow job daily.

Ans :2

Exp : In Oozie, scheduling is the function of an Oozie coordinator job; a workflow job cannot schedule itself. Oozie coordinator jobs cannot aggregate tasks or define workflows; coordinator jobs are simple schedules of previously defined workflows. You must therefore assemble the various tasks into a single workflow job and then use a coordinator job to execute the workflow job. ZooKeeper is a coordination service, not a job scheduler, so option 4 is wrong.

Question : Which of the following is the default character Sqoop uses as the field delimiter in Hive table data files?

1. 0x01

2. 0x001

3.

4. 0x011

Ans :1

Exp : By default, Sqoop uses Hive's default delimiters when importing into a Hive table: fields are terminated by 0x01 (^A) and lines by the newline character (\n).
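A minimal Python sketch of what the field delimiter means in practice: each row of a Hive text data file is split into column values on one delimiter character. The sample EVENT rows are hypothetical; the first uses Hive's default ^A (0x01) separator and the second a comma, as produced by --fields-terminated-by ','.

```python
HIVE_DEFAULT_FIELD_DELIM = "\x01"  # Ctrl-A (^A), Hive's default field separator


def split_fields(line: str, delimiter: str = HIVE_DEFAULT_FIELD_DELIM) -> list[str]:
    """Split one row of a Hive text data file into its column values."""
    return line.rstrip("\n").split(delimiter)


# Hypothetical EVENT row imported with Hive's default delimiters.
print(split_fields("101\x01alice\x012015-01-31 10:15:00\x01Mumbai\n"))
# The same row imported with --fields-terminated-by ','.
print(split_fields("101,alice,2015-01-31 10:15:00,Mumbai\n", ","))
# A comma inside a value yields an extra field when ',' is the delimiter.
print(split_fields("101,alice,2015-01-31 10:15:00,Mumbai, India\n", ","))
```

The third call shows one practical reason for preferring a control character: a comma inside a value ('Mumbai, India') produces an extra field when commas are the delimiter.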

Question : In a Sqoop job, assume $PREVIOUSREFRESH contains a date:time string for the last time the import was run, e.g., '-- ::'.

Which of the following import control arguments prevents a repeating Sqoop job from downloading the entire EVENT table every day?

1. --incremental lastmodified --refresh-column lastmodified --last-value "$PREVIOUSREFRESH"

2. --incremental lastmodified --check-column lastmodified --last-time "$PREVIOUSREFRESH"

3.

4. --incremental lastmodified --check-column lastmodified --last-value "$PREVIOUSREFRESH"

Question : You have a log file loaded in HDFS. Which of the following operations will allow you to create a Hive table using this log file?

1. Create an external table in the Hive shell to extract the column data from the logs

2. Create an external table in the Hive shell using org.apache.hadoop.hive.serde2.RegexSerDe to extract the column data from the logs

3.

4. Create an external table in the Hive shell using org.apache.hadoop.hive.serde2.CSVSerDe to extract the column data from the logs

Ans : 2

Exp : RegexSerDe uses a regular expression (regex) to deserialize data. It doesn't support data serialization. It deserializes each row using the regex and extracts the capture groups as columns.

In the deserialization stage, if a row does not match the regex, all columns in the row will be NULL.

If a row matches the regex but has fewer groups than expected, the missing groups will be NULL.

If a row matches the regex but has more groups than expected, the additional groups are just ignored.

NOTE: All columns have to be strings. Users can use "CAST(a AS INT)" to convert columns to other types.

NOTE: This implementation uses String and javaStringObjectInspector. A more efficient implementation would use UTF-8 encoded Text and writableStringObjectInspector, which can be adopted once a UTF-8 based regex library is available.

When building a Hive table from log data, the column widths are not fixed, so the only way to extract the data is with a regular expression. The org.apache.hadoop.hive.serde2.RegexSerDe class reads data from a flat file and extracts column information via a regular expression. The SerDe is specified as part of the table definition when the table is created. Once the table is created, the LOAD DATA command will add the log files to the table.
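The row-to-column rules listed above can be modelled in a few lines of Python. This is a simplified sketch, not Hive's code: `regex_deserialize` is a hypothetical name, Python's `re` stands in for Java regular expressions (they agree for simple patterns like the one below), and the sketch requires the whole line to match. The access-log line and pattern are illustrative assumptions.

```python
import re
from typing import Optional


def regex_deserialize(line: str, pattern: str, num_columns: int) -> list[Optional[str]]:
    """Turn one text row into num_columns string values (None stands for NULL)."""
    m = re.fullmatch(pattern, line)  # the whole row must match
    if m is None:
        return [None] * num_columns  # no match: every column is NULL
    groups = m.groups()
    # Group i+1 fills column i; missing groups become NULL, extra groups are ignored.
    return [groups[i] if i < len(groups) else None for i in range(num_columns)]


# Hypothetical access-log pattern: host, timestamp, request, status, bytes.
LOG_PATTERN = r'(\S+) \S+ \S+ \[([^\]]+)\] "([^"]*)" (\d{3}) (\S+)'
line = '127.0.0.1 - - [31/Jan/2015:10:15:00 +0000] "GET /index.html HTTP/1.1" 200 512'
print(regex_deserialize(line, LOG_PATTERN, 5))
print(regex_deserialize("garbage line", LOG_PATTERN, 5))  # all None
# Values are strings, so numeric use needs a cast (CAST(status AS INT) in HiveQL).
status = regex_deserialize(line, LOG_PATTERN, 5)[3]
print(int(status))
```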

Question : You write a Sqoop job to pull the data from the USERS table, but your job pulls the entire USERS table every day. Assume $LASTIMPORT contains a date:time string for the last time the import was run, e.g., '2013-09-20 15:27:52'. Which import control arguments prevent a repeating Sqoop job from downloading the entire USERS table every day?

1. --incremental lastmodified --last-value "$LASTIMPORT"

2. --incremental lastmodified --check-column lastmodified --last-value "$LASTIMPORT"

3.

4. --incremental "$LASTIMPORT" --check-column lastmodified --last-value "$LASTIMPORT"

Ans : 2

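Exp : The --where import control argument only adds a WHERE clause to the import; a full SELECT statement requires the free-form --query argument, which must include $CONDITIONS in its WHERE clause. There is no --since option. The --incremental option does what we want: --incremental lastmodified with --check-column lastmodified and --last-value "$LASTIMPORT" imports only the rows modified since the last run. Option 1 fails because incremental imports also require --check-column, and option 4 passes a timestamp where --incremental expects a mode (append or lastmodified).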

Refer : http://sqoop.apache.org/docs/1.4.3/SqoopUserGuide.html
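The filtering that --incremental lastmodified performs can be pictured with the simplified Python model below. It follows the user guide's description (import rows whose check column is more recent than --last-value); `rows_to_import` and the sample USERS rows are hypothetical, and the exact comparison Sqoop generates against the database may differ in detail. In the question's setup, $LASTIMPORT would then be set to this run's start time for the next import.

```python
from datetime import datetime

TS_FORMAT = "%Y-%m-%d %H:%M:%S"  # same shape as '2013-09-20 15:27:52'


def rows_to_import(rows: list[dict], check_column: str, last_value: str) -> list[dict]:
    """Simplified lastmodified model: keep rows whose check column is newer than last_value."""
    cutoff = datetime.strptime(last_value, TS_FORMAT)
    return [row for row in rows if row[check_column] > cutoff]


# Hypothetical USERS rows; 'lastmodified' plays the role of --check-column.
users = [
    {"id": 1, "lastmodified": datetime(2013, 9, 19, 8, 0, 0)},
    {"id": 2, "lastmodified": datetime(2013, 9, 21, 9, 30, 0)},
    {"id": 3, "lastmodified": datetime(2013, 9, 20, 15, 27, 53)},
]

LASTIMPORT = "2013-09-20 15:27:52"  # value passed as --last-value "$LASTIMPORT"
print([row["id"] for row in rows_to_import(users, "lastmodified", LASTIMPORT)])  # [2, 3]
```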

Question : Now that you have the USERS table imported into Hive, you need to make the log data available to Hive so that you can perform a join operation. Assuming you have uploaded the log data into HDFS, which approach creates a Hive table that contains the log data?

1. Create an external table in the Hive shell using org.apache.hadoop.hive.serde2.SerDeStatsStruct to extract the column data from the logs

2. Create an external table in the Hive shell using org.apache.hadoop.hive.serde2.RegexSerDe to extract the column data from the logs

3.

4. Create an external table in the Hive shell using org.apache.hadoop.hive.serde2.NullStructSerDe to extract the column data from the logs

Ans : 2

Exp : When building a Hive table from log data, the column widths are not fixed, so the only way to extract the data is with a regular expression. The org.apache.hadoop.hive.serde2.RegexSerDe class reads data from a flat file and extracts column information via a regular expression. The SerDe is specified as part of the table definition when the table is created. Once the table is created, the LOAD DATA command will add the log files to the table. For more information about SerDes in Hive, see "How-to: Use a SerDe in Apache Hive" and the "Tables: Storage Formats" section of chapter 12 in Hadoop: The Definitive Guide, 3rd Edition.

Refer : https://hive.apache.org/javadocs/r0.10.0/api/org/apache/hadoop/hive/serde2/package-summary.html

Question : The cluster block size is set to 128MB. The input file contains 170MB of valid input data and is loaded into HDFS with the default block size. How many map tasks will be run during the execution of this job?

1. 1

2. 2

3.

4. 4

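Explanation: The 170MB file is stored as two HDFS blocks (128MB + 42MB), so the job gets two input splits and runs one map task per split. If the job does not set a mapper class, it uses the implicit IdentityMapper, which just passes along its input key/value pairs; the number of mappers run is therefore exactly what you'd expect for any other job: 2.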
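The split count can be checked with a small Python model of the loop in Hadoop's FileInputFormat.getSplits. It assumes a non-empty, splittable file (for example uncompressed text) and the default minimum and maximum split sizes, so the split size equals the block size; SPLIT_SLOP = 1.1 lets the final split be up to 10% larger than a block instead of creating a tiny extra split. The function names are hypothetical.

```python
SPLIT_SLOP = 1.1  # the last split may be up to 10% larger than the split size
MB = 1024 * 1024


def compute_split_size(block_size: int, min_size: int = 1, max_size: int = 2**63 - 1) -> int:
    """Split size = max(minSize, min(maxSize, blockSize)); equals block size with defaults."""
    return max(min_size, min(max_size, block_size))


def count_splits(file_size: int, block_size: int) -> int:
    """Number of input splits (hence map tasks) for one non-empty, splittable file."""
    split_size = compute_split_size(block_size)
    remaining = file_size
    splits = 0
    # Cut full-size splits while more than 1.1 split sizes remain.
    while remaining / split_size > SPLIT_SLOP:
        splits += 1
        remaining -= split_size
    # Whatever is left (up to 1.1 split sizes) becomes the final split.
    if remaining > 0:
        splits += 1
    return splits


print(count_splits(170 * MB, 128 * MB))  # 2: one 128MB split plus one 42MB split
print(count_splits(140 * MB, 128 * MB))  # 1: 140/128 is within the 10% slop
```

The second call shows the effect of the slop: a 140MB file occupies two HDFS blocks but, under these assumptions, still yields a single split.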

Question : For each YARN job, the Hadoop framework generates task log files. Where are Hadoop task log files stored?

1. Cached by the NodeManager managing the job containers, then written to a log directory on the NameNode

2. Cached in the YARN container running the task, then copied into HDFS on job completion

3.

4. On the local disk of the slave node running the task

Explanation: The general log-related configuration properties are yarn.nodemanager.log-dirs and yarn.log-aggregation-enable. The function of each is described next.

The yarn.nodemanager.log-dirs property determines where container logs are stored on the node while the containers are running. Its default value is ${yarn.log.dir}/userlogs. An application's localized log directory is found in ${yarn.nodemanager.log-dirs}/application_${appid}. Individual containers' log directories are below this level, in subdirectories named container_${containerId}.

For MapReduce applications, each container directory contains the files stderr, stdout, and syslog generated by that container. Other frameworks can choose to write more or fewer files; YARN doesn't dictate the file names or the number of files.

The yarn.log-aggregation-enable property specifies whether to enable or disable log aggregation. If this function is disabled, NodeManagers keep the logs locally (as in Hadoop version 1) and do not aggregate them.

The following properties are in force when log aggregation is enabled:

yarn.nodemanager.remote-app-log-dir: This location is on the default file system (usually HDFS) and indicates where the NodeManagers should aggregate logs. It should not be the local file system, because otherwise serving daemons such as the history server will not be able to serve the aggregated logs. The default value is /tmp/logs.

yarn.nodemanager.remote-app-log-dir-suffix: The remote log directory is created at ${yarn.nodemanager.remote-app-log-dir}/${user}/${suffix}. The default suffix value is "logs".

yarn.log-aggregation.retain-seconds: This property defines how long to wait before deleting aggregated logs; -1 or another negative number disables the deletion of aggregated logs. Be careful not to set this property to too small a value, so as not to burden the distributed file system.

yarn.log-aggregation.retain-check-interval-seconds: This property determines how long to wait between aggregated log retention checks. If its value is set to 0 or a negative value, the value is computed as one-tenth of the aggregated log retention time. As with the previous configuration property, be careful not to set it to an inordinately low value. The default is -1.

yarn.log.server.url: Once an application is done, NodeManagers redirect web UI users to this URL, where aggregated logs are served. Today it points to the MapReduce-specific JobHistory server.

The following properties are used when log aggregation is disabled:

yarn.nodemanager.log.retain-seconds: The time in seconds to retain user logs on the individual nodes if log aggregation is disabled. The default is 10800.

yarn.nodemanager.log.deletion-threads-count: The number of threads used by the NodeManagers to clean up local log files once the log retention time is hit when aggregation is disabled.
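A short Python sketch of how the properties above combine into directory paths and the retention-check interval. The helper names, the local log directory, the user name and the application/container IDs are hypothetical examples; the sketch only encodes the rules stated above and is not YARN code.

```python
import posixpath
from typing import Optional


def local_container_log_dir(log_dir: str, app_id: str, container_id: str) -> str:
    """Node-local directory holding a running container's stdout, stderr and syslog."""
    # ${yarn.nodemanager.log-dirs}/<application id>/<container id>
    return posixpath.join(log_dir, app_id, container_id)


def remote_user_log_dir(remote_app_log_dir: str, user: str, suffix: str = "logs") -> str:
    """Aggregation directory on the default file system for one user."""
    # ${yarn.nodemanager.remote-app-log-dir}/${user}/${suffix}
    return posixpath.join(remote_app_log_dir, user, suffix)


def effective_check_interval(check_interval_s: int, retain_s: int) -> Optional[int]:
    """Seconds between aggregated-log retention checks, or None if deletion is disabled."""
    if retain_s < 0:
        return None  # a negative retain-seconds value disables deletion
    if check_interval_s <= 0:
        return retain_s // 10  # fall back to one-tenth of the retention time
    return check_interval_s


print(local_container_log_dir("/var/log/hadoop-yarn/userlogs",
                              "application_1422700000000_0001",
                              "container_1422700000000_0001_01_000002"))
print(remote_user_log_dir("/tmp/logs", "training"))  # /tmp/logs/training/logs
print(effective_check_interval(-1, 7 * 24 * 3600))   # 60480 (one-tenth of a week)
```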

Related Questions

Question : You run the website www.QuickTechie.com and have one month of user profile update logs. For a classification analysis, you want to save all the data in a single file called QT31012015.log, which is approximately 30GB in size. Using a MapReduce ETL job, you push this file into HDFS as /log/QT/QT31012015.log. The permissions on the file in HDFS are as follows:

QT31012015.log -> rw-rw-r-x

Which statement is correct for the file QT31012015.log?

1. No one can modify the contents of the file.

2. The owner and group can modify the contents of the file. Others cannot.

3.

4. HDFS runs in userspace which makes all users with access to the namespace able to read, write, and modify all files.

5. The owner and group cannot delete the file, but others can.

Question : You run the website www.QuickTechie.com and have one month of user profile update logs. For a classification analysis, you want to save all the data in a single file called QT31012015.log, which is approximately 30GB in size. Using a MapReduce ETL job, you push this file into HDFS as /log/QT/QT31012015.log. The permissions on the file and its directory in HDFS are as follows:

QT31012015.log -> rw-r--r--

/log/QT -> rwxr-xr-x

Which statement is correct for the file QT31012015.log?

1. The file cannot be deleted by anyone but the owner

2. The file cannot be deleted by anyone

3.

4. The file's existing contents can be modified by the owner, but no-one else
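The permission strings in these two questions can be decoded with the Python sketch below. It is a simplified model under stated assumptions: the helper names are hypothetical, the user is assumed to fall in the same class (owner, group or other) for the file and its directory, and superuser access, the sticky bit and ACLs are ignored. The key point it encodes is that writing to a file needs w on the file, while deleting it needs w (plus x to traverse) on the parent directory.

```python
CLASSES = ("owner", "group", "other")


def parse_mode(mode: str) -> dict[str, set[str]]:
    """Parse a 9-character symbolic mode such as 'rw-r--r--' into per-class flags."""
    if len(mode) != 9:
        raise ValueError(f"expected 9 characters, got {mode!r}")
    perms: dict[str, set[str]] = {}
    for i, who in enumerate(CLASSES):
        triplet = mode[3 * i:3 * i + 3]
        perms[who] = {flag for flag, ch in zip("rwx", triplet) if ch == flag}
    return perms


def can_write_file(file_mode: str, who: str) -> bool:
    """Writing (appending) to a file requires w on the file itself."""
    return "w" in parse_mode(file_mode)[who]


def can_delete_entry(parent_dir_mode: str, who: str) -> bool:
    """Deleting a file requires w (and x to traverse) on its parent directory."""
    return {"w", "x"} <= parse_mode(parent_dir_mode)[who]


# First question: file mode rw-rw-r-x.
print({who: can_write_file("rw-rw-r-x", who) for who in CLASSES})
# {'owner': True, 'group': True, 'other': False}

# Second question: directory /log/QT has mode rwxr-xr-x.
print({who: can_delete_entry("rwxr-xr-x", who) for who in CLASSES})
# {'owner': True, 'group': False, 'other': False}
```

Also keep in mind that HDFS files cannot be rewritten in place; write permission on a file governs appending to it.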

Question : You run the website www.QuickTechie.com and have one month of user profile update logs. For a classification analysis, you want to save all the data in a single file called QT31012015.log, which is approximately 30GB in size. Using a MapReduce ETL job, you push this file into HDFS as /log/QT/QT31012015.log. You want to make sure that the data in /log/QT/QT31012015.log is not compromised. How does HDFS help with this?

1. Storing multiple replicas of data blocks on different DataNodes.

2. Reliance on SAN devices as a DataNode interface.

3.

4. DataNodes make copies of their data blocks, and put them on different local disks.

Question : What is HBase?

1. HBase is a separate set of Java APIs for a Hadoop cluster

2. HBase is part of the Apache Hadoop project and provides an interface for scanning large amounts of data using Hadoop infrastructure

3.

4. HBase is part of the Apache Hadoop project and provides a SQL-like interface for data processing.

Question : What is the role of the namenode?

1. Namenode splits big files into smaller blocks and sends them to different datanodes

2. Namenode is responsible for assigning names to each slave node so that they can be identified by the clients

3.

4. Both 2 and 3 are valid answers

Question : What happens if a datanode loses its network connection for a few minutes?

1. The namenode will detect that a datanode is not responsive and will start replication of the data from the remaining replicas. When the datanode comes back online, the administrator will need to manually delete the extra replicas

2. All data will be lost on that node. The administrator has to ensure proper data distribution between nodes

3.

4. The namenode will detect that a datanode is not responsive and will start replication of the data from the remaining replicas. When the datanode comes back online, the extra replicas will be deleted

Ans : 4

Exp : The replication factor is actively maintained by the namenode. The namenode monitors the status of all datanodes and keeps track of which blocks are located on each node. Once a datanode has stopped sending heartbeats long enough to be declared dead, the namenode triggers replication of its data from the existing replicas (with default settings this takes about 10.5 minutes, so a very short outage may end before any re-replication starts). If the datanode comes back up, the over-replicated blocks will be deleted. Note: the data might be deleted from the original datanode.
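The delay before re-replication starts can be worked out from two HDFS settings. The Python sketch below computes the timeout after which the NameNode marks a DataNode dead, as 2 × dfs.namenode.heartbeat.recheck-interval + 10 × dfs.heartbeat.interval, using the Hadoop 2 property names and their defaults (300000 ms and 3 s); the function name is hypothetical.

```python
DEFAULT_RECHECK_INTERVAL_MS = 300_000  # dfs.namenode.heartbeat.recheck-interval
DEFAULT_HEARTBEAT_INTERVAL_S = 3       # dfs.heartbeat.interval


def dead_node_timeout_ms(recheck_interval_ms: int = DEFAULT_RECHECK_INTERVAL_MS,
                         heartbeat_interval_s: int = DEFAULT_HEARTBEAT_INTERVAL_S) -> int:
    """Milliseconds without heartbeats before the NameNode considers a DataNode dead."""
    return 2 * recheck_interval_ms + 10 * 1000 * heartbeat_interval_s


timeout = dead_node_timeout_ms()
print(timeout, "ms =", timeout / 60_000, "minutes")  # 630000 ms = 10.5 minutes
```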

Question : What happens if one of the datanodes has a much slower CPU? How will it affect the performance of the cluster?

1. The task execution will be as fast as the slowest worker. However, if speculative execution is enabled, the slowest worker will not have such a big impact

2. The slowest worker will significantly impact job execution time. It will slow everything down

3.

4. It depends on the level of priority assigned to the task. All high priority tasks are executed in parallel twice. A slower datanode would therefore be bypassed. If task is not high priority, however, performance will be affected.

Ans : 1

Exp : Hadoop was specifically designed to work with commodity hardware, and speculative execution helps to offset slow workers. When a task runs noticeably slower than its peers, the framework launches a duplicate (speculative) instance of it on another node; the JobTracker (the MapReduce ApplicationMaster under YARN) uses the result of whichever instance finishes first and kills the other.

Question : If you have a file of 128M in size and the replication factor is set to 3, how many blocks can you find on the cluster that correspond to that file (assuming the default Apache and Cloudera configuration)?

1. 3

2. 6

3.

4. 12

Ans : 2

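Exp : Based on the configuration settings, the file will be divided into blocks according to the default block size of 64M (the Hadoop 1 default): 128M / 64M = 2. Each block will be replicated according to the replication factor setting (default 3): 2 * 3 = 6. Note that Hadoop 2 raised the default block size to 128M, in which case the same file occupies 1 block, giving 1 * 3 = 3 block replicas.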
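The block arithmetic in this answer as a small Python function (a hypothetical helper, sizes in MB). It assumes each file is split into ceil(size / block size) blocks, the last one possibly partial, and that every block is stored replication-factor times.

```python
import math


def block_replicas(file_size_mb: int, block_size_mb: int, replication: int) -> int:
    """Total block replicas stored in HDFS for one file."""
    blocks = math.ceil(file_size_mb / block_size_mb)  # the last block may be partial
    return blocks * replication


print(block_replicas(128, 64, 3))   # 6: 2 blocks x 3 replicas (64MB blocks, Hadoop 1 default)
print(block_replicas(128, 128, 3))  # 3: 1 block x 3 replicas (128MB blocks, Hadoop 2 default)
```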

Question : What is replication factor?

1. Replication factor controls how many times the namenode replicates its metadata

2. Replication factor creates multiple copies of the same file to be served to clients

3.

4. None of these answers are correct.

Ans : 3

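Exp : HDFS replicates each block of a file according to the replication factor, which sets how many copies of each block are stored on different datanodes. A higher replication factor improves data availability in the event of failures, at the cost of more storage.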

Question : How does the Hadoop cluster tolerate datanode failures?

1. Failures are anticipated. When they occur, the jobs are re-executed.

2. Datanodes talk to each other and figure out what needs to be re-replicated if one of the nodes goes down

3.

4. Since Hadoop is designed to run on commodity hardware, datanode failures are expected. The namenode keeps track of all available datanodes and actively maintains the replication factor on all data.

Ans : 4

Exp : The namenode actively tracks the status of all datanodes through their heartbeats and acts when a datanode becomes non-responsive. The namenode is the central "brain" of HDFS: once it declares a datanode dead, it starts re-replicating that node's blocks from the remaining replicas so that every block returns to its replication factor.

Question : Which of the following tools defines a SQL-like language?

1. Pig

2. Hive

3.

4. Flume

Ans : 2

Question : The Hadoop framework provides a mechanism for coping with machine issues such as faulty configuration or impending hardware failure. MapReduce detects that one or more machines are performing poorly and starts more copies of a map or reduce task. All the copies run simultaneously, and the copy that finishes first is used. Which term describes this behaviour?

1. Partitioning

2. Combining

3.

4. Speculative Execution

Ans : 4

Question : You use the hadoop fs -put command to write a 500 MB file with a 64 MB block size. While the file is half written, can another user read the blocks that have already been written?

1. It will throw an exception

2. The file blocks that are already written would be accessible

3.

4. Until the whole file is copied nothing can be accessible.

Ans :4

Exp : While writing a 528MB file with the following command

hadoop fs -put tragedies_big4 /user/training/shakespeare/

we tried to read the file with the following command; the output is below.

[hadoopexam@localhost ~]$ hadoop fs -cat /user/training/shakespeare/tragedies_big4
cat: "/user/training/shakespeare/tragedies_big4": No such file or directory
[hadoopexam@localhost ~]$ hadoop fs -cat /user/training/shakespeare/tragedies_big4
cat: "/user/training/shakespeare/tragedies_big4": No such file or directory
[training@localhost ~]$ hadoop fs -cat /user/training/shakespeare/tragedies_big4
cat: "/user/training/shakespeare/tragedies_big4": No such file or directory
[training@localhost ~]$

Only once the put command finishes are we able to "cat" this file. In Hadoop 2 and later, -put writes the data to a temporary file named <file>._COPYING_ and renames it to the final name when the copy completes, which is why the target path does not exist until then.

Question : What is a Bloom filter?

1. It is a data structure

2. A Bloom filter is a compact representation of a set that supports only membership (contains) queries.

3.

4. All of the above
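To make option 2 concrete, here is a minimal Python Bloom filter: a bit array plus k hash positions per item. It is an illustrative sketch, not Hadoop's implementation; deriving the k positions by salting SHA-256 with the hash index is an assumption made for simplicity. A negative answer is always correct, a positive answer may be a false positive, and items cannot be removed.

```python
import hashlib


class BloomFilter:
    """Bit array plus k hash functions; answers "definitely not" or "probably yes"."""

    def __init__(self, num_bits: int, num_hashes: int) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, item: str):
        # Derive k bit positions by salting SHA-256 with the hash index (a simplification).
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item: str) -> bool:
        # False: item was never added. True: item was probably added (false positives possible).
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item))


bf = BloomFilter(num_bits=1024, num_hashes=3)
for tool in ("hive", "pig", "sqoop", "oozie"):
    bf.add(tool)
print(bf.might_contain("hive"))   # True: added items are always found
print(bf.might_contain("hbase"))  # usually False; a True here would be a false positive
```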