Cloudera Hadoop Administrator Certification Certification Questions and Answer (Dumps and Practice Questions)

Question : As a client of HadoopExam, you are able to access the Hadoop cluster of HadoopExam Inc, Once a you are validated

with the identity and granted access to a file in HDFS, what is the remainder of the read path back to the client?

1. The NameNode gives the client the block IDs and a list of DataNodes on which those blocks are found, and the application reads the blocks directly from the DataNodes.

2. The NameNode maps the read request against the block locations in its stored metadata, and reads those blocks from the DataNodes. The client application then reads the blocks from the NameNode.

3. Access Mostly Uused Products by 50000+ Subscribers

4. DataNode closest to the client according to Hadoop's rack topology. The client application then reads the blocks from that single DataNode.

Correct Answer : Get Lastest Questions and Answer :

Explanation: When a client wishes to read a file from HDFS, it contacts the NameNode and requests the locations and names of the first few blocks in the file. It then directly contacts the DataNodes containing those blocks to read the data. It would be very wasteful to move blocks around the cluster based on a client's read request, so that is never done. Similarly, if all data was passed via the NameNode, the NameNode would immediately become a serious bottleneck and would slow down the cluster operation dramatically.

First, lets walk through the logic of performing an HDFS read operation. For this, well assume theres a file /user/esammer/foo.txt already in HDFS. In addition to using Hadoops client library-usually a Java JAR file-each client must also have a copy of the cluster configuration data that specifies the location of the namenode The client begins by contacting the namenode, indicating which file it would like to read. The client identity is first validated-either by trusting the client and allowing it to specify a username or by using a strong authentication mechanism such as Kerberos and then checked against the owner and permissions of the file. If the file exists and the user has access to it, the namenode responds to the client with the first block ID and the list of datanodes on which a copy of the block can be found, sorted by their distance to the client. Distance to the client is measured according to Hadoops rack topology-configuration data that indicates

which hosts are located in which racks. If the namenode is unavailable for some reason-because of a problem with either the namenode itself or the network, for example-clients will receive timeouts or exceptions (as appropriate) and will be unable to proceed. With the block IDs and datanode hostnames, the client can now contact the most appropriate datanode directly and read the block data it needs. This process repeats until all blocks in the file have been read or the client closes the file stream.

It is also possible that while reading from a datanode, the process or host on which it runs, dies. Rather than give up, the library will automatically attempt to read another replica of the data from another datanode. If all replicas are unavailable, the read operation fails and the client receives an exception. Another corner case that can occur is that the information returned by the namenode about block locations can be outdated by the time the client attempts to contact a datanode, in which case either a retry will occur if there are other replicas or the read will fail. While rare, these kinds of corner cases make troubleshooting a large distributed system such as Hadoop so complex.

Question : In your QuickTechie Inc Hadoop cluster you have slave datanodes and two master nodes. You set the blcok size MB, with the

replication factor set to 3. How will the Hadoop framework distribute block writes from a MapReduce Reducer into HDFS when that MapReduce Reducer

outputs a 295MB file?

1. Reducers don't write blocks into HDFS.

2. The nine blocks will be written to three nodes, such that each of the three gets one copy of each block.

3. Access Mostly Uused Products by 50000+ Subscribers

4. The nine blocks will be written randomly to the nodes; some may receive multiple blocks, some may receive none.

5. The node on which the Reducer is running will receive one copy of each block. The other replicas will be placed on other nodes in the cluster.

Correct Answer : Get Lastest Questions and Answer :

Explanation: HDFS is designed to reliably store very large files across machines in a large cluster. It stores each file as a sequence of blocks; all blocks in a file except the last block are the same size. The blocks of a file are replicated for fault tolerance. The block size and replication factor are configurable per file. An application can specify the number of replicas of a file. The replication factor can be specified at file creation time and can be changed later. Files in HDFS are write-once and have strictly one writer at any time. The NameNode makes all decisions regarding replication of blocks. It periodically receives a Heartbeat and a Blockreport from each of the DataNodes in the cluster. Receipt of a Heartbeat implies that the DataNode is functioning properly. A Blockreport contains a list of all blocks on a DataNode.with a block size of 128MB and a replication factor of 3, when the Reducer writes the file it will be split into three blocks (a 128MB block, another 128MB block, and a 39MB block). Each block will be replicated three times. For efficiency, if a client (in this case the Reduce task) is running in a YARN container on a cluster node, the first replica of each block it creates will be sent to the DataNode daemon running on that same node. The other two replicas will be written to DataNodes on other machines in the cluster. eplica Placement: The First Baby Steps : The placement of replicas is critical to HDFS reliability and performance. Optimizing replica placement distinguishes HDFS from most other distributed file systems. This is a feature that needs lots of tuning and experience. The purpose of a rack-aware replica placement policy is to improve data reliability, availability, and network bandwidth utilization. The current implementation for the replica placement policy is a first effort in this direction. The short-term goals of implementing this policy are to validate it on production systems, learn more about its behavior, and build a foundation to test and research more sophisticated policies. Large HDFS instances run on a cluster of computers that commonly spread across many racks. Communication between two nodes in different racks has to go through switches. In most cases, network bandwidth between machines in the same rack is greater than network bandwidth between machines in different racks. The NameNode determines the rack id each DataNode belongs to via the process outlined in Hadoop Rack Awareness. A simple but non-optimal policy is to place replicas on unique racks. This prevents losing data when an entire rack fails and allows use of bandwidth from multiple racks when reading data. This policy evenly distributes replicas in the cluster which makes it easy to balance load on component failure. However, this policy increases the cost of writes because a write needs to transfer blocks to multiple racks. For the common case, when the replication factor is three, HDFS's placement policy is to put one replica on one node in the local rack, another on a different node in the local rack, and the last on a different node in a different rack. This policy cuts the inter-rack write traffic which generally improves write performance. The chance of rack failure is far less than that of node failure; this policy does not impact data reliability and availability guarantees. However, it does reduce the aggregate network bandwidth used when reading data since a block is placed in only two unique racks rather than three. With this policy, the replicas of a file do not evenly distribute across the racks. One third of replicas are on one node, two thirds of replicas are on one rack, and the other third are evenly distributed across the remaining racks. This policy improves write performance without compromising data reliability or read performance.The current, default replica placement policy described here is a work in progress. Replica Selection : To minimize global bandwidth consumption and read latency, HDFS tries to satisfy a read request from a replica that is closest to the reader. If there exists a replica on the same rack as the reader node, then that replica is preferred to satisfy the read request. If angg/ HDFS cluster spans multiple data centers, then a replica that is resident in the local data center is preferred over any remote replica. Safemode :

On startup, the NameNode enters a special state called Safemode. Replication of data blocks does not occur when the NameNode is in the Safemode state. The NameNode receives Heartbeat and Blockreport messages from the DataNodes. A Blockreport contains the list of data blocks that a DataNode is hosting. Each block has a specified minimum number of replicas. A block is considered safely replicated when the minimum number of replicas of that data block has checked in with the NameNode. After a configurable percentage of safely replicated data blocks checks in with the NameNode (plus an additional 30 seconds), the NameNode exits the Safemode state. It then determines the list of data blocks (if any) that still have fewer than the specified number of replicas. The NameNode then replicates these blocks to other DataNodes.

Question : You have a website www.QuickTechie.com, where you have one month user profile updates log. Now for the classification analysis

you want to save all the data in a single file called QT31012015.log which is approximately in 30GB in size. Now using the MapReduce

ETL job you are able to push this full file in a directory on HDFS called /log/QT/QT31012015.log. After completing file write which

of the following metadata change will occur.

1. The metadata in RAM on the NameNode is updated

2. The change is written to the Secondary NameNode

3. The change is written to the fsimage file

4. The change is written to the edits file

5. The NameNode triggers a block report to update block locations in the edits file

6. The metadata in RAM on the NameNode is flushed to disk

1. 1,3,4

2. 1,4,6

3. Access Mostly Uused Products by 50000+ Subscribers

4. 5,6

4. 4,6

Ans : 3

Exp : The namenode stores its filesystem metadata on local filesystem disks in a few different files, the two most important of which are fsimage and edits. Just like a database would, fsimage contains a complete snapshot of the filesystem metadata whereas edits contains only incremental modifications made to the metadata. A common practice for high throughput data stores, use of a write ahead log (WAL) such as the edits file reduces I/O operations to sequential, append-only operations (in the context of the namenode, since it serves directly from RAM), which avoids costly seek operations and yields better overall performance. Upon namenode startup, the fsimage file is loaded into RAM and any changes in the edits file are replayed, bringing the in-memory view of the filesystem up to date.

The NameNode metadata contains information about every file stored in HDFS. The NameNode holds the metadata in RAM for fast access, so any change is reflected in that RAM version. However, this is not sufficient for reliability, since if the NameNode crashes information on the change would be lost. For that reason, the change is also written to a log file known as the edits file.

In more recent versions of Hadoop (specifically, Apache Hadoop 2.0 and CDH4;), the underlying metadata storage was updated to be more resilient to corruption and to support namenode high availability. Conceptually, metadata storage is similar, although transactions are no longer stored in a single edits file. Instead, the namenode periodically rolls the edits file (closes one file and opens a new file), numbering them by transaction ID. It's also possible for the namenode to now retain old copies of both fsimage and edits to better support the ability to roll back in time. Most of these changes won't impact you, although it helps to understand the purpose of the files on disk. That being said, you should never make direct changes to these files unless you really know what you are doing. The rest of this book will simply refer to these files using their base names, fsimage and edits, to refer generally to their function. Recall from earlier that the namenode writes changes only to its write ahead log, edits. Over time, the edits file grows and grows and as with any log-based system such as this, would take a long time to replay in the event of server failure. Similar to a relational database, the edits file needs to be periodically applied to the fsimage file. The problem is that the namenode may not have the available resources-CPU or RAM-to do this while continuing to provide service to the cluster. This is where the secondary namenode comes in.

Question : You have a website www.QuickTechie.com, where you have one month user profile updates log. Now for the

classification analysis you want to save all the data in a single file called QT31012015.log which is approximately in 30GB in size.

Now using the MapReduce ETL job you are able to push this full file in a directory on HDFS called /log/QT/QT31012015.log. This file is divided into the approximately 70 blocks. Select from below what is stored in each block

1. Each block writes a separate .meta file containing information on the filename of which the block is a part

2. Each block contains only data from the /log/QT/QT31012015.log file

3. Access Mostly Uused Products by 50000+ Subscribers

4. Each block has a header and a footer containing metadata of /log/QT/QT31012015.log

Ans: 2

Exp : The HDFS namespace is stored by the NameNode. The NameNode uses a transaction log called the EditLog to persistently record every change that occurs to file system metadata. For example, creating a new file in HDFS causes the NameNode to insert a record into the EditLog indicating this. Similarly, changing the replication factor of a file causes a new record to be inserted into the EditLog. The NameNode uses a file in its local host OS file system to store the EditLog. The entire file system namespace, including the mapping of blocks to files and file system properties, is stored in a file called the FsImage. The FsImage is stored as a file in the NameNode's local file system too.

The NameNode keeps an image of the entire file system namespace and file Blockmap in memory. This key metadata item is designed to be compact, such that a NameNode with 4 GB of RAM is plenty to support a huge number of files and directories. When the NameNode starts up, it reads the FsImage and EditLog from disk, applies all the transactions from the EditLog to the in-memory representation of the FsImage, and flushes out this new version into a new FsImage on disk. It can then truncate the old EditLog because its transactions have been applied to the persistent FsImage. This process is called a checkpoint. In the current implementation, a checkpoint only occurs when the NameNode starts up. Work is in progress to support periodic checkpointing in the near future.

The DataNode stores HDFS data in files in its local file system. The DataNode has no knowledge about HDFS files. It stores each block of HDFS data in a separate file in its local file system. The DataNode does not create all files in the same directory. Instead, it uses a heuristic to determine the optimal number of files per directory and creates subdirectories appropriately. It is not optimal to create all local files in the same directory because the local file system might not be able to efficiently support a huge number of files in a single directory. When a DataNode starts up, it scans through its local file system, generates a list of all HDFS data blocks that correspond to each of these local files and sends this report to the NameNode: this is the Blockreport.When a file is written into HDFS, it is split into blocks. Each block contains just a portion of the file; there is no extra data in the block file. Although there is also a .meta file associated with each block, that file contains checksum data which is used to confirm the integrity of the block when it is read. Nothing on the DataNode contains information about what file the block is a part of; that information is held only on the NameNode.

Question : Which statement is true with respect to MapReduce . or YARN

1. It is the newer version of MapReduce, using this performance of the data processing can be increased.

2. The fundamental idea of MRv2 is to split up the two major functionalities of the JobTracker,

resource management and job scheduling or monitoring, into separate daemons.

3. Access Mostly Uused Products by 50000+ Subscribers

4. All of the above

5. Only 2 and 3 are correct

Ans : 5

Exp : MapReduce has undergone a complete overhaul in hadoop-0.23 and we now have, what we call, MapReduce 2.0 (MRv2) or YARN.

The fundamental idea of MRv2 is to split up the two major functionalities of the JobTracker,

resource management and job scheduling or monitoring, into separate daemons. The idea is to have a global ResourceManager (RM)

and per-application ApplicationMaster (AM). An application is either a single job in the classical sense of Map-Reduce jobs or a DAG of jobs.

Question : Which is the component of the ResourceManager

1. 1. Scheduler

2. 2. Applications Manager

3. Access Mostly Uused Products by 50000+ Subscribers

4. 4. All of the above

5. Only 1 and 2 are correct

Ans : 5

Exp : The ResourceManager has two main components: Scheduler and ApplicationsManager.

The Scheduler is responsible for allocating resources to the various running applications subject to familiar constraints of capacities,

queues etc. The Scheduler is pure scheduler in the sense that it performs no monitoring or tracking of status for the application.

Question :

Schduler of Resource Manager guarantees about restarting failed tasks either due to application failure or hardware failures.

1. True

2. False

1. True

2. False

Ans : 2

Exp : The Scheduler is responsible for allocating resources to the various running applications subject to familiar constraints of

capacities, queues etc. The Scheduler is pure scheduler in the sense that it performs no monitoring or tracking of status

for the application. Also, it offers no guarantees about restarting failed tasks either due to application failure or hardware

failures. The Scheduler performs its scheduling function based the resource requirements of the applications;

it does so based on the abstract notion of a resource Container which incorporates elements such as memory,

cpu, disk, network etc.

Question :

Which statement is true about ApplicationsManager

1. is responsible for accepting job-submissions

2. negotiating the first container for executing the application specific ApplicationMaster

and provides the service for restarting the ApplicationMaster container on failure.

3. Access Mostly Uused Products by 50000+ Subscribers

4. All of the above

5. 1 and 2 are correct

Ans : 5

Exp : The ApplicationsManager is responsible for accepting job-submissions,

negotiating the first container for executing the application specific ApplicationMaster and provides the

service for restarting the ApplicationMaster container on failure.

Question :

NameNode store block locations persistently ?

1. True

2. Flase

Ans : 2

Exp : NameNode does not store block locations persistently, since this information is reconstructed from datanodes when system starts.

Question :

Which tool is used to list all the blocks of a file ?

1. hadoop fs

2. hadoop fsck

3. Access Mostly Uused Products by 50000+ Subscribers

4. Not Possible

Ans : 2

Question : HDFS can not store a file which size is greater than one node disk size :

1. True

2. False

Ans : 2

Exp : It can store because it is divided in block and block can be stored anywhere..

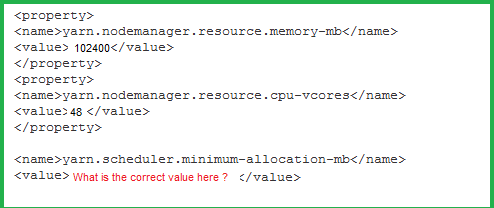

Question : Your HadoopExam Inc cluster has each node in your Hadoop

cluster with running YARN, and has 140GB memory and 40 cores.

Your yarn-site.xml has the configuration as shown in image :

You want YARN to launch a maxinum of 100 Containers per node.

Enter the property value that would restrict YARN from launching

more than 100 containers per node:

1. 2048

2. 1024

3. Access Mostly Uused Products by 50000+ Subscribers

4. 30

Ans : 2

Exp : YARN takes into account all of the available resources on each machine in the cluster. Based on the available resources, YARN negotiates resource requests from applications (such as MapReduce) running in the cluster. YARN then provides processing capacity to each application by allocating Containers. A Container is the basic unit of processing capacity in YARN, and is an encapsulation of resource elements (memory, CPU, etc.). In a Hadoop cluster, it is vital to balance the usage of memory (RAM), processors (CPU cores) and disks so that processing is not constrained by any one of these cluster resources. As a general recommendation, allowing for two Containers per disk and per core gives the best balance for cluster utilization. When determining the appropriate YARN and MapReduce memory configurations for a cluster node, start with the available hardware resources. Specifically, note the following values on each node:

RAM (Amount of memory)

CORES (Number of CPU cores)

DISKS (Number of disks)

The total available RAM for YARN and MapReduce should take into account the Reserved Memory. Reserved Memory is the RAM needed by system processes and other Hadoop processes, such as HBase. Reserved Memory = Reserved for stack memory + Reserved for HBase memory (If HBase is on the same node) Use the following table to determine the Reserved Memory per node.(102400 MB total RAM) / (100 # of Containers) = 1024 MB minimum per Container. The next calculation is to determine the maximum number of Containers allowed per node. The following formula can be used:

# of Containers = minimum of (2*CORES, 1.8*DISKS, (Total available RAM) / MIN_CONTAINER_SIZE)

Where MIN_CONTAINER_SIZE is the minimum Container size (in RAM). This value is dependent on the amount of RAM available -- in smaller memory nodes, the minimum Container size should also be smaller.The final calculation is to determine the amount of RAM per container:

RAM-per-Container = maximum of (MIN_CONTAINER_SIZE, (Total Available RAM) / Containers))

Question : In Acmeshell Inc Hadoop cluster you had set the value of mapred.child.java.opts to -XmxM on all TaskTrackers in the cluster.

You set the same configuration parameter to -Xmx256M on the JobTracker. What size heap will a Map task running on the cluster have?

1. 64MB

2. 128MB

3. Access Mostly Uused Products by 50000+ Subscribers

4. 256MB

5. The job will fail because of the discrepancy

Ans : 2

Exp : Setting mapred.child.java.opts thusly:

SET mapred.child.java.opts="-Xmx4G -XX:+UseConcMarkSweepGC";

is unacceptable. But this seem to go through fine:SET mapred.child.java.opts=-Xmx4G -XX:+UseConcMarkSweepGC; (minus the double-quotes)mapred.child.java.opts is a setting which is read by the TaskTracker when it starts up. The value configured on the JobTracker has no effect; it is the value on the slave node which is used. (The value can be modified by a job, if it contains a different value for the parameter.) Two other guards can restrict task memory usage. Both are designed for admins to enforce QoS, so if you're not one of the admins on the cluster, you may be unable to change them.

The first is the ulimit, which can be set directly in the node OS, or by setting mapred.child.ulimit.

The second is a pair of cluster-wide mapred.cluster.max.*.memory.mb properties that enforce memory usage by comparing job settings mapred.job.map.memory.mb and mapred.job.reduce.memory.mb against those cluster-wide limits.

I am beginning to think that this doesn't even have anything to do with the heap size setting. Tinkering with mapred.child.java.opts in any way is causing the same outcome. For example setting it thusly, SET mapred.child.java.opts="-XX:+UseConcMarkSweepGC"; is having the same result of MR jobs getting killed right away.

Question : You have a website www.QuickTechie.com, where you have one month user profile updates log. Now for the classification analysis you want to

save all the data in a single file called QT31012015.log which is approximately in 30GB in size. Now using the MapReduce ETL job you are able to

push this full file in a directory on HDFS called /log/QT/QT31012015.log. Select the operation which you can do on the /log/QT/QT31012015.log file

1. You can move the file

2. You can overwrite the file by creating a new file with the same name

3. You can rename the file

4. You can update the file's contents

5. You can delete the file

1. 1,2,3

2. 1,3,4

3. Access Mostly Uused Products by 50000+ Subscribers

4. 2,4,5

5. 2,3,4

Correct Answer : Get Lastest Questions and Answer :

Explanation: HDFS supports writing files once (they cannot be updated). This is a stark difference between HDFS and a generic file system (like a Linux file system). Generic file systems allows files to be modified.However appending to a file is supported. Appending is supported to enable applications like HBase.HDFS is a write-once filesystem; after a file has been written to HDFS it cannot be modified. In some versions of HDFS you can append to the file, but you can never modify the existing contents. Files can be moved, deleted, or renamed, as these are metadata operations. You cannot overwrite a file with an existing name. HDFS applications need a write-once-read-many access model for files. A file once created, written, and closed need not be changed. This assumption simplifies data coherency issues and enables high throughput data access. A Map/Reduce application or a web crawler application fits perfectly with this model. There is a plan to support appending-writes to files in the future.

Related Questions

Question :

Select the feature of Mapreduce

1. Automatic parallelization and distribution

2. fault-tolerance

3. Access Mostly Uused Products by 50000+ Subscribers

4. All of the above

Question : While upgrading your cluster from MRv to MRv, you wish to have additional data nodes with the higher amount of Hard Drive and Memory.

But you are worried that, you can have new nodes with the advanced hardware or not. Select the correct statement regarding new nodes in MRv2 cluster.

1. With new slave nodes you can have any amount of hard drive space

2. With new slave nodes you must have at least 12 X2TB of hard drive space

3. Access Mostly Uused Products by 50000+ Subscribers

4. New node hardware must be equal to existing hardware.

Question : In the QuickTechie Inc Hadoop Infrastructure, you have created a rack topology and in the script to identify each machine

as being in hadooprack1, hadooprack2, or hadooprack3. Now as a developer from your desktop which is outside of your QuickTechie Hadoop cluster

but on the same network, you writes 64MB of data. Your Hadoop cluster has all the default configuration, with first replica of the block is written

to a node on hadooprack2. Now select the correct statement for other two replicas.

1. One of the replica will be written on hadooprack2, and the other on hadooprack3.

2. Either both will be written to nodes on hadooprack1, or both will be written to nodes on hadooprack3.

3. Access Mostly Uused Products by 50000+ Subscribers

4. One will be written to hadooprack1, and one will be written to hadooprack3.

Question : The NameNode uses a file in its _______ to store the EditLog.

1. Any HDFS Block

2. metastore

3. Access Mostly Uused Products by 50000+ Subscribers

4. local hdfs block

Question : Select the correct options

1. Either FsImage or the EditLog must be accurate to HDFS wrok properly

2. NameNode can be configured to support maintaining multiple copies of the FsImage and EditLog

3. Access Mostly Uused Products by 50000+ Subscribers

4. 1 and 2

5. 1,2 and 3

Question : Select the correct option

1. When a file is deleted by a user or an application, it is immediately removed from HDFS

2. When a file is deleted by a user or an application, it is not immediately removed from HDFS. Instead, HDFS first renames it to a file in the /trash directory.

3. Access Mostly Uused Products by 50000+ Subscribers

4. 1,2

5. 2,3