Cloudera Hadoop Developer Certification Questions and Answer (Dumps and Practice Questions)

Question :

Is there anyway in the MapReduce model that reducers communicate with each other in Hadoo framework ?

1. Yes, using JobConf confguration object it is possible

2. Using distributed cache it is possible

3. Access Mostly Uused Products by 50000+ Subscribers

4. No, each reducers runs independently and in isolation.

Correct Answer : Get Lastest Questions and Answer :

Question :

What is true about combiner ?

1. Combiner does the local aggregation of data, thereby allowing the number of mappers to process input data faster.

2. Combiner does the local aggregation of data, thereby reducing the number of mappers that need to run.

3. Access Mostly Uused Products by 50000+ Subscribers

Correct Answer : Get Lastest Questions and Answer :

Question :

in 3 mappers and 2 reducers how many distinct copy operations will be there in the sort or shuffle phase

1. 3

2. 2

3. Access Mostly Uused Products by 50000+ Subscribers

4. 5

Correct Answer : Get Lastest Questions and Answer :

Explanation: Since each mappers intermediate output will go to every reducers

Related Questions

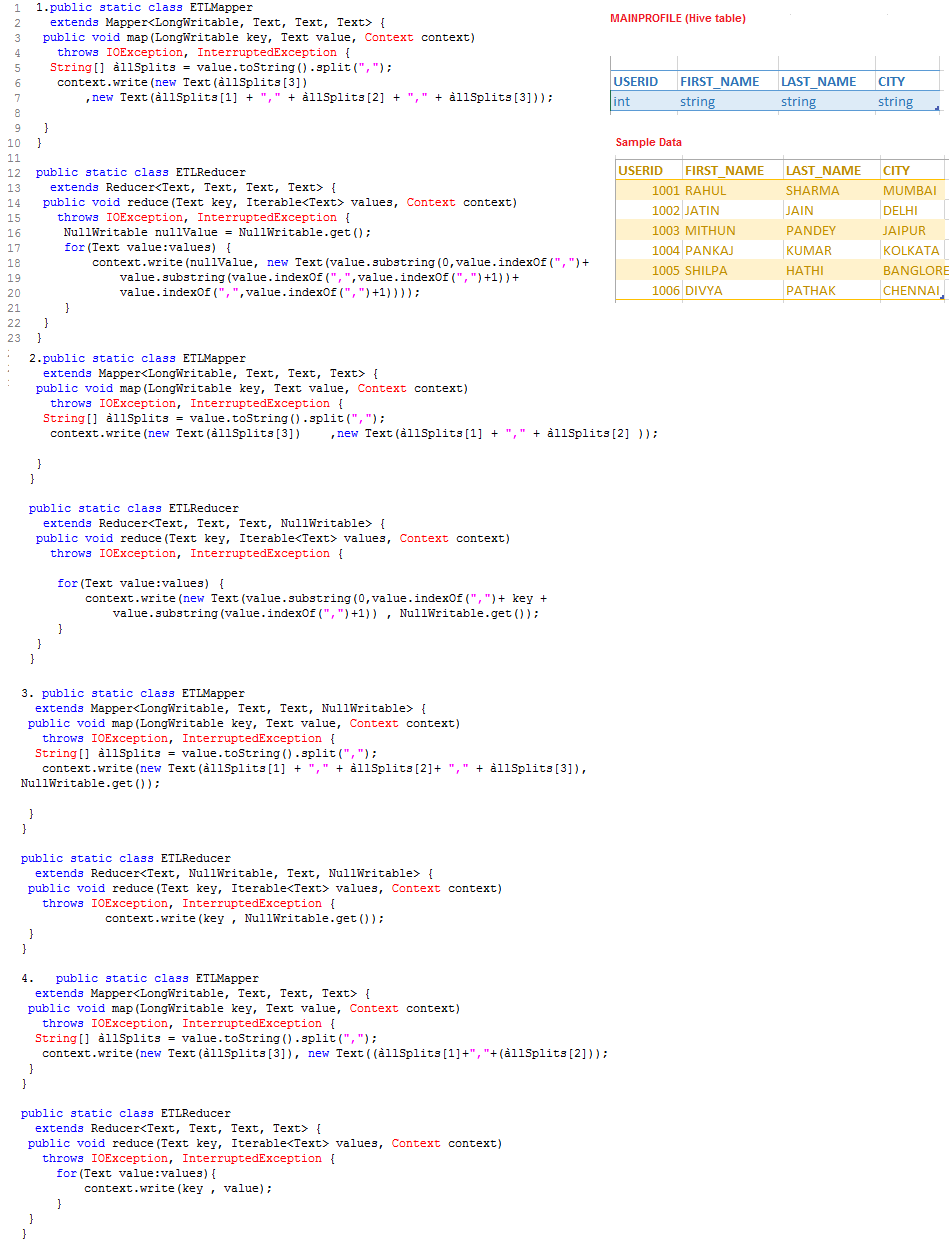

Question :We have extracted the data from

MySQL backend database of QuickTechie.com

website and stored in the

Hive table called MAINPROFILE as shown in image

with the sample data and also shown column datatype.

As this table is created from the data

which is already stored in a

warehouse directory of Hive.

As this table is created from the data

which is already stored in a

warehouse directory of Hive.

Select the correct MapReduce

code which simulate

the following Query in a single file.

SELECT FIRST_NAME,CITY,LAST_NAME

FROM MAINPROFILE

ORDER BY CITY;

1. 1

2. 2

3. Access Mostly Uused Products by 50000+ Subscribers

4. 4

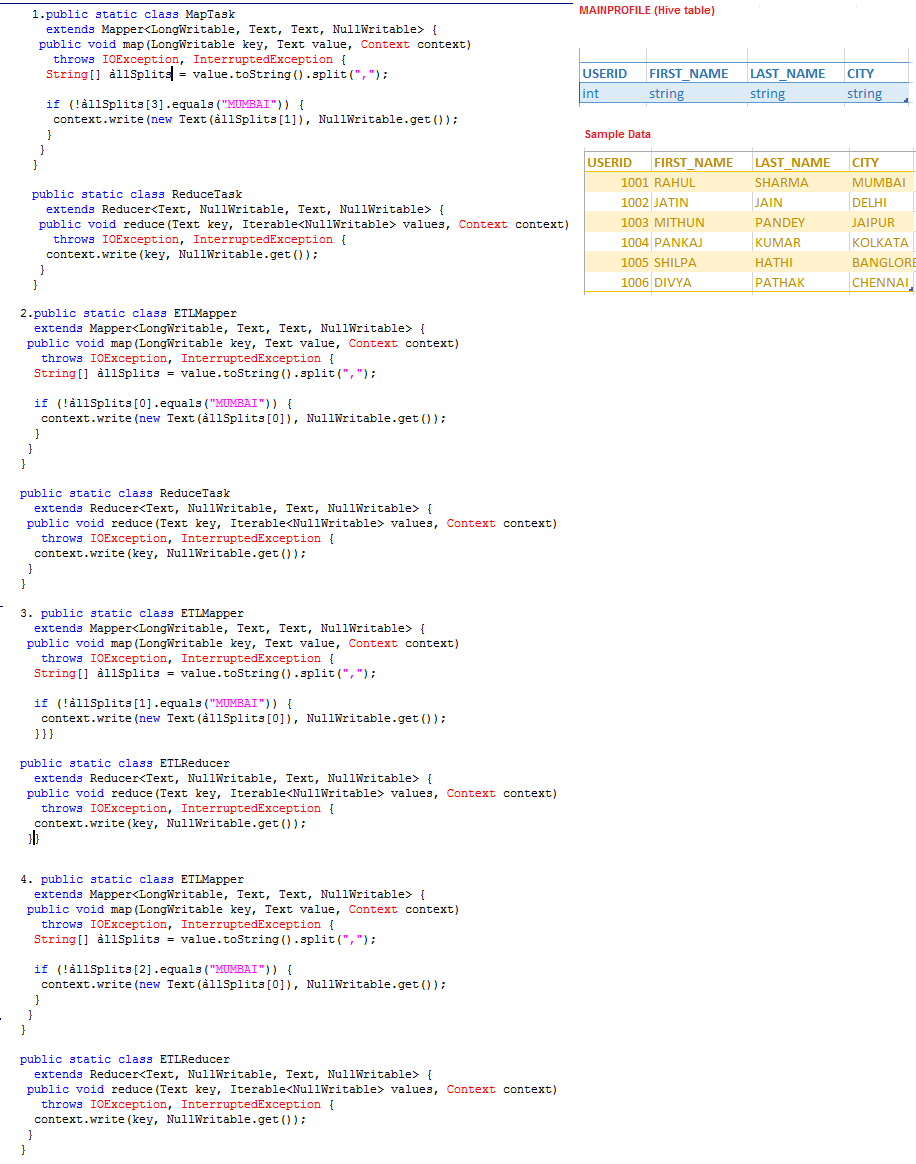

Question : We have extracted the data from

MySQL backend database of QuickTechie.com

website and stored in the

Hive table called MAINPROFILE as shown in image

with the sample data and also shown column datatype.

As this table is created from the data

which is already stored in a

warehouse directory of Hive.

As this table is created from the data

which is already stored in a

warehouse directory of Hive.

Select the correct MapReduce

code which simulate

the following Query in a single file.

SELECT DISTINCT FIRST_NAME

FROM MAINPROFILE

WHERE CITY != "MUMBAI"

1. 1

2. 2

3. Access Mostly Uused Products by 50000+ Subscribers

4. 4

Question : Given this data file: MAIN.PROFILE.log

1 Feeroz Fremon

2 Jay Jaipur

3 Amit Alwar

10 Banjara Banglore

11 Jayanti Jaipur

101 Sehwag Shimla

You write the following Pig script:

users = LOAD 'MAIN.PROFILE.log' AS (userid, username, city);

sortedusers = ORDER users BY id DESC;

DUMP sortedusers;

The output looks like this:

(3,Amit , Alwar)

(2,Jayanti, Jaipur)

(11,Jane, Jaipur)

(101,Sehwag, Shimla)

(10,Banjara, Banglore)

(1,Feeroz, Fremon)

Choose one line which, when modified as shown, would result in the output being displayed in descending ID order

1. DUMP sortedusers;

2. users = LOAD 'MAIN.PROFILE.log' AS (userid:int, usernamename,city);

3. Access Mostly Uused Products by 50000+ Subscribers

Question : QuickTechie Inc has a log file which is tab-delimited text file. File contains two columns username and loginid

You want use an InputFormat that returns the username as the key and the loginid as the value. Which of the following

is the most appropriate InputFormat should you use?

1. KeyValueTextInputFormat

2. MultiFileInputFormat

3. Access Mostly Uused Products by 50000+ Subscribers

4. SequenceFileInputFormat

5. TextInputFormat

Question : Apache Sqoop(TM) is a tool designed for efficiently transferring bulk data between Apache Hadoop and structured datastores such as relational

databases. You use Sqoop to import a table from your RDBMS into HDFS. You have configured to use 3 mappers in Sqoop, to controll the number of

parallelism and memory in use. Once the table import is finished, you notice that total 7 Mappers have run, there are 7 output files in HDFS,

and 4 of the output files is empty. Why?

1. The table does not have a numeric primary key

2. The table does not have a primary key

3. Access Mostly Uused Products by 50000+ Subscribers

4. The table does not have a unique key

Question : In the QuickTechie Inc Hadoop cluster you have defined block size as MB. The input file contains MB of valid input data

and is loaded into HDFS. How many map tasks should run without considering any failure of MapTask during the execution of this job?

1. 1

2. 2

3. Access Mostly Uused Products by 50000+ Subscribers

4. 4