Dell EMC Data Science Associate Certification Questions and Answers (Dumps and Practice Questions)

Question : You have been assigned to run a linear regression model for each of , distinct districts, and all the data is currently stored in a PostgreSQL database. Which tool/library would you use to produce these models with the least effort?

1. MADlib

2. Mahout

4. HBase

Correct Answer : 1

Explanation: MADlib runs inside the database, so a model can be fit for each district without exporting the data from PostgreSQL. The key philosophies driving the architecture of MADlib are: operate on the data locally, in-database, and do not move it between multiple runtime environments unnecessarily; utilize best-of-breed database engines, but separate the machine learning logic from database-specific implementation details; leverage MPP shared-nothing technology, such as the Pivotal Greenplum Database, to provide parallelism and scalability; and maintain an open implementation with active ties to ongoing academic research.

Classification : When the desired output is categorical in nature, we use classification methods to build a model that predicts which of the various categories a new result would fall into. The goal of classification is to label each incoming record with the correct class.

Example: If we had demographic data and other features of individuals applying for loans, along with historical data showing which past loans had defaulted, then we could build a model that estimates the likelihood that a new applicant will default. In this case the categories are "will default" or "won't default", which are two discrete classes of output.
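The loan example can be made concrete with a small classifier. The following is a minimal sketch in plain Python, assuming logistic regression trained by batch gradient descent; the two features, the six applicants, and all function names are hypothetical and are not taken from MADlib or from the exam material.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(rows, labels, learning_rate=0.1, epochs=2000):
    """Fit logistic regression weights by batch gradient descent."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(rows, labels):
            # Prediction error for this record (predicted probability minus label).
            error = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x))) - y
            grad_b += error
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias

def default_probability(model, x):
    """Estimated probability that an applicant with features x defaults."""
    weights, bias = model
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))

# Hypothetical applicants: (debt-to-income ratio, number of late payments).
applicants = [(0.10, 0), (0.20, 0), (0.15, 1), (0.60, 3), (0.70, 4), (0.80, 2)]
defaulted = [0, 0, 0, 1, 1, 1]  # 1 = loan defaulted, 0 = loan repaid

model = train_logistic(applicants, defaulted)
new_applicant = (0.75, 3)
label = "will default" if default_probability(model, new_applicant) >= 0.5 else "won't default"
print(label)
```

The model outputs a probability, and the 0.5 threshold used here to turn it into one of the two classes is itself an assumption that a real analysis would tune.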

Regression : When the desired output is continuous in nature we use regression methods to build a model that predicts the output value.

Example: If we had data that described properties of real estate listings, then we could build a model to predict the sale value for homes based on the known characteristics of the houses. This is a regression because the output response is continuous in nature rather than categorical.
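A rough illustration of fitting one regression per group, as in the districts question, is sketched below in plain Python. It assumes a single predictor and ordinary least squares; the district names, listing values, and the `fit_line` helper are hypothetical, and this is not how MADlib itself is invoked.

```python
from collections import defaultdict

def fit_line(points):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Hypothetical listings: (district, floor area, sale price in thousands).
listings = [
    ("north", 50, 150), ("north", 80, 240), ("north", 100, 300),
    ("south", 50, 110), ("south", 80, 170), ("south", 100, 210),
]

# Group the rows by district, then fit one model per group.
by_district = defaultdict(list)
for district, area, price in listings:
    by_district[district].append((area, price))

models = {district: fit_line(points) for district, points in by_district.items()}
for district, (intercept, slope) in models.items():
    print(district, round(intercept, 3), round(slope, 3))
```

An in-database library performs the same group-then-fit step where the data already lives, which is why it takes less effort than exporting each district's rows to another tool.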

Clustering : Here we try to identify groups of data such that the items within one cluster are more similar to each other than they are to the items in any other cluster.

Example: In customer segmentation analysis the goal is to identify specific groups of customers that behave in a similar fashion so that various marketing campaigns can be designed to reach these markets. When the customer segments are known in advance, this is a supervised classification task; when we let the data itself identify the segments, it is a clustering task.

Topic Modeling : Topic modeling is similar to clustering in that it attempts to identify clusters of documents that are similar to each other, but it is more specialized in a text domain where it is also trying to identify the main themes of those documents.

Association Rule Mining : Also called market basket analysis or frequent itemset mining, this attempts to identify which items tend to occur together more frequently than random chance would indicate, suggesting an underlying relationship between the items.

Example: In an online web store association rule mining can be used to identify what products tend to be purchased together. This can then be used as input into a product recommender engine to suggest items that may be of interest to the customer and provide upsell opportunities.

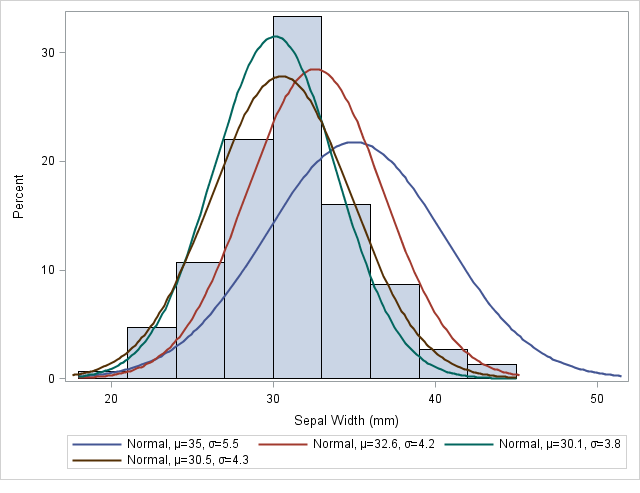

Descriptive Statistics : Descriptive statistics don't provide a model and thus are not considered a learning method, but they can be helpful in providing information to an analyst to understand the underlying data and can provide valuable insights into the data that may influence choice of data model.

Example: Calculating the distribution of data within each variable of a dataset can help an analyst understand which variables should be treated as categorical variables and which as continuous variables as well as understanding what sort of distribution the values fall in.
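As a small sketch of this kind of summary, the snippet below uses only the Python standard library. The `ages` and `housing` columns are made-up sample data, chosen to contrast a continuous variable with a categorical one.

```python
import statistics
from collections import Counter

# Hypothetical columns from a dataset.
ages = [23, 35, 31, 42, 35, 58, 29]                               # continuous
housing = ["rent", "own", "rent", "rent", "own", "rent", "other"]  # categorical

# For a continuous variable, location and spread are informative.
age_summary = {
    "min": min(ages),
    "median": statistics.median(ages),
    "mean": statistics.mean(ages),
    "max": max(ages),
    "stdev": statistics.stdev(ages),
}

# For a categorical variable, a frequency table is the natural summary.
housing_counts = Counter(housing)

print(age_summary)
print(housing_counts.most_common())
```

A variable with only a handful of distinct values, such as `housing` here, is a candidate for categorical treatment, while one with many distinct numeric values is usually treated as continuous.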

Validation : Using a model without understanding its accuracy can lead to disastrous consequences. For that reason it is important to understand the error of a model and to evaluate the model for accuracy on testing data. Frequently in data analysis the data is separated into training data and testing data so that the validity of the model can be assessed in a statistically sound way and so that over-fitting of the training data can be detected. N-fold cross validation is also frequently utilized.
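The train/test separation used in N-fold cross validation can be sketched as follows. This is a minimal illustration in plain Python, and the `k_fold_indices` helper and its parameters are hypothetical names rather than part of any particular library.

```python
import random

def k_fold_indices(n_rows, k, seed=0):
    """Yield (train, test) row-index lists for k-fold cross validation."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test

splits = list(k_fold_indices(n_rows=10, k=5))
for train, test in splits:
    # A model would be fit on `train` and scored on `test` here.
    print(sorted(test), len(train))
```

Each row appears in exactly one test fold, so every record is used for evaluation once and for training in the remaining folds.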

Question : Your customer provided you with , unlabeled records and asked you to separate them into three groups. What is the correct analytical method to use?

1. Semi Linear Regression

2. Logistic regression

4. Linear regression

5. K-means clustering

Correct Answer : 5

Explanation: k-means clustering is a method of vector quantization, originally from signal processing, that is popular for cluster analysis in data mining. k-means clustering aims to partition n observations into k clusters in which each observation belongs to the cluster with the nearest mean, serving as a prototype of the cluster. This results in a partitioning of the data space into Voronoi cells.

The problem is computationally difficult (NP-hard); however, there are efficient heuristic algorithms that are commonly employed and converge quickly to a local optimum. These are usually similar to the expectation-maximization algorithm for mixtures of Gaussian distributions via an iterative refinement approach employed by both algorithms. Additionally, they both use cluster centers to model the data; however, k-means clustering tends to find clusters of comparable spatial extent, while the expectation-maximization mechanism allows clusters to have different shapes.

The algorithm has nothing to do with and should not be confused with k-nearest neighbor, another popular machine learning technique.
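The iterative refinement described above can be sketched in a few lines of plain Python. This is a minimal version of the standard heuristic (often called Lloyd's algorithm), assuming Euclidean distance and caller-supplied starting centers; the nine sample points and the choice of initial centers are made up for illustration.

```python
import math

def k_means(points, centers, max_iter=100):
    """Alternate between assigning points and recomputing cluster means."""
    centers = list(centers)
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster with the nearest mean.
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its assigned points.
        new_centers = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centers[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centers == centers:  # converged to a local optimum
            break
        centers = new_centers
    return centers, clusters

# Hypothetical unlabeled records forming three visible groups.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8), (1, 8), (2, 9), (1, 9)]
centers, clusters = k_means(points, centers=[(1, 1), (8, 8), (1, 8)])
for center, cluster in zip(centers, clusters):
    print(center, cluster)
```

Because the procedure only reaches a local optimum, the result depends on the starting centers; practical implementations typically try several initializations.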

Question : You are performing a market basket analysis using the Apriori algorithm. Which measure is a ratio describing how many more times two items are present together than would be expected if those two items were statistically independent?

1. Confidence

2. Support

4. Lift

Correct Answer : 4

Explanation: The so-called lift value is simply the quotient of the posterior and the prior confidence of an association rule. That is, if "∅ → bread" (the rule with an empty antecedent) has a confidence of 60% and "cheese → bread" has a confidence of 72%, then the lift value (of the second rule) is 72/60 = 1.2. If the posterior confidence equals the prior confidence, the value of this measure is 1. If the posterior confidence is greater than the prior confidence, the lift value exceeds 1 (the presence of the antecedent items raises the confidence), and if the posterior confidence is less than the prior confidence, the lift value is less than 1 (the presence of the antecedent items lowers the confidence).
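The bread and cheese figures can be reproduced with a short calculation. The sketch below is plain Python; the 100 transactions are fabricated purely so that the prior confidence comes out at 60% and the posterior confidence at 72%, and the helper names are hypothetical.

```python
def support(transactions, items):
    """Fraction of transactions that contain every item in `items`."""
    items = set(items)
    return sum(items <= t for t in transactions) / len(transactions)

def confidence(transactions, antecedent, consequent):
    """Posterior confidence of the rule antecedent -> consequent."""
    both = set(antecedent) | set(consequent)
    return support(transactions, both) / support(transactions, antecedent)

def lift(transactions, antecedent, consequent):
    """Posterior confidence divided by the prior confidence of the consequent."""
    return confidence(transactions, antecedent, consequent) / support(transactions, consequent)

# Made-up baskets: 60 of 100 contain bread, and 18 of the 25 cheese baskets do.
transactions = (
    [{"cheese", "bread"}] * 18
    + [{"cheese"}] * 7
    + [{"bread"}] * 42
    + [{"milk"}] * 33
)

prior = support(transactions, {"bread"})                   # confidence of "∅ → bread"
posterior = confidence(transactions, {"cheese"}, {"bread"})
print(prior, posterior, round(lift(transactions, {"cheese"}, {"bread"}), 3))
```

A lift above 1 here means that baskets containing cheese include bread more often than baskets in general do.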

Related Questions

Question : Assume that you have a data frame in R. Which function would you use to display descriptive statistics about this variable?

1. levels

2. attributes

3. str

4. summary

Question : What is the mandatory clause that must be included when using window functions?

1. OVER

2. RANK

3. PARTITION BY

4. RANK BY

Question : What is the purpose of the process step "parsing" in text analysis?

1. computes the TF-IDF values for all keywords and indices

2. executes the clustering and classification to organize the contents

3. performs the search and/or retrieval in finding a specific topic or an entity in a document

4. imposes a structure on the unstructured/semi-structured text for downstream analysis

Question : Which word or phrase completes the statement? A data warehouse is to a centralized database for reporting as an analytic sandbox is to a _______?

1. Collection of data assets for modeling

2. Collection of low-volume databases

3. Centralized database of KPIs

4. Collection of data assets for ETL

Question : You do a Student's t-test to compare the average test scores of sample groups from populations A and B. Group A averaged 10 points higher than group B. You find that this difference is significant, with a p-value of 0.03. What does that mean?

1. There is a 3% chance that you have identified a difference between the populations when in reality there is none.

2. The difference in scores between a sample from population A and a sample from population B will tend to be within 3% of 10 points.

3. There is a 3% chance that a sample group from population A will score 10 points higher than a sample group from population B.

4. There is a 97% chance that a sample group from population A will score 10 points higher than a sample group from population B.

Question : What is one modeling or descriptive statistical function in MADlib that is typically not provided in a standard relational database?

1. Expected value

2. Variance

3. Linear regression

4. Quantiles