Cloudera Hadoop Administrator Certification Certification Questions and Answer (Dumps and Practice Questions)

Question : The NameNode uses a file in its _______ to store the EditLog.

1. Any HDFS Block

2. metastore

3. Access Mostly Uused Products by 50000+ Subscribers

4. local hdfs block

Correct Answer : Get Lastest Questions and Answer :

Explanation:

The HDFS namespace is stored by the NameNode. The NameNode uses a transaction log called the EditLog to persistently record every change that occurs to file system metadata. For example, creating a new file in HDFS causes the NameNode to insert a record into the EditLog indicating this. Similarly, changing the replication factor of a file causes a new record to be inserted into the EditLog. The NameNode uses a file in its local host OS file system to store the EditLog. The entire file system namespace, including the mapping of blocks to files and file system properties, is stored in a file called the FsImage. The FsImage is stored as a file in the NameNodes local file system too.

The NameNode keeps an image of the entire file system namespace and file Blockmap in memory. This key metadata item is designed to be compact, such that a NameNode with 4 GB of RAM is plenty to support a huge number of files and directories. When the NameNode starts up, it reads the FsImage and EditLog from disk, applies all the transactions from the EditLog to the in-memory representation of the FsImage, and flushes out this new version into a new FsImage on disk. It can then truncate the old EditLog because its transactions have been applied to the persistent FsImage. This process is called a checkpoint. In the current implementation, a checkpoint only occurs when the NameNode starts up. Work is in progress to support periodic checkpointing in the near future.

The DataNode stores HDFS data in files in its local file system. The DataNode has no knowledge about HDFS files. It stores each block of HDFS data in a separate file in its local file system. The DataNode does not create all files in the same directory. Instead, it uses a heuristic to determine the optimal number of files per directory and creates subdirectories appropriately. It is not optimal to create all local files in the same directory because the local file system might not be able to efficiently support a huge number of files in a single directory. When a DataNode starts up, it scans through its local file system, generates a list of all HDFS data blocks that correspond to each of these local files and sends this report to the NameNode: this is the Blockreport.

Question : Select the correct options

1. Either FsImage or the EditLog must be accurate to HDFS wrok properly

2. NameNode can be configured to support maintaining multiple copies of the FsImage and EditLog

3. Access Mostly Uused Products by 50000+ Subscribers

4. 1 and 2

5. 1,2 and 3

Correct Answer : Get Lastest Questions and Answer :

Explanation: Metadata Disk Failure

The FsImage and the EditLog are central data structures of HDFS. A corruption of these files can cause the HDFS instance to be non-functional. For this reason, the NameNode can be configured to support maintaining multiple copies of the FsImage and EditLog. Any update to either the FsImage or EditLog causes each of the FsImages and EditLogs to get updated synchronously. This synchronous updating of multiple copies of the FsImage and EditLog may degrade the rate of namespace transactions per second that a NameNode can support. However, this degradation is acceptable because even though HDFS applications are very data intensive in nature, they are not metadata intensive. When a NameNode restarts, it selects the latest consistent FsImage and EditLog to use.

The NameNode machine is a single point of failure for an HDFS cluster. If the NameNode machine fails, manual intervention is necessary. Currently, automatic restart and failover of the NameNode software to another machine is not supported.

Question : Select the correct option

1. When a file is deleted by a user or an application, it is immediately removed from HDFS

2. When a file is deleted by a user or an application, it is not immediately removed from HDFS. Instead, HDFS first renames it to a file in the /trash directory.

3. Access Mostly Uused Products by 50000+ Subscribers

4. 1,2

5. 2,3

Correct Answer : Get Lastest Questions and Answer :

Explanation: File Deletes and Undeletes

When a file is deleted by a user or an application, it is not immediately removed from HDFS. Instead, HDFS first renames it to a file in the /trash directory. The file can be restored quickly as long as it remains in /trash. A file remains in /trash for a configurable amount of time. After the expiry of its life in /trash, the NameNode deletes the file from the HDFS namespace. The deletion of a file causes the blocks associated with the file to be freed. Note that there could be an appreciable time delay between the time a file is deleted by a user and the time of the corresponding increase in free space in HDFS.

A user can Undelete a file after deleting it as long as it remains in the /trash directory. If a user wants to undelete a file that he/she has deleted, he/she can navigate the /trash directory and retrieve the file. The /trash directory contains only the latest copy of the file that was deleted. The /trash directory is just like any other directory with one special feature: HDFS applies specified policies to automatically delete files from this directory. The current default policy is to delete files from /trash that are more than 6 hours old. In the future, this policy will be configurable through a well defined interface.

Decrease Replication Factor

When the replication factor of a file is reduced, the NameNode selects excess replicas that can be deleted. The next Heartbeat transfers this information to the DataNode. The DataNode then removes the corresponding blocks and the corresponding free space appears in the cluster. Once again, there might be a time delay between the completion of the setReplication API call and the appearance of free space in the cluster.

Related Questions

Question :

Select the correct statement which applies to "Fair Scheduler"

1. Fair Scheduler allows assigning guaranteed minimum shares to queues

2. queue does not need its full guaranteed share, the excess will not be splitted between other running apps.

3. Access Mostly Uused Products by 50000+ Subscribers

4. 1 and 3

5. 1,2 and 3

Question : In fair scheduler you have defined a Hierarchical queue named QueueC, whose parent is QueueB and QueueB's parent is QueueA. Which is the

correct name format to reffer the QueueB

1. root.QueueB

2. QueueC.QueueB

3. Access Mostly Uused Products by 50000+ Subscribers

4. leaf.QueueC.QueueB

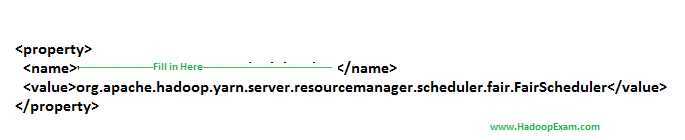

Question : To use the Fair Scheduler first assign the appropriate scheduler class in yarn-site.xml:

Select the correct value which can be placed in the name field.

1. yarn.resourcemanager.scheduler

2. yarn.resourcemanager.class

3. Access Mostly Uused Products by 50000+ Subscribers

4. yarn.scheduler.class

Question : You have a setup of YARN cluster where the total application memory available is GB, there are department queues,

IT and DCS. The IT queue has 15 GB allocated and DCS queue has 5 GB allocated. Each map task requires 10 GB allocation.

How does the FairScheduler assign the available memory resources under the Single Resource Fairness(SRF) rule?

1. DCS has less resources and will be granted the next 10 GB that becomes available

2. None of them will be granted any memory as both queues have allocated memory

3. Access Mostly Uused Products by 50000+ Subscribers

4. IT has more resources and will be granted the next 10 GB that becomes available

Question : Your Data Management Hadoop cluster has a total of GB of memory capacity.

Researcher Allen submits a MapReduce Job Equity which is configured to require a total of 100 GB of memory and few seconds later,

Researcher Babita submits a MapReduce Job ETF which is configured to require a total of 15 GB of memory. Using the YARN FairScheduler,

do the tasks in Job Equity have to finish before the tasks in Job ETF can start?

1. The tasks in Job ETF have to wait until the tasks in Job Equity to finish as there is no memory left

2. The tasks in Job ETF do not have to wait for the tasks in Job Equity to finish, tasks in Job ETF can start when resources become available

3. Access Mostly Uused Products by 50000+ Subscribers

4. The tasks in Job Equity have to wait until the tasks in Job ETF to finish as small jobs will be given priority

Question : Select the two correct statements from below regarding the Hadoop Cluster Infrastructure

configured with Fair Scheduler and each MapReduce ETL work will be assigned to a pool.

1. Pools are assigned priorities. Pools with higher priorities are executed before pools with lower priorities.

2. Each pool's share of task slots remains static during the execution of any individual job.

3. Access Mostly Uused Products by 50000+ Subscribers

4. Each pool's share of task slots may change throughout the course of job execution.

5. Each pool gets exactly 1/N of the total available task slots, where N is the number of jobs running on the cluster.

6. Pools get a dynamically-allocated share of the available task slots (subject to additional constraints)

1. 1,4,6

2. 3,4,6

3. Access Mostly Uused Products by 50000+ Subscribers

4. 2,3,4

5. 4,5,6