AWS Certified Solutions Architect - Professional Questions and Answers (Dumps and Practice Questions)

Question : Acmeshell.com has four people in its IT operations team who are responsible for managing the AWS infrastructure. The company wants each user to be able to launch and manage instances in a zone that the other users cannot modify.

Which of the below mentioned options is the best solution to set this up?

1. Create four AWS accounts and give each user access to a separate account.

2. Create four IAM users and four VPCs and allow each IAM user to have access to separate VPCs.

3. …

4. Create an IAM user and give it permission to launch instances of different sizes only.

Correct Answer : …

Explanation: A Virtual Private Cloud (VPC) is a virtual network dedicated to the user's AWS account. The user can create subnets as required within a VPC. VPC also works with IAM, so the organization can create IAM users who have access to specific VPC resources, such as particular subnets or the security groups of the VPC. The sample policy below allows an IAM user to launch instances only into one subnet:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "ec2:RunInstances",
      "Resource": [
        "arn:aws:ec2:region::image/ami-*",
        "arn:aws:ec2:region:account:subnet/subnet-1a2b3c4d",
        "arn:aws:ec2:region:account:network-interface/*",
        "arn:aws:ec2:region:account:volume/*",
        "arn:aws:ec2:region:account:key-pair/*",
        "arn:aws:ec2:region:account:security-group/sg-123abc123"
      ]
    }
  ]
}

With this approach, the organization can create four subnets in separate Availability Zones and give each IAM user access to only one of them.

Your security credentials identify you to services in AWS and grant you unlimited use of your AWS resources, such as your Amazon VPC resources. You can use AWS Identity and Access Management (IAM) to allow other users, services, and applications to use your Amazon VPC resources without sharing your security credentials. You can choose to allow full use or limited use of your resources by granting users permission to use specific Amazon EC2 API actions. Some API actions support resource-level permissions, which allow you to control the specific resources that users can create or modify.
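To give each of the four users a subnet of their own, the same statement can be generated once per user with only the subnet ARN changing. The Python sketch below does that with the standard `json` module. The region, account ID, user names, and subnet IDs are hypothetical placeholders, and the resource list simply mirrors the sample policy rather than being a complete production policy; attaching the documents to IAM users is not shown.

```python
import json

# Hypothetical placeholders: substitute your own region, account ID, and subnet IDs.
REGION = "us-east-1"
ACCOUNT = "123456789012"
SECURITY_GROUP = "sg-123abc123"  # taken from the sample policy
USER_SUBNETS = {
    "ops-user-1": "subnet-1a2b3c4d",
    "ops-user-2": "subnet-2b3c4d5e",
    "ops-user-3": "subnet-3c4d5e6f",
    "ops-user-4": "subnet-4d5e6f7a",
}


def run_instances_policy(subnet_id: str) -> dict:
    """Return a policy document that allows ec2:RunInstances only into subnet_id.

    The resource list mirrors the sample policy; only the subnet ARN changes.
    """
    arn = f"arn:aws:ec2:{REGION}:{ACCOUNT}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "ec2:RunInstances",
                "Resource": [
                    f"arn:aws:ec2:{REGION}::image/ami-*",
                    f"{arn}:subnet/{subnet_id}",
                    f"{arn}:network-interface/*",
                    f"{arn}:volume/*",
                    f"{arn}:key-pair/*",
                    f"{arn}:security-group/{SECURITY_GROUP}",
                ],
            }
        ],
    }


# One policy document per IAM user, each scoped to that user's subnet.
policies = {user: run_instances_policy(subnet) for user, subnet in USER_SUBNETS.items()}

if __name__ == "__main__":
    print(json.dumps(policies["ops-user-2"], indent=2))
```

Generating the documents from one function keeps the four policies identical apart from the subnet, so a user cannot accidentally be granted a neighbour's subnet through a copy-paste error.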

Question : QuickTechie.com has created a multi-tenant Learning Management System (LMS). The application is hosted for five different tenants (clients) in VPCs in each tenant's own AWS account. QuickTechie.com wants to set up a centralized server that can connect to each tenant's LMS to upgrade it when required. For security reasons, QuickTechie.com also wants to ensure that no tenant VPC can connect to another tenant's VPC.

How can QuickTechie.com setup this scenario?

1. QuickTechie should set up a full mesh, with every VPC peered with every other VPC.

2. QuickTechie should set up VPC peering with all the VPCs peered with each other, but block the tenant VPCs' CIDR ranges to deny traffic between them.

3. …

4. QuickTechie should set up all the VPCs with the same CIDR but have a centralized VPC, so that only the centralized VPC can talk to the other VPCs using VPC peering.

Correct Answer : …

Explanation: A Virtual Private Cloud (VPC) is a virtual network dedicated to the user's AWS account. It enables the user to launch AWS resources into a virtual network that the user has defined. A VPC peering connection allows the user to route traffic between the peered VPCs using private IP addresses, as if they were part of the same network.

This is helpful when a VPC in the same or a different AWS account needs to connect to resources in another VPC. The organization wants one VPC to be able to connect with all the other VPCs while the other VPCs cannot connect to each other. This can be achieved by peering the central VPC with each tenant VPC and not peering the tenant VPCs with each other. Because VPC peering is not transitive, a tenant VPC cannot reach another tenant VPC through the central VPC. The VPCs can be in the same or separate AWS accounts, but their CIDR blocks must not overlap.
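The hub-and-spoke rule can be modelled in a few lines. The Python sketch below uses hypothetical CIDR blocks for one central VPC and five tenant VPCs; it encodes only the two properties the explanation relies on (peering is non-transitive, and peered CIDR blocks must not overlap) and does not model route tables or security groups.

```python
import ipaddress
from itertools import combinations

# Hypothetical CIDR blocks: one central (hub) VPC and five tenant VPCs.
VPCS = {
    "central": "10.0.0.0/16",
    "tenant1": "10.1.0.0/16",
    "tenant2": "10.2.0.0/16",
    "tenant3": "10.3.0.0/16",
    "tenant4": "10.4.0.0/16",
    "tenant5": "10.5.0.0/16",
}

# Hub-and-spoke: the central VPC peers with every tenant; tenants never peer with each other.
PEERINGS = {frozenset(("central", name)) for name in VPCS if name != "central"}


def can_route(a: str, b: str) -> bool:
    """VPC peering is non-transitive, so only directly peered VPCs can exchange traffic."""
    return frozenset((a, b)) in PEERINGS


def overlapping_pairs(vpcs: dict) -> list:
    """Return every pair of VPCs whose CIDR blocks overlap (such pairs cannot be peered)."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in vpcs.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


if __name__ == "__main__":
    print(len(PEERINGS), "peering connections")
    print("central -> tenant3:", can_route("central", "tenant3"))
    print("tenant1 -> tenant2:", can_route("tenant1", "tenant2"))
    print("overlaps:", overlapping_pairs(VPCS))
```

Running `overlapping_pairs` on a layout where every VPC uses the same CIDR (as in choice 4) would report every pair, which is why that design cannot be peered at all.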

Question : QuickTechie is planning to use a NoSQL database for its scalable data needs. The organization wants to host the application securely in an AWS VPC.

What action can be recommended to the organization?

1. QuickTechie should only use DynamoDB, because by default it is always part of the default subnet provided by AWS.

2. QuickTechie should set up its own NoSQL cluster on AWS instances and configure route tables and subnets.

3. …

4. QuickTechie should use DynamoDB and create the table within a private subnet.

Correct Answer : …

Explanation: Amazon Virtual Private Cloud (Amazon VPC) enables you to launch Amazon Web Services (AWS) resources into a virtual network that you've defined. This virtual network closely resembles a traditional network that you'd operate in your own data center, with the benefits of using the scalable infrastructure of AWS.

Amazon VPC allows the user to define a virtual networking environment in a private, isolated section of the AWS cloud, and the user has complete control over that environment. At the time this question was written, Amazon VPC did not support DynamoDB, so a user who wanted the database inside a VPC had to set up their own NoSQL database within it. As you get started with Amazon VPC, you should understand the key concepts of this virtual network and how it is similar to or different from your own networks. This section provides a brief description of the key concepts for Amazon VPC.

Amazon VPC is the networking layer for Amazon EC2. If you're new to Amazon EC2, see What is Amazon EC2? in the Amazon EC2 User Guide for Linux Instances to get a brief overview.

VPCs and Subnets

A virtual private cloud (VPC) is a virtual network dedicated to your AWS account. It is logically isolated from other virtual networks in the AWS cloud. You can launch your AWS resources, such as Amazon EC2 instances, into your VPC. You can configure your VPC: you can select its IP address range, create subnets, and configure route tables, network gateways, and security settings.

A subnet is a range of IP addresses in your VPC. You can launch AWS resources into a subnet that you select. Use a public subnet for resources that must be connected to the Internet, and a private subnet for resources that won't be connected to the Internet.

To protect the AWS resources in each subnet, you can use multiple layers of security, including security groups and network access control lists (ACLs).
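A subnet is a slice of the VPC's address range, which Python's standard `ipaddress` module can illustrate. The sketch below assumes a hypothetical 10.0.0.0/16 VPC split into /24 subnets. Whether a subnet is public or private is decided by its route table (a route to an internet gateway), not by its address range, so the "public" and "private" labels here are illustrative only.

```python
import ipaddress

# Hypothetical VPC range; AWS allows VPC CIDR blocks between /16 and /28.
vpc = ipaddress.ip_network("10.0.0.0/16")

# Carve the VPC into /24 subnets and pick one public and one private subnet.
subnets = list(vpc.subnets(new_prefix=24))
public_subnet, private_subnet = subnets[0], subnets[1]

# AWS reserves five addresses in every subnet (the first four and the last one).
AWS_RESERVED_PER_SUBNET = 5


def usable_addresses(subnet: ipaddress.IPv4Network) -> int:
    """Addresses left for resources after the AWS-reserved ones are removed."""
    return subnet.num_addresses - AWS_RESERVED_PER_SUBNET


if __name__ == "__main__":
    print("public:", public_subnet, usable_addresses(public_subnet))
    print("private:", private_subnet, usable_addresses(private_subnet))
```

The same carving works for the four per-user subnets in the first question: take four non-overlapping /24 blocks and create each one in a different Availability Zone.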

Related Questions

Question : You are designing a multi-platform web application for AWS. The application will run on EC2 instances and will be accessed from PCs, tablets, and smartphones. Supported accessing platforms are Windows, macOS, iOS, and Android. Separate sticky session and SSL certificate setups are required for different platform types. Which of the following describes the most cost-effective and performance-efficient architecture setup?

1. Set up a hybrid architecture to handle session state and SSL certificates on-premises, with separate EC2 instance groups running web applications for different platform types in a VPC.

2. Set up one ELB for all platforms to distribute load among multiple instances under it. Each EC2 instance implements all functionality for a particular platform.

3. … ELB handles session stickiness for all platforms; for each ELB, run separate EC2 instance groups to handle the web application for each platform.

4. Assign multiple ELBs to an EC2 instance or group of EC2 instances running the common components of the web application, one ELB for each platform type. Session stickiness and SSL termination are done at the ELBs.

Question : You are implementing a URL whitelisting system for a company that wants to restrict outbound HTTP/S connections to specific domains from their EC2-hosted applications. You deploy a single EC2 instance running proxy software and configure it to accept traffic from all subnets and EC2 instances in the VPC. You configure the proxy to only pass through traffic to domains that you define in its whitelist configuration. You have a nightly maintenance window of 10 minutes where all instances fetch new software updates. Each update is about 200MB in size, and there are 500 instances in the VPC that routinely fetch updates. After a few days, you notice that some machines are failing to successfully download some, but not all, of their updates within the maintenance window. The download URLs used for these updates are correctly listed in the proxy's whitelist configuration, and you are able to access them manually using a web browser on the instances. What might be happening? (Choose 2 answers)

A. You are running the proxy on an undersized EC2 instance type so network throughput is not sufficient for all instances to download their updates in time.

B. You have not allocated enough storage to the EC2 instance running the proxy, so the network buffer is filling up, causing some requests to fail.

C. You are running the proxy in a public subnet but have not allocated enough EIPs to support the needed network throughput through the Internet Gateway (IGW)

D. You are running the proxy on an EC2 instance in a private subnet, and its network throughput is being throttled by a NAT running on an undersized EC2 instance.

E. The route table for the subnets containing the affected EC2 instances is not configured to direct network traffic for the software update locations to the proxy.

1. A,B

2. A,D

3. …

4. D,E
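A quick throughput estimate shows why a single proxy can become the bottleneck. The sketch assumes each of the 500 instances downloads one ~200 MB update during the 10-minute window and uses decimal units with no protocol overhead. Under those assumptions the proxy, and any NAT instance in front of it, must sustain roughly 1.3 Gbit/s, which is why an undersized proxy or NAT instance (choices A and D) is a plausible cause.

```python
def required_mbps(instances: int, update_mb: float, window_minutes: float) -> float:
    """Aggregate throughput (megabits per second) needed to finish all downloads in the window.

    Uses decimal units (1 MB = 8 megabits) and assumes every instance downloads one update.
    """
    total_megabits = instances * update_mb * 8
    return total_megabits / (window_minutes * 60)


if __name__ == "__main__":
    # Figures from the question: 500 instances, ~200 MB each, 10-minute window.
    print(f"{required_mbps(500, 200, 10):.1f} Mbit/s")  # prints "1333.3 Mbit/s"
```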

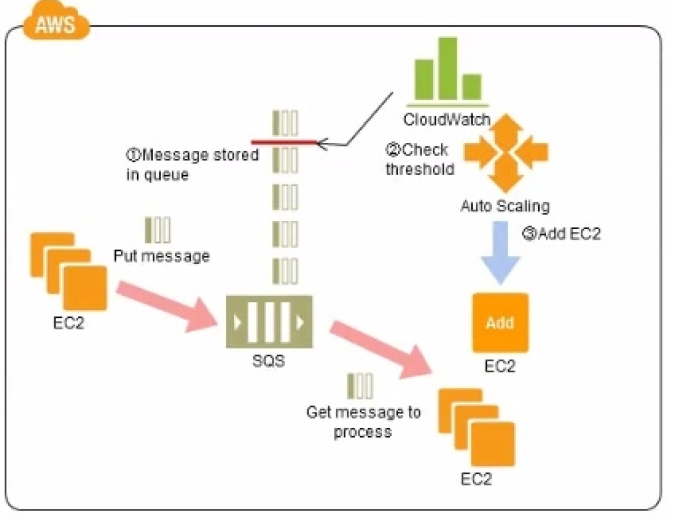

Question : Refer to the architecture diagram above of a batch processing solution using Simple Queue Service (SQS) to set up a message queue between EC2 instances, which are used as batch processors. CloudWatch monitors the number of job requests (queued messages), and an Auto Scaling group adds or deletes batch servers automatically based on parameters set in CloudWatch alarms. You can use this architecture to implement which of the following features in a cost-effective and efficient manner?

1. Reduce the overall time for executing jobs through parallel processing by allowing a busy EC2 instance that receives a message to pass it to the next instance in a daisy-chain setup.

2. Implement fault tolerance against EC2 instance failure, since messages would remain in SQS and work can continue with recovery of EC2 instances. Implement fault tolerance against SQS failure by backing up messages to S3.

3. …

4. Coordinate the number of EC2 instances with the number of job requests automatically, thus improving cost effectiveness.

5. Handle high-priority jobs before lower-priority jobs by assigning a priority metadata field to SQS messages.
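The scaling behaviour described in the question (the feature named in choice 4) boils down to sizing the group from the queue depth. The sketch below is a simplified model, not the Auto Scaling API: it assumes a hypothetical target of 100 queued messages per server and a group bounded between 1 and 20 instances. A real setup would express this through CloudWatch alarms on the queue's `ApproximateNumberOfMessagesVisible` metric and Auto Scaling policies.

```python
import math


def desired_capacity(queue_length: int, messages_per_instance: int,
                     min_size: int, max_size: int) -> int:
    """Number of batch servers needed so each handles at most messages_per_instance jobs.

    The result is clamped to the Auto Scaling group's minimum and maximum size.
    """
    needed = math.ceil(queue_length / messages_per_instance)
    return max(min_size, min(max_size, needed))


if __name__ == "__main__":
    # Hypothetical policy: each server handles about 100 queued jobs, group size 1 to 20.
    for depth in (0, 250, 5000):
        print(depth, "queued ->", desired_capacity(depth, 100, 1, 20), "servers")
```

Because idle capacity shrinks back to the minimum when the queue drains, the group only pays for servers while there is work, which is the cost-effectiveness argument in choice 4.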

Question : Your company currently has a -tier web application running in an on-premises data center. You have experienced several infrastructure failures in the past two months, resulting in significant financial losses. Your CIO strongly supports moving the application to AWS. While working on achieving buy-in from the other company executives, he asks you to develop a disaster recovery plan to help improve business continuity in the short term. He specifies a target Recovery Time Objective (RTO) of 4 hours and a Recovery Point Objective (RPO) of 1 hour or less. He also asks you to implement the solution within 2 weeks. Your database is 200GB in size and you have a 20Mbps Internet connection. How would you do this while minimizing costs?

1. Create an EBS-backed private AMI which includes a fresh install of your application. Set up a script in your data center to back up the local database every hour and to encrypt and copy the resulting file to an S3 bucket using multipart upload.

2. Install your application on a compute-optimized EC2 instance capable of supporting the application's average load. Synchronously replicate transactions from your on-premises database to a database instance in AWS across a secure Direct Connect connection.

3. … availability zones. Asynchronously replicate transactions from your on-premises database to a database instance in AWS across a secure VPN connection.

4. Create an EBS-backed private AMI that includes a fresh install of your application. Develop a CloudFormation template which includes your AMI and the required EC2, Auto Scaling, and ELB resources to support deploying the application across multiple Availability Zones. Asynchronously replicate transactions from your on-premises database to a database instance in AWS across a secure VPN connection.
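The bandwidth figures in the question constrain which approaches are feasible. The sketch below assumes decimal units, a fully utilised 20 Mbit/s link, and no protocol overhead. Under those assumptions a full 200 GB copy takes roughly 22 hours, so shipping a full copy every hour is impossible on this link, while an initial seed followed by asynchronous replication of only the changed transactions may fit within a 1-hour RPO, depending on the change rate.

```python
def transfer_hours(size_gb: float, link_mbps: float) -> float:
    """Hours to push size_gb over a link of link_mbps at 100% utilisation (decimal units)."""
    megabits = size_gb * 1000 * 8
    return megabits / link_mbps / 3600


def link_needed_mbps(size_gb: float, hours: float) -> float:
    """Link speed (Mbit/s) required to move size_gb within the given number of hours."""
    return size_gb * 1000 * 8 / (hours * 3600)


if __name__ == "__main__":
    print(f"Initial 200 GB copy over 20 Mbit/s: {transfer_hours(200, 20):.1f} h")
    print(f"Full 200 GB copy every hour needs: {link_needed_mbps(200, 1):.0f} Mbit/s")
```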

Question : You have a periodic image analysis application that receives some files as input, analyzes them, and, for each file, writes some data to an output text file. The number of input files per day is high and concentrated in a few hours of the day.

Currently you have a server on EC2 with a large EBS volume that hosts the input data and the results. It takes almost 20 hours per day to complete the process.

What services could be used to reduce the elaboration time and improve the availability of the solution?

1. S3 to store I/O files. SQS to distribute elaboration commands to a group of hosts working in parallel. Auto Scaling to dynamically size the group of hosts depending on the length of the SQS queue.

2. EBS with Provisioned IOPS (PIOPS) to store I/O files. SNS to distribute elaboration commands to a group of hosts working in parallel. Auto Scaling to dynamically size the group of hosts depending on the number of SNS notifications.

3. … working in parallel. Auto Scaling to dynamically size the group of hosts depending on the number of SNS notifications.

4. EBS with Provisioned IOPS (PIOPS) to store I/O files. SQS to distribute elaboration commands to a group of hosts working in parallel. Auto Scaling to dynamically size the group of hosts depending on the length of the SQS queue.
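The queue-plus-worker-group pattern in the first and fourth choices can be illustrated locally. In the sketch below, Python's in-process `queue.Queue` stands in for SQS and threads stand in for the EC2 hosts; `analyze` is a hypothetical placeholder for the real image analysis. Every worker pulls from the same shared queue, so adding workers lets more files be processed at the same time, which is what reduces the elaboration time.

```python
import queue
import threading


def analyze(filename: str) -> str:
    # Hypothetical placeholder for the real image-analysis step.
    return f"{filename}: analyzed"


def process_in_parallel(filenames, num_workers: int) -> list:
    """Distribute files through a shared queue to num_workers parallel workers."""
    tasks = queue.Queue()
    for name in filenames:
        tasks.put(name)

    results = []
    lock = threading.Lock()

    def worker():
        # Each worker keeps taking files until the queue is empty.
        while True:
            try:
                name = tasks.get_nowait()
            except queue.Empty:
                return
            output = analyze(name)
            with lock:
                results.append(output)
            tasks.task_done()

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(results)


if __name__ == "__main__":
    files = [f"img{i}.png" for i in range(10)]
    print(process_in_parallel(files, num_workers=4))
```

Unlike this in-process queue, SQS keeps a received message hidden rather than deleted until the consumer deletes it, so a failed host's work becomes visible again for another host.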

Question : Your company runs a customer-facing event registration site. This site is built with a -tier architecture with web and application tier servers and a MySQL database. The application requires 6 web tier servers and 6 application tier servers for normal operation, but can run on a minimum of 65% server capacity and a single MySQL database. When deploying this application in a region with three Availability Zones (AZs), which architecture provides high availability?

1. A web tier deployed across 2 AZs with 3 EC2 (Elastic Compute Cloud) instances in each AZ inside an Auto Scaling Group behind an ELB (Elastic Load Balancer), and an application tier deployed across 2 AZs with 3 EC2 instances in each AZ inside an Auto Scaling Group behind an ELB, and one RDS (Relational Database Service) instance deployed with read replicas in the other AZ.

2. A web tier deployed across 3 AZs with 2 EC2 (Elastic Compute Cloud) instances in each AZ inside an Auto Scaling Group behind an ELB (Elastic Load Balancer), and an application tier deployed across 3 AZs with 2 EC2 instances in each AZ inside an Auto Scaling Group behind an ELB, and one RDS (Relational Database Service) instance deployed with read replicas in the two other AZs.

3. … each AZ inside an Auto Scaling Group behind an ELB (Elastic Load Balancer), and an application tier deployed across 2 AZs with 3 EC2 instances in each AZ inside an Auto Scaling Group behind an ELB, and a Multi-AZ RDS (Relational Database Service) deployment.

4. A web tier deployed across 3 AZs with 2 EC2 (Elastic Compute Cloud) instances in each AZ inside an Auto Scaling Group behind an ELB (Elastic Load Balancer), and an application tier deployed across 3 AZs with 2 EC2 instances in each AZ inside an Auto Scaling Group behind an ELB, and a Multi-AZ RDS (Relational Database Service) deployment.
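The capacity requirement can be checked per tier with simple arithmetic. The sketch assumes the event to survive is the loss of a single AZ: 65% of 6 servers rounds up to 4, so three AZs with two instances each keep 4 servers after an AZ failure, while two AZs with three instances each keep only 3. Database availability is a separate concern: a Multi-AZ RDS deployment provides automatic failover, whereas read replicas alone do not.

```python
import math


def survives_az_loss(azs: int, per_az: int, required: int) -> bool:
    """True if the tier still has at least `required` instances after losing one AZ."""
    return (azs - 1) * per_az >= required


# 65% of the 6 servers each tier needs, rounded up to whole instances.
REQUIRED_PER_TIER = math.ceil(0.65 * 6)  # 3.9 rounds up to 4

if __name__ == "__main__":
    for azs, per_az in ((2, 3), (3, 2)):
        print(f"{azs} AZs x {per_az}:", survives_az_loss(azs, per_az, REQUIRED_PER_TIER))
```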