Cloudera Databricks Data Science Certification Questions and Answers (Dumps and Practice Questions)

Question : PCA analyzes the all the variance in the in the variables and reorganizes it into a new set of

components equal to the number of original variables. Regarding these new variables which of the following statement are correct?

1. They are independent

2. They decrease in the amount of variance in the originals they account for First component captures most of the variance, 2ndsecond most and so on until all the variance is accounted for

3. Access Mostly Uused Products by 50000+ Subscribers

4. Only 1 and 3

5. All 1,2 and 3

Correct Answer : Get Lastest Questions and Answer :

Conceptually the goal of PCA is to reduce the number of variables of interest into a smaller set of components.

PCA analyzes the all the variance in the in the variables and reorganizes it into a new set of components equal to the number of original variables.

Regarding the new components:

- They are independent

- They decrease in the amount of variance in the originals they account forFirst component captures most of the variance, 2ndsecond most and so on until all the variance is accounted for

- Only some will be retained for further study (dimension reduction)Since the first few capture most of the variance they are typically of focus

Question : PCA is a parametric method of extracting relevant information form confusing data sets.

1. True

2. False

Correct Answer : Get Lastest Questions and Answer :

Principal component analysis (PCA) has been called one of the most valuable results from applied linear algebra. PCA is used abundantly in all forms of analysis - from neuroscience to computer graphics - because it is a simple, non-parametric method of extracting relevant information from confusing data sets. With minimal additional effort PCA provides a roadmap for how to reduce a complex data set to a lower dimension to reveal the sometimes hidden, simplified dynamics that often underlie it.

Question : In Supervised Learning you have performed the following steps

1. Determine the type of training examples

2. Gather a training set. The training set needs to be representative of the real-world use of the function

3. Access Mostly Uused Products by 50000+ Subscribers

4. Determine the structure of the learned function and corresponding learning algorithm,

5. Complete the design. Run the learning algorithm on the gathered training set

6.Evaluate the accuracy of the learned function.

In the 4th step which of the following algorithm you can apply

1. Support Vector Machine

2. Decision trees

3. Access Mostly Uused Products by 50000+ Subscribers

4. 1 and 2 only

5. All 1,2 and 3

Correct Answer : Get Lastest Questions and Answer :

In order to solve a given problem of supervised learning, one has to perform the following steps:

1.Determine the type of training examples. Before doing anything else, the user should decide what kind of data is to be used as a training set. In the case of handwriting analysis, for example, this might be a single handwritten character, an entire handwritten word, or an entire line of handwriting.

2.Gather a training set. The training set needs to be representative of the real-world use of the function. Thus, a set of input objects is gathered and corresponding outputs are also gathered, either from human experts or from measurements.

3. Access Mostly Uused Products by 50000+ Subscribers

4.Determine the structure of the learned function and corresponding learning algorithm. For example, the engineer may choose to use support vector machines or decision trees.

5.Complete the design. Run the learning algorithm on the gathered training set. Some supervised learning algorithms require the user to determine certain control parameters. These parameters may be adjusted by optimizing performance on a subset (called a validation set) of the training set, or via cross-validation.

6.Evaluate the accuracy of the learned function. After parameter adjustment and learning, the performance of the resulting function should be measured on a test set that is separate from the training set.

Related Questions

Question :Select the correct Hive function which will Returns the population covariance of a pair of numeric columns in the group.

1. covar_pop(col1, col2)

2. corr(col1, col2)

3. Access Mostly Uused Products by 50000+ Subscribers

4. 1 and 2

5. All 1,2 and 3

Question : Which of the following Hive function Returns the standard deviation of a numeric column in the group

1. stddev_pop(col)

2. stddev_samp(col)

3. Access Mostly Uused Products by 50000+ Subscribers

4. dev_pop(col)

Question : You have two tables in Hive that are populated with data:

Employee

emp_id int

salary string

Employee_Detail;

emp_id int

name string

You now create a new table de-normalized one and populate it with the results of joining the two tables as follows:

CREATE TABLE EMPLOYEE_FULL AS SELECT Employee_Detail.*,Employee.salary AS s

FROM Employee JOIN Employee_Detail ON (Employee.emp_id== Employee_Detail.emp_id);

You then export the table and download the file:

EXPORT TABLE EMPLOYEE_FULL TO '/hadoopexam/employee/Employee_Detail.data';

You have downloaded the file and read the file as a CSV in R. How many columns will the resulting variable in R have?

1. 1

2. 2

3. Access Mostly Uused Products by 50000+ Subscribers

4. 4

5. 5

Ans : 1

Exp :The Stinger Initiative successfully delivered a fundamental new Apache Hive, which evolved Hive's traditional architecture and made it faster, with richer SQL semantics and petabyte scalability. We continue to work within the community to advance these three key facets of hive: Speed Deliver sub-second query response times. Scale : The only SQL interface to Hadoop designed for queries that scale from Gigabytes, to Terabytes and Petabytes

SQL : Enable transactions and SQL:2011 Analytics for Hive

Stinger.next is focused on the vision of delivering enterprise SQL at Hadoop scale, accelerating the production deployment of Hive for interactive analytics, reporting and and ETL. More explicitly, some of the key areas that we will invest in include: When exporting a table from Hive, the data file will use the delimiters form the table. Because table3 wasn't created with specific delimiters, it will use the default Hive delimiter, which is \001 or Control-A. When the file is imported into R as a CSV, there will be only 1 column because the file isn't actually comma delimited.adoop was built to organize and store massive amounts of data of all shapes, sizes and formats. Because of Hadoop's "schema on read" architecture, a Hadoop cluster is a perfect reservoir of heterogeneous data-structured and unstructured-from a multitude of sources. Data analysts use Hive to explore, structure and analyze that data, then turn it into business insight.

Here are some advantageous characteristics of Hive for enterprise SQL in Hadoop:

Feature Description, Familiar , Query data with a SQL-based language , Fast Interactive response times, even over huge datasets Scalable and Extensible

As data variety and volume grows, more commodity machines can be added, without a corresponding reduction in performance. The tables in Hive are similar to tables in a relational database, and data units are organized in a taxonomy from larger to more granular units. Databases are comprised of tables, which are made up of partitions. Data can be accessed via a simple query language and Hive supports overwriting or appending data. Within a particular database, data in the tables is serialized and each table has a corresponding Hadoop Distributed File System (HDFS) directory. Each table can be sub-divided into partitions that determine how data is distributed within sub-directories of the table directory. Data within partitions can be further broken down into buckets. Hive supports all the common primitive data formats such as BIGINT, BINARY, BOOLEAN, CHAR, DECIMAL, DOUBLE, FLOAT, INT, SMALLINT, STRING, TIMESTAMP, and TINYINT. In addition, analysts can combine primitive data types to form complex data types, such as structs, maps and arrays.

Question :You have a HadoopExam Log files directory containing a number of comma files.

Each of the files has three columns and each of the filenames has a .log extension like 12012014.log, 13012014.log, 14012014.log etc.

You want a single comma-separated file (with extension .csv) that contains all the rows from

all the files that do not contain the word "HadoopExam", case-sensitive.

1. cat *.log | grep -v HadoopExam > final.csv

2. find . -name '*.log' -print0 | xargs -0 cat | awk 'BEGIN {print $1, $2, $3}' | grep -v HadoopExam > final.csv

3. Access Mostly Uused Products by 50000+ Subscribers

4. grep HadoopExam *.log > final.csv

Ans: 2

Exp : There are three variations of AWK: AWK - the (very old) original from AT&T NAWK - A newer, improved version from AT&T

GAWK - The Free Software foundation's version , The essential organization of an AWK program follows the form: pattern { action }

The pattern specifies when the action is performed. Like most UNIX utilities, AWK is line oriented. That is, the pattern specifies a test that is performed with each line read as input. If the condition is true, then the action is taken. The default pattern is something that matches every line. This is the blank or null pattern. Two other important patterns are specified by the keywords "BEGIN" and "END". As you might expect, these two words specify actions to be taken before any lines are read, and after the last line is read. The AWK program below:

BEGIN { print "START" } { print } END { print "STOP" }

adds one line before and one line after the input file. This isn't very useful, but with a simple change, we can make this into a typical AWK program:

BEGIN { print "File\tOwner"} { print $8, "\t", $3} END { print " - DONE -" } I'll improve the script in the next sections, but we'll call it "FileOwner". But let's not put it into a script or file yet. I will cover that part in a bit. Hang on and follow with me so you get the flavor of AWK. The characters "\t" Indicates a tab character so the output lines up on even boundries. The "$8" and "$3" have a meaning similar to a shell script. Instead of the eighth and third argument, they mean the eighth and third field of the input line. You can think of a field as a column, and the action you specify operates on each line or row read in. There are two differences between AWK and a shell processing the characters within double quotes. AWK understands special characters follow the "\" character like "t". The Bourne and C UNIX shells do not. Also, unlike the shell (and PERL) AWK does not evaluate variables within strings. To explain, the second line could not be written like this: {print "$8\t$3" } That example would print "$8 $3". Inside the quotes, the dollar sign is not a special character. Outside, it corresponds to a field. What do I mean by the third and eight field? Consider the Solaris "/usr/bin/ls -l" command, which has eight columns of information. The System V version (Similar to the Linux version), "/usr/5bin/ls -l" has 9 columns. The third column is the owner, and the eighth (or nineth) column in the name of the file. This AWK program can be used to process the output of the "ls -l" command, printing out the filename, then the owner, for each file. I'll show you how. Update: On a linux system, change "$8" to "$9". One more point about the use of a dollar sign. In scripting languages like Perl and the various shells, a dollar sign means the word following is the name of the variable. Awk is different. The dollar sign means that we are refering to a field or column in the current line. When switching between Perl and AWK you must remener that "$" has a different meaning. So the following piece of code prints two "fields" to standard out. The first field printed is the number "5", the second is the fifth field (or column) on the input line. BEGIN { x=5 } { print x, $x}

Originally, I didn't plan to discuss NAWK, but several UNIX vendors have replaced AWK with NAWK, and there are several incompatibilities between the two. It would be cruel of me to not warn you about the differences. So I will highlight those when I come to them. It is important to know than all of AWK's features are in NAWK and GAWK. Most, if not all, of NAWK's features are in GAWK. NAWK ships as part of Solaris. GAWK does not. However, many sites on the Internet have the sources freely available. If you user Linux, you have GAWK. But in general, assume that I am talking about the classic AWK unless otherwise noted.

You can only specify a small number of files to a command like cat, so globbing 100,000 files will cause an error. In this instance, you must use find and xargs. The grep flag should be -v to invert the sense of matching. The -i ignores the case. You switch the delimiter with awk, though tr works also.

Question : In recommender systems, the ___________ problem is often reduced by adopting a hybrid approach

between content-based matching and collaborative filtering. New items (which have not yet received any

ratings from the community) would be assigned a rating automatically, based on the ratings assigned by

the community to other similar items. Item similarity would be determined according to the items' content-based characteristics.

1. hot-start

2. cold start

3. Access Mostly Uused Products by 50000+ Subscribers

4. Automated rating

Ans : 2

Exp :

Question : The cold start" problem happens in recommendation systems due to

1. Huge volume of data to analyze

2. Very small amount of data to analyze

3. Access Mostly Uused Products by 50000+ Subscribers

4. Information is enough but less memory

Ans :3

Exp : The cold start" problem happens in recommendation systems due to the lack of information, on users or items.

Question : Select the correct statement which applies to Collaborative filtering

1. Make recommendation based on past user-item interaction

2. Better performance for old users and old items

3. Access Mostly Uused Products by 50000+ Subscribers

4. 1 and 2 are correct

5. All 1,2 and 3 are correct

Ans : 5

Exp : Collaborative filtering (CF aka Memory based)

Make recommendation based on past user-item interaction

Better performance for old users and old items

Does not naturally handle new users and new items

Question : You are creating a model for the recommending the book at Amazon.com, so which of the following

recommender system you will use you don't have cold start problem?

1. Naive Bayes classifier

2. K-Means Clustring

3. Access Mostly Uused Products by 50000+ Subscribers

4. Content-based filtering

Ans : 4

Exp : The cold start problem is most prevalent in recommender systems. Recommender systems form a specific type of information filtering (IF) technique that attempts to present information items (movies, music, books, news, images, web pages) that are likely of interest to the user. Typically, a recommender system compares the user's profile to some reference characteristics. These characteristics may be from the information item (the content-based approach) or the user's social environment (the collaborative filtering approach). In the content-based approach, the system must be capable of matching the characteristics of an item against relevant features in the user's profile. In order to do this, it must first construct a sufficiently-detailed model of the user's tastes and preferences through preference elicitation. This may be done either explicitly (by querying the user) or implicitly (by observing the user's behaviour). In both cases, the cold start problem would imply that the user has to dedicate an amount of effort using the system in its 'dumb' state - contributing to the construction of their user profile - before the system can start providing any intelligent recommendations. Content-based filtering recommender systems use information about items or users to make recommendations, rather than user preferences, so it will perform well with little user preference data. Item-based and user-based collaborative filtering makes predictions based on users' preferences for items, os they will typically perform poorly with little user preference data. Logistic regression is not recommender system technique. Content-based filtering, also referred to as cognitive filtering, recommends items based on a comparison between the content of the items and a user profile. The content of each item is represented as a set of descriptors or terms, typically the words that occur in a document. The user profile is represented with the same terms and built up by analyzing the content of items which have been seen by the user.

Several issues have to be considered when implementing a content-based filtering system. First, terms can either be assigned automatically or manually. When terms are assigned automatically a method has to be chosen that can extract these terms from items. Second, the terms have to be represented such that both the user profile and the items can be compared in a meaningful way. Third, a learning algorithm has to be chosen that is able to learn the user profile based on seen items and can make recommendations based on this user profile. The information source that content-based filtering systems are mostly used with are text documents. A standard approach for term parsing selects single words from documents. The vector space model and latent semantic indexing are two methods that use these terms to represent documents as vectors in a multi dimensional space. Relevance feedback, genetic algorithms, neural networks, and the Bayesian classifier are among the learning techniques for learning a user profile. The vector space model and latent semantic indexing can both be used by these learning methods to represent documents. Some of the learning methods also represent the user profile as one or more vectors in the same multi dimensional space which makes it easy to compare documents and profiles. Other learning methods such as the Bayesian classifier and neural networks do not use this space but represent the user profile in their own way.

The efficiency of a learning method does play an important role in the decision of which method to choose. The most important aspect of efficiency is the computational complexity of the algorithm, although storage requirements can also become an issue as many user profiles have to be maintained. Neural networks and genetic algorithms are usually much slower compared to other learning methods as several iterations are needed to determine whether or not a document is relevant. Instance based methods slow down as more training examples become available because every example has to be compared to all the unseen documents. Among the best performers in terms of speed are the Bayesian classifier and relevance feedback.

The ability of a learning method to adapt to changes in the user's preferences also plays an important role. The learning method has to be able to evaluate the training data as instances do not last forever but become obsolete as the user's interests change. Another criteria is the number of training instances needed. A learning method that requires many training instances before it is able to make accurate predictions is only useful when the user's interests remain constant for a long period of time. The Bayesian classifier does not do well here. There are many training instances needed before the probabilities will become accurate enough to base a prediction on. Conversely, a relevance feedback method and a nearest neighbor method that uses a notion of distance can start making suggestions with only one training instance.

Learning methods also differ in their ability to modulate the training data as instances age. In the nearest neighbor method and in a genetic algorithm old training instances will have to be removed entirely. The user models employed by relevance feedback methods and neural networks can be adjusted more smoothly by reducing weights of corresponding terms or nodes. The learning methods applied to content-based filtering try to find the most relevant documents based on the user's behavior in the past. Such approach however restricts the user to documents similar to those already seen. This is known as the over-specialization problem. As stated before the interests of a user are rarely static but change over time. Instead of adapting to the user's interests after the system has received feedback one could try to predict a user's interests in the future and recommend documents that contain information that is entirely new to the user.

A recommender system has to decide between two types of information delivery when providing the user with recommendations:

Exploitation. The system chooses documents similar to those for which the user has already expressed a preference.

Exploration. The system chooses documents where the user profile does not provide evidence to predict the user's reaction.

Question : Stories appear in the front page of Digg as they are "voted up" (rated positively) by the community.

As the community becomes larger and more diverse, the promoted stories can better reflect the average

interest of the community members. Which of the following technique is used to make such recommendation engine?

1. Naive Bayes classifier

2. Collaborative filtering

3. Access Mostly Uused Products by 50000+ Subscribers

4. Content-based filtering

Ans : 2

Exp : Collaborative filtering, also referred to as social filtering, filters information by using the recommendations of other people. It is based on the idea that people who agreed in their evaluation of certain items in the past are likely to agree again in the future. A person who wants to see a movie for example, might ask for recommendations from friends. The recommendations of some friends who have similar interests are trusted more than recommendations from others. This information is used in the decision on which movie to see. Most collaborative filtering systems apply the so called neighborhood-based technique. In the neighborhood-based approach a number of users is selected based on their similarity to the active user. A prediction for the active user is made by calculating a weighted average of the ratings of the selected users. To illustrate how a collaborative filtering system makes recommendations consider the example in movie ratings table below. This shows the ratings of five movies by five people. A "+" indicates that the person liked the movie and a "-" indicates that the person did not like the movie. To predict if Ken would like the movie "Fargo", Ken's ratings are compared to the ratings of the others. In this case the ratings of Ken and Mike are identical and because Mike liked Fargo, one might predict that Ken likes the movie as well.One scenario of collaborative filtering application is to recommend interesting or popular information as judged by the community. As a typical example, stories appear in the front page of Digg as they are "voted up" (rated positively) by the community. As the community becomes larger and more diverse, the promoted stories can better reflect the average interest of the community members. Selecting Neighbourhod : Many collaborative filtering systems have to be able to handle a large number of users. Making a prediction based on the ratings of thousands of people has serious implications for run-time performance. Therefore, when the number of users reaches a certain amount a selection of the best neighbors has to be made. Two techniques, correlation-thresholding and best-n-neighbor, can be used to determine which neighbors to select. The first technique selects only those neighbors who's correlation is greater than a given threshold. The second technique selects the best n neighbors with the highest correlation.

Question :

You are designing a recommendation engine for a website where the ability to generate

more personalized recommendations by analyzing information from the past activity of a specific user,

or the history of other users deemed to be of similar taste to a given user. These resources are used

as user profiling and helps the site recommend content on a user-by-user basis.

The more a given user makes use of the system, the better the recommendations become,

as the system gains data to improve its model of that user. What kind of this recommendation engine is ?

1. Naive Bayes classifier

2. Collaborative filtering

3. Access Mostly Uused Products by 50000+ Subscribers

4. Content-based filtering

Ans : 2

Exp : Collaborative filtering, also referred to as social filtering, filters information by using the recommendations of other people. It is based on the idea that people who agreed in their evaluation of certain items in the past are likely to agree again in the future. A person who wants to see a movie for example, might ask for recommendations from friends. The recommendations of some friends who have similar interests are trusted more than recommendations from others. This information is used in the decision on which movie to see. Most collaborative filtering systems apply the so called neighborhood-based technique. In the neighborhood-based approach a number of users is selected based on their similarity to the active user. A prediction for the active user is made by calculating a weighted average of the ratings of the selected users. To illustrate how a collaborative filtering system makes recommendations consider the example in movie ratings table below. This shows the ratings of five movies by five people. A "+" indicates that the person liked the movie and a "-" indicates that the person did not like the movie. To predict if Ken would like the movie "Fargo", Ken's ratings are compared to the ratings of the others. In this case the ratings of Ken and Mike are identical and because Mike liked Fargo, one might predict that Ken likes the movie as well.One scenario of collaborative filtering application is to recommend interesting or popular information as judged by the community. As a typical example, stories appear in the front page of Digg as they are "voted up" (rated positively) by the community. As the community becomes larger and more diverse, the promoted stories can better reflect the average interest of the community members. Selecting Neighbourhod : Many collaborative filtering systems have to be able to handle a large number of users. Making a prediction based on the ratings of thousands of people has serious implications for run-time performance. Therefore, when the number of users reaches a certain amount a selection of the best neighbors has to be made. Two techniques, correlation-thresholding and best-n-neighbor, can be used to determine which neighbors to select. The first technique selects only those neighbors who's correlation is greater than a given threshold. The second technique selects the best n neighbors with the highest correlation.Another aspect of collaborative filtering systems is the ability to generate more personalized recommendations by analyzing information from the past activity of a specific user, or the history of other users deemed to be of similar taste to a given user. These resources are used as user profiling and help the site recommend content on a user-by-user basis. The more a given user makes use of the system, the better the recommendations become, as the system gains data to improve its model of that user

Question :

As a data scientist consultant at HadoopExam.com, you are working on a recommendation

engine for the learning resources for end user. So Which recommender system technique benefits most from additional user preference data?

1. Naive Bayes classifier

2. Item-based collaborative filtering

3. Access Mostly Uused Products by 50000+ Subscribers

4. Logistic Regression

Ans : 2

Exp : Item-based scales with the number of items, and user-based scales with the number of users you have. If you have something like a store, you'll have a few thousand items at the most. The biggest stores at the time of writing have around 100,000 items. In the Netflix competition, there were 480,000 users and 17,700 movies. If you have a lot of users, then you'll probably want to go with item-based similarity. For most product-driven recommendation engines, the number of users outnumbers the number of items. There are more people buying items than unique items for sale. Item-based collaborative filtering makes predictions based on users preferences for items. More preference data should be beneficial to this type of algorithm. Content-based filtering recommender systems use information about items or users, and not user preferences, to make recommendations. Logistic Regression, Power iteration and a Naive Bayes classifier are not recommender system techniques. Collaborative filtering, also referred to as social filtering, filters information by using the recommendations of other people. It is based on the idea that people who agreed in their evaluation of certain items in the past are likely to agree again in the future. A person who wants to see a movie for example, might ask for recommendations from friends. The recommendations of some friends who have similar interests are trusted more than recommendations from others. This information is used in the decision on which movie to see. Most collaborative filtering systems apply the so called neighborhood-based technique. In the neighborhood-based approach a number of users is selected based on their similarity to the active user. A prediction for the active user is made by calculating a weighted average of the ratings of the selected users. To illustrate how a collaborative filtering system makes recommendations consider the example in movie ratings table below. This shows the ratings of five movies by five people. A "+" indicates that the person liked the movie and a "-" indicates that the person did not like the movie. To predict if Ken would like the movie "Fargo", Ken's ratings are compared to the ratings of the others. In this case the ratings of Ken and Mike are identical and because Mike liked Fargo, one might predict that Ken likes the movie as well.One scenario of collaborative filtering application is to recommend interesting or popular information as judged by the community. As a typical example, stories appear in the front page of Digg as they are "voted up" (rated positively) by the community. As the community becomes larger and more diverse, the promoted stories can better reflect the average interest of the community members. Selecting Neighbourhod : Many collaborative filtering systems have to be able to handle a large number of users. Making a prediction based on the ratings of thousands of people has serious implications for run-time performance. Therefore, when the number of users reaches a certain amount a selection of the best neighbors has to be made. Two techniques, correlation-thresholding and best-n-neighbor, can be used to determine which neighbors to select. The first technique selects only those neighbors who's correlation is greater than a given threshold. The second technique selects the best n neighbors with the highest correlation.

Question :

While working with Netflix the movie rating websites you have developed a recommender system that has produced ratings

predictions for your data set that are consistently exactly 1 higher for the user-item pairs in your dataset than the

ratings given in the dataset. There are n items in the dataset. What will be the calculated RMSE of your recommender system on the dataset?

1. 1

2. 2

3. Access Mostly Uused Products by 50000+ Subscribers

4. n

5. n/2

Ans :1

Exp : The root-mean-square deviation (RMSD) or root-mean-square error (RMSE) is a frequently used measure of the differences between values predicted by a model or an estimator and the values actually observed. Basically, the RMSD represents the sample standard deviation of the differences between predicted values and observed values. These individual differences are called residuals when the calculations are performed over the data sample that was used for estimation, and are called prediction errors when computed out-of-sample. The RMSD serves to aggregate the magnitudes of the errors in predictions for various times into a single measure of predictive power. RMSD is a good measure of accuracy, but only to compare forecasting errors of different models for a particular variable and not between variables, as it is scale-dependent. RMSE is calculated as the square root of the mean of the squares of the errors. The error in every case in this example is 1. The square of 1 is 1 The average of n items with value 1 is 1 The square root of 1 is 1 The RMSE is therefore 1

Question :

Select the correct statement which applies to MAE

1. The MAE measures the average magnitude of the errors in a set of forecasts, without considering their direction

2. The MAE measures the average magnitude of the errors in a set of forecasts, with considering their direction

3. Access Mostly Uused Products by 50000+ Subscribers

4. It measures accuracy for discrete variables

5. The MAE is a linear score which means that all the individual differences are weighted equally in the average.

6. The MAE is a non-linear score which means that all the individual differences are weighted equally in the average.

1. 1,3,5

2. 2,4,6

3. Access Mostly Uused Products by 50000+ Subscribers

4. 1,3,6

5. None of the above

Ans : 1

Exp : Mean absolute error (MAE)

The MAE measures the average magnitude of the errors in a set of forecasts, without considering their direction. It measures accuracy for continuous variables. The equation is given in the library references. Expressed in words, the MAE is the average over the verification sample of the absolute values of the differences between forecast and the corresponding observation. The MAE is a linear score which means that all the individual differences are weighted equally in the average.

Question :

You read that a set of temperature forecasts shows a MAE of 1.5 degrees and a RMSE of 2.5 degrees. What does this mean? Choose the best answer:

1. There is some variation in the magnitude of the errors

2. Very large errors are unlikely to have occured

3. Access Mostly Uused Products by 50000+ Subscribers

4. All of the above

Question :

Select the correct statement for AUC which is a commonly used evaluation method in measuring the accuracy and quality of a recommender system

1. is a commonly used evaluation method for binary choice problems,

2. It involves classifying an instance as either positive or negative

3. Access Mostly Uused Products by 50000+ Subscribers

4. 1 and 2 only

5. All 1,2 and 3

Ans :4

Exp : AUC is a commonly used evaluation method for binary choice problems, which involve classifying an instance as either positive or negative. Its main advantages over other evaluation methods, such as the simpler misclassification error, are:

1. It's insensitive to unbalanced datasets (datasets that have more installeds than not-installeds or vice versa).

2. For other evaluation methods, a user has to choose a cut-off point above which the target variable is part of the positive class (e.g. a logistic regression model returns any real number between 0 and 1 - the modeler might decide that predictions greater than 0.5 mean a positive class prediction while a prediction of less than 0.5 mean a negative class prediction). AUC evaluates entries at all cut-off points, giving better insight into how well the classifier is able to separate the two classes.

Question : You have created a recommender system for QuickTechie.com website, where you recommend the Software professional

based on some parameters like technologies, location, companies etc. Now but you have little doubt that this model is not

giving proper recommendation as Rahul is working on Hadoop in Mumbai and John from france is working on UI application created in flash,

are recommended as a similar professional, which is not correct. Select the correct option which will be helpful to measure the accuracy and quality of a recommender system you created for QuickTechie.com?

1. Cluster Density

2. Support Vector Count

3. Access Mostly Uused Products by 50000+ Subscribers

4. Sum of Absolute Errors

Ans 3

Exp :The MAE measures the average magnitude of the errors in a set of forecasts, without considering their direction. It measures accuracy for continuous variables. The equation is given in the library references. Expressed in words, the MAE is the average over the verification sample of the absolute values of the differences between forecast and the corresponding observation. The MAE is a linear score which means that all the individual differences are weighted equally in the average.

The sum of absolute errors is a valid metric, but doesn't give any useful sense of how the recommender system is performing.

Support vector count and cluster density do not apply to recommender systems.

MAE and AUC are both valid and useful metrics for measuring recommender systems. Mean absolute error (MAE)

The MAE measures the average magnitude of the errors in a set of forecasts, without considering their direction. It measures accuracy for continuous variables. The equation is given in the library references. Expressed in words, the MAE is the average over the verification sample of the absolute values of the differences between forecast and the corresponding observation. The MAE is a linear score which means that all the individual differences are weighted equally in the average.

Root mean squared error (RMSE) : The RMSE is a quadratic scoring rule which measures the average magnitude of the error. The equation for the RMSE is given in both of the references. Expressing the formula in words, the difference between forecast and corresponding observed values are each squared and then averaged over the sample. Finally, the square root of the average is taken. Since the errors are squared before they are averaged, the RMSE gives a relatively high weight to large errors. This means the RMSE is most useful when large errors are particularly undesirable.

The MAE and the RMSE can be used together to diagnose the variation in the errors in a set of forecasts. The RMSE will always be larger or equal to the MAE; the greater difference between them, the greater the variance in the individual errors in the sample. If the RMSE=MAE, then all the errors are of the same magnitude

Question : You have created a recommender system for QuickTechie.com website, where you recommend the Software professional

based on some parameters like technologies, location, companies etc. Now but you have little doubt that this model is not

giving proper recommendation as Rahul is working on Hadoop in Mumbai and John from france is working on UI application created in flash,

are recommended as a similar professional, which is not correct. Select the correct option which will be helpful to measure the accuracy and quality of a recommender system you created for QuickTechie.com?

1. Cluster Density

2. Support Vector Count

3. Access Mostly Uused Products by 50000+ Subscribers

4. Sum of Absolute Errors

Ans : 3

Exp : AUC is a commonly used evaluation method for binary choice problems, which involve classifying an instance as either positive or negative. Its main advantages over other evaluation methods, such as the simpler misclassification error, are:

1. It's insensitive to unbalanced datasets (datasets that have more installeds than not-installeds or vice versa).

2. For other evaluation methods, a user has to choose a cut-off point above which the target variable is part of the positive class (e.g. a logistic regression model returns any real number between 0 and 1 - the modeler might decide that predictions greater than 0.5 mean a positive class prediction while a prediction of less than 0.5 mean a negative class prediction). AUC evaluates entries at all cut-off points, giving better insight into how well the classifier is able to separate the two classes.

The MAE measures the average magnitude of the errors in a set of forecasts, without considering their direction. It measures accuracy for continuous variables. The equation is given in the library references. Expressed in words, the MAE is the average over the verification sample of the absolute values of the differences between forecast and the corresponding observation. The MAE is a linear score which means that all the individual differences are weighted equally in the average.

The sum of absolute errors is a valid metric, but doesn't give any useful sense of how the recommender system is performing.

Support vector count and cluster density do not apply to recommender systems.

MAE and AUC are both valid and useful metrics for measuring recommender systems.

Question :

Scatetr plots provide the following information about the relationship between two variables

1. Strength

2. Shape - linear, curved, etc.

3. Access Mostly Uused Products by 50000+ Subscribers

4. Presence of outliers

1. 1,2,3

2. 1,3,4

3. Access Mostly Uused Products by 50000+ Subscribers

4. 2,3,4

5. All 1,2,3,4

Ans :5

Exp : Scatter plots show the relationship between two variables by displaying data points on a two-dimensional graph. The variable that might be considered an explanatory variable is plotted on the x axis, and the response variable is plotted on the y axis.

Scatter plots are especially useful when there are a large number of data points. They provide the following information about the relationship between two variables

Strength

Shape - linear, curved, etc.

Direction - positive or negative

Presence of outliers

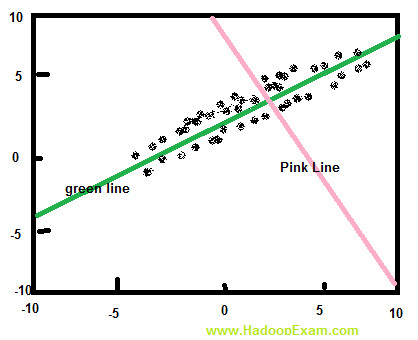

A correlation between the variables results in the clustering of data points along a line. The following is an example of a scatter plot suggestive of a positive linear relationship.

Question : You are given a data set that contains information about tv advertisement placed between and of Zee News Channel

(Total Asia continent information). With the following detailed information.

Advertisement duration, Cost rate per minute of Advertissement, Country of the Advertisers, City from which addvertiser

Country to which advertise needs to be shown., City to which advertise needs to be shown., Month total advertisement

Days (of month) advertisement shown, Total hourds for which advertisement shown. , Total Minutes for which advertisement shown.

From the data set you can determine the frequencies of all the advertisement shown in Asia continent. For example, between 1990 and 2014,

500 advertisement were given from China to Shown in India, While 2000 advertisement given by Russia to shown in Japan.

Now you want to draw the pictue which shows the relation between Ad duration and cost per Minute, which technique you feel would be better.

1. Scatter plot

2. Tree map

3. Access Mostly Uused Products by 50000+ Subscribers

4. Box plot

5. Bar chart

Ans : 1

Exp : A scatter plot, scatterplot, or scattergraph is a type of mathematical diagram using Cartesian coordinates to display values for two variables for a set of data. The data is displayed as a collection of points, each having the value of one variable determining the position on the horizontal axis and the value of the other variable determining the position on the vertical axis. This kind of plot is also called a scatter chart, scattergram, scatter diagram, or scatter graph.

A heat map is a two-dimensional representation of data in which values are represented by colors. A simple heat map provides an immediate visual summary of information. More elaborate heat maps allow the viewer to understand complex data sets. Another type of heat map, which is often used in business, is sometimes referred to as a tree map. This type of heat map uses rectangles to represent components of a data set. The largest rectangle represents the dominant logical division of data and smaller rectangles illustrate other sub-divisions within the data set. The color and size of the rectangles on this type of heat map can correspond to two different values, allowing the viewer to perceive two variables at once. Tree maps are often used for budget proposals, stock market analysis, risk management, project portfolio analysis, market share analysis, website design and network management. In descriptive statistics, a box plot or boxplot is a convenient way of graphically depicting groups of numerical data through their quartiles. Box plots may also have lines extending vertically from the boxes (whiskers) indicating variability outside the upper and lower quartiles, hence the terms box-and-whisker plot and box-and-whisker diagram. Outliers may be plotted as individual points. To visualize correlations between two variables, a scatter plot is typically the best choice. By plotting the data on a scatter plot, you can easily see any trends in the correlation, such as a linear relationship, a log normal relationship, or a polynomial relationship. A heat map uses three dimensions and so would be a poor choice for this purpose. Box plots, bar charts, and tree maps do not provide the kind of uniform special mapping of the data onto the graph that is required to see trends.

Question :

Which of the following provide the kind of uniform special mapping of the data onto the graph that is required to see trends.

1. Box plots

2. Bar charts

3. Access Mostly Uused Products by 50000+ Subscribers

4. All 1,2 and 3

5. None of 1,2 and 3

Ans 5

Exp : Box Plots:

In descriptive statistics, a box plot or boxplot is a convenient way of graphically depicting groups of numerical data through their quartiles. Box plots may also have lines extending vertically from the boxes (whiskers) indicating variability outside the upper and lower quartiles, hence the terms box-and-whisker plot and box-and-whisker diagram. Outliers may be plotted as individual points.

Box plots display differences between populations without making any assumptions of the underlying statistical distribution: they are non-parametric. The spacings between the different parts of the box help indicate the degree of dispersion (spread) and skewness in the data, and identify outliers. In addition to the points themselves, they allow one to visually estimate various L-estimators, notably the interquartile range, midhinge, range, mid-range, and trimean. Boxplots can be drawn either horizontally or vertically.

A heat map is a two-dimensional representation of data in which values are represented by colors. A simple heat map provides an immediate visual summary of information. More elaborate heat maps allow the viewer to understand complex data sets.

In the United States, many people are familiar with heat maps from viewing television news programs. During a presidential election, for instance, a geographic heat map with the colors red and blue will quickly inform the viewer which states each candidate has won.

Another type of heat map, which is often used in business, is sometimes referred to as a tree map. This type of heat map uses rectangles to represent components of a data set. The largest rectangle represents the dominant logical division of data and smaller rectangles illustrate other sub-divisions within the data set. The color and size of the rectangles on this type of heat map can correspond to two different values, allowing the viewer to perceive two variables at once. Tree maps are often used for budget proposals, stock market analysis, risk management, project portfolio analysis, market share analysis, website design and network management.

Question : You are given a data set that contains information about tv advertisement placed between and of Zee News Channel

(Total Asia continent information). With the following detailed information.

Advertisement duration, Cost rate per minute of Advertissement, Country of the Advertisers, City from which addvertiser

Country to which advertise needs to be shown., City to which advertise needs to be shown., Month total advertisement

Days (of month) advertisement shown, Total hourds for which advertisement shown. , Total Minutes for which advertisement shown.

From the data set you can determine the frequencies of all the advertisement shown in Asia continent. For example, between 1990 and 2014,

500 advertisement were given from China to Shown in India, While 2000 advertisement given by Russia to shown in Japan.

Now you want to draw the pictue which shows the relation between which contries given most advertisement in the other country.

Select the correct option.

1. Heat map

2. Tree map

3. Access Mostly Uused Products by 50000+ Subscribers

4. Bar chart

5. Scatter plot

Ans :1 Exp : A scatter plot, scatterplot, or scattergraph is a type of mathematical diagram using Cartesian coordinates to display values for two variables for a set of data. The data is displayed as a collection of points, each having the value of one variable determining the position on the horizontal axis and the value of the other variable determining the position on the vertical axis. This kind of plot is also called a scatter chart, scattergram, scatter diagram, or scatter graph.

A heat map is a two-dimensional representation of data in which values are represented by colors. A simple heat map provides an immediate visual summary of information. More elaborate heat maps allow the viewer to understand complex data sets.

Another type of heat map, which is often used in business, is sometimes referred to as a tree map. This type of heat map uses rectangles to represent components of a data set. The largest rectangle represents the dominant logical division of data and smaller rectangles illustrate other sub-divisions within the data set. The color and size of the rectangles on this type of heat map can correspond to two different values, allowing the viewer to perceive two variables at once. Tree maps are often used for budget proposals, stock market analysis, risk management, project portfolio analysis, market share analysis, website design and network management. In descriptive statistics, a box plot or boxplot is a convenient way of graphically depicting groups of numerical data through their quartiles. Box plots may also have lines extending vertically from the boxes (whiskers) indicating variability outside the upper and lower quartiles, hence the terms box-and-whisker plot and box-and-whisker diagram. Outliers may be plotted as individual points.

To visualize correlations between two variables, a scatter plot is typically the best choice. By plotting the data on a scatter plot, you can easily see any trends in the correlation, such as a linear relationship, a log normal relationship, or a polynomial relationship. A heat map uses three dimensions and so would be a poor choice for this purpose. Box plots, bar charts, and tree maps do not provide the kind of uniform special mapping of the data onto the graph that is required to see trends.In order to effectively visualize the advertisement source and destination frequencies, you'll need a plot that gives at least three dimensions: the source, destination, and frequency. A heat map provides exactly that. Scatter plots, box plots, tree maps, and bar charts provide at most two dimensions. In theory, you could use a three-dimensional variant of one of the two dimensions graphs, but three-dimensional graphs are never a good idea. Three-dimensional graphs can only be shown in two dimensions in print and hence cause visual distortions to the data. They can also hide some data points, and they make it very difficult to compare data points from different parts of the graph.

Question :

Which of the following graph can be best presented in two-dimension

1. Scatter plots

2. Box plots

3. Access Mostly Uused Products by 50000+ Subscribers

4. Bar charts

1. 1,2,3

2. 2,3,4

3. Access Mostly Uused Products by 50000+ Subscribers

4. 1,2,4

5. All 1,2,3 and 4

Ans : 5

Exp : A heat map provides exactly that. Scatter plots, box plots, tree maps, and bar charts provide at most two dimensions. In theory, you could use a three-dimensional variant of one of the two dimensions graphs, but three-dimensional graphs are never a good idea. Three-dimensional graphs can only be shown in two dimensions in print and hence cause visual distortions to the data. They can also hide some data points, and they make it very difficult to compare data points from different parts of the graph.

Question : You are given a data set that contains information about tv advertisement placed between and of Zee News Channel

(Total Asia continent information). With the following detailed information.

Advertisement duration, Cost rate per minute of Advertissement, Country of the Advertisers, City from which addvertiser

Country to which advertise needs to be shown., City to which advertise needs to be shown., Month total advertisement

Days (of month) advertisement shown, Total hourds for which advertisement shown. , Total Minutes for which advertisement shown.

From the data set you can determine the frequencies of all the advertisement shown in Asia continent. For example, between 1990 and 2014,

500 advertisement were given from China to Shown in India, While 2000 advertisement given by Russia to shown in Japan.

Now you want to draw the pictue which shows the relation between Ad dthat every city and country has of the overall ad data, which technique you feel would be better.

1. Scatter plot

2. Heat map

3. Access Mostly Uused Products by 50000+ Subscribers

4. Tree map

Ans : 4

Exp : To show the share of advertisement originations for every city and state, you'll need a way to show hierarchical information. A tree map is a natural choice, since it's designed for exactly that purpose. You could, however, use a stacked bar chart to present the same information. A heat map has an extra, unneeded dimension, which would make the graph confusing. A scatter plot is for numeric data in both dimensions. A box plot is for groupings of multiple values.

A scatter plot, scatterplot, or scattergraph is a type of mathematical diagram using Cartesian coordinates to display values for two variables for a set of data.

The data is displayed as a collection of points, each having the value of one variable determining the position on the horizontal axis and the value of the other variable determining the position on the vertical axis. This kind of plot is also called a scatter chart, scattergram, scatter diagram, or scatter graph.

A heat map is a two-dimensional representation of data in which values are represented by colors. A simple heat map provides an immediate visual summary of information. More elaborate heat maps allow the viewer to understand complex data sets.

Another type of heat map, which is often used in business, is sometimes referred to as a tree map. This type of heat map uses rectangles to represent components of a data set. The largest rectangle represents the dominant logical division of data and smaller rectangles illustrate other sub-divisions within the data set. The color and size of the rectangles on this type of heat map can correspond to two different values, allowing the viewer to perceive two variables at once. Tree maps are often used for budget proposals, stock market analysis, risk management, project portfolio analysis, market share analysis, website design and network management.

In descriptive statistics, a box plot or boxplot is a convenient way of graphically depicting groups of numerical data through their quartiles. Box plots may also have lines extending vertically from the boxes (whiskers) indicating variability outside the upper and lower quartiles, hence the terms box-and-whisker plot and box-and-whisker diagram. Outliers may be plotted as individual points.

To visualize correlations between two variables, a scatter plot is typically the best choice. By plotting the data on a scatter plot, you can easily see any trends in the correlation, such as a linear relationship, a log normal relationship, or a polynomial relationship. A heat map uses three dimensions and so would be a poor choice for this purpose. Box plots, bar charts, and tree maps do not provide the kind of uniform special mapping of the data onto the graph that is required to see trends. In order to effectively visualize the advertisement source and destination frequencies, you'll need a plot that gives at least three dimensions: the source, destination, and frequency. A heat map provides exactly that. Scatter plots, box plots, tree maps, and bar charts provide at most two dimensions. In theory, you could use a three-dimensional variant of one of the two dimensions graphs, but three-dimensional graphs are never a good idea. Three-dimensional graphs can only be shown in two dimensions in print and hence cause visual distortions to the data. They can also hide some data points, and they make it very difficult to compare data points from different parts of the graph.

Question :

Which of the following is a correct use case for the scatter plots

1. Male versus female likelihood of having lung cancer at different ages

2. technology early adopters and laggards' purchase patterns of smart phones

3. Access Mostly Uused Products by 50000+ Subscribers

4. All of the above

Ans :4

Exp : Looking to dig a little deeper into some data, but not quite sure how - or if - different

pieces of information relate? Scatter plots are an effective way to give you a sense of

trends, concentrations and outliers that will direct you to where you want to focus your

investigation efforts further.

When to use scatter plots:

o Investigating the relationship between different variables. Examples: Male

versus female likelihood of having lung cancer at different ages, technology early

adopters' and laggards' purchase patterns of smart phones, shipping costs of

different product categories to different regions.

Question :

Which of the following places where we cannot use Gantt charts

1. Displaying a project schedule. Examples: illustrating key deliverables, owners, and deadlines.

2. Showing other things in use over time. Examples: duration of a machine's use,

3. Access Mostly Uused Products by 50000+ Subscribers

4. None of the above

Ans : 4

Exp : Gantt charts excel at illustrating the start and finish dates elements of a project. Hitting

deadlines is paramount to a project's success. Seeing what needs to be accomplished -

and by when - is essential to make this happen. This is where a Gantt chart comes in.

While most associate Gantt charts with project management, they can be used to

understand how other things such as people or machines vary over time. You could

use a Gantt, for example, to do resource planning to see how long it took people to hit

specific milestones, such as a certification level, and how that was distributed over time.

When to use Gantt charts:

o Displaying a project schedule. Examples: illustrating key deliverables, owners,

and deadlines.

o Showing other things in use over time. Examples: duration of a machine's use,

availability of players on a team.

Question :

Which of the following is the best example where we can use Heat maps

1. Segmentation analysis of target market

2. product adoption across regions

3. Access Mostly Uused Products by 50000+ Subscribers

4. All of the above

5. None of 1,2 and 3

Ans : 4

Exp : Heat maps are a great way to compare data across two categories using color. The

Effect is to quickly see where the intersection of the categories is strongest and weakest.

When to use heat maps:

Showing the relationship between two factors. Examples: segmentation analysis of target market, product adoption across regions, sales leads by Individual rep.

Question :

Which of the following cannot be presented using TreeMap?

1. Storage usage across computer machines

2. managing the number and priority of technical support cases

3. Access Mostly Uused Products by 50000+ Subscribers

4. None of the above

Question :

If you want to understanding your data at a glance, seeing how data is skewed towards one end, which is the best fit graph or chart.

1. Scatter graph

2. Tree Map

3. Access Mostly Uused Products by 50000+ Subscribers

4. Box-and-whisker plot

Ans : 4

Exp : : Box-and-whisker Plot

Box-and-whisker plots, or boxplots, are an important way to show distributions of data. The name refers to the two parts of the plot: the box, which contains the median of the data along with the 1st and 3rd quartiles (25% greater and less than the median), and the whiskers, which typically represents data within 1.5 times the Inter-quartile Range (the difference between the 1st and 3rd quartiles). The whiskers can also be used to also show the maximum and minimum points within the data. When to use box-and-whisker plots: o Showing the distribution of a set of a data: Examples: understanding your data at a glance, seeing how data is skewed towards one end, identifying outliers in your data.

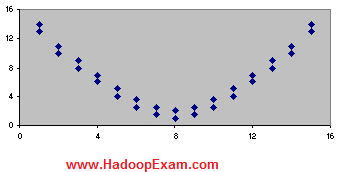

Question : The scatterplot below shows the relation between two variables.

Which of the following statements are true?

I. The relation is strong.

II. The slope is positive.

III. The slope is negative.

1. I only

2. II only

3. Access Mostly Uused Products by 50000+ Subscribers

4. I and II

5. I and III

Ans : 1

Exp : The correct answer is 1. The relation is strong because the dots are tightly clustered around a line. Note that a line does not have to be straight for a relationship to be strong. In this case, the line is U-shaped.

Across the entire scatterplot, the slope is zero. In the first half of the scatterplot, the Y variable gets smaller as the X variable gets bigger; so the slope in the first half of the scatterplot is negative. But in the second half of the scatterplot, just the opposite occurs. The Y variable gets bigger as the X variable gets bigger; so the slope in the second half is positive. When the slope is positive in one half of a scatterplot and negative in the other half, the slope for the entire scatterplot is zero.

Question :

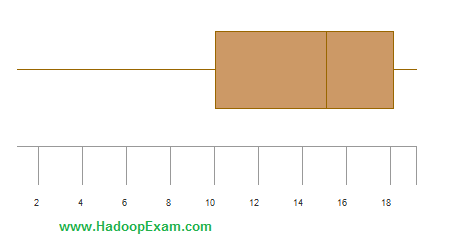

Consider the boxplot below.

Which of the following statements are true?

I. The distribution is skewed right.

II. The interquartile range is about 8.

III. The median is about 10.

1. I only

2. II only

3. Access Mostly Uused Products by 50000+ Subscribers

4. I and III

5. II and III

Ans : 2

Exp :

The correct answer is (B). Most of the observations are on the high end of the scale, so the distribution is skewed left. The interquartile range is indicated by the length of the box, which is 18 minus 10 or 8. And the median is indicated by the vertical line running through the middle of the box, which is roughly centered over 15. So the median is about 15.

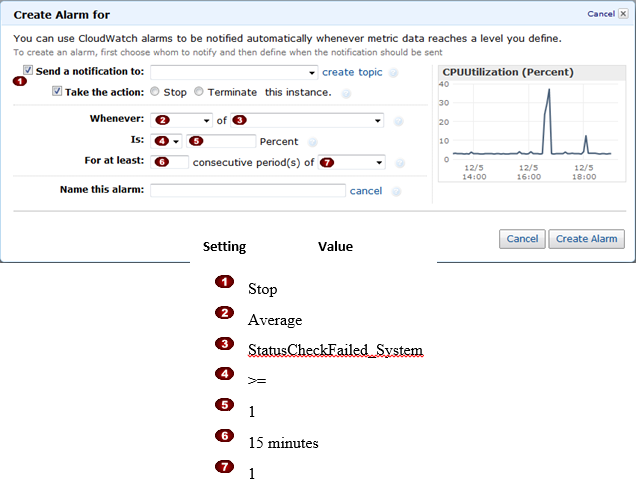

Question : You are working with CISCO telephone department and

you need to model the failure rate of the telephone devices,

you chose the beta distribution to model the telephone failure rates,

with two variables a,b as shown in image why you decided to use beta distribution?

1. Because it has two parameters rather than one

2. Because it is conjugate to the binomial distribution

3. Access Mostly Uused Products by 50000+ Subscribers

4. Because it distribution ant bowl shape.

Ans : 2

Exp : Binomial distribution pops up in our problems daily, given that the number of occurrences k of events with probability p in a sequence of size n can be described as k=Binomial(n,p) Question that naturally arises in this context is - given observations of k and n , how do we estimate p ? One might say that simply computing p=kn should be enough, since that's both uniformly minimum variance and a maximum likelihood estimator. However, such estimator might be misleading when n is small (as anyone trying to estimate clickthrough rates from small number of impressions can testify). In this context, we can express our model as:

ki=Binomial(ni,pi)

pi=Beta(a,b),i=1...N

where N is total number of observations and a and b are parameters to be estimated. Such model is also called Empirical Bayes. Unlike traditional Bayes, in which we pull prior distribution and it's parameters out of the thin air, Empirical Bayes estimates prior parameters from the data.

The question is - what can we do ? One thing that naturally comes to mind is incorporating any prior knowledge about the distribution we might have. A wise choice for prior of binomial distribution is usually Beta distribution, not just because of it's convenience (given that it's conjugate prior), but also because of flexibility in incorporating different distribution shapes

In Bayesian probability theory, if the posterior distributions p(?|x) are in the same family as the prior probability distribution p(?), the prior and posterior are then called conjugate distributions, and the prior is called a conjugate prior for the likelihood function. For example, the Gaussian family is conjugate to itself (or self-conjugate) with respect to a Gaussian likelihood function: if the likelihood function is Gaussian, choosing a Gaussian prior over the mean will ensure that the posterior distribution is also Gaussian. This means that the Gaussian distribution is a conjugate prior for the likelihood which is also Gaussian. The concept, as well as the term "conjugate prior", was introduced by Howard Raiffa and Robert Schlaifer in their work on Bayesian decision theory. A similar concept had been discovered independently by George Alfred Barnard.

The following proposition states the relation between the Beta and the binomial distributions. Proposition suppose X is a random variable having a Beta distribution with parameters A and B. Let Y be another random variable such that its distribution conditional on X is a binomial distribution with parameters n and X. Then, the conditional distribution of X given Y=y is a Beta distribution with parameters A+y and B+n-y.

A simple model of hardware failure assumes that each device, independently and with equal probability, might fail over a short interval time. Given that probability, the number of failures in that interval can be modeled by the binomial distribution.

This probability is like a rate of failure and itself is not known and must be modeled. In terms of Bayesian theory, the likelihood function is from the binomial distribution. The prior and posterior distribution ought to be of the same form -- both represent knowledge about this rate of failure -- although with different estimates of that rate. A distribution with this relationship is called the conjugate prior. The conjugate prior for the binomial distribution is the beta distribution. Hence the beta distribution could usefully model knowledge about hardware failure rate.

Question :

Please select which of the following is not a supervised technique

1. Hierarchical Clustering

2. Linear Regression

3. Access Mostly Uused Products by 50000+ Subscribers

4. Naïve Bayesian Classifier

5. Decision Trees

Ans : 1

Exp : Supervised Learning

Linear Regression

Decision Trees

Naïve Bayesian Classifier

Artificial Neural Networks (Single-layer Perceptron)

k-Nearest Neighbour

Unsupervised Learning

Hierarchical Clustering

Agglomerative Clustering

Divisive Clustering

Question :

Map the followings

1. Clustering

2. Classification

3. Access Mostly Uused Products by 50000+ Subscribers

A. Build models to classify data into different categories

B. Build models to predict continuous data.

C. Find natural groupings and patterns in data.

1. 1-A,2-B,3-C

2. 1-B,2-C,3-A

3. Access Mostly Uused Products by 50000+ Subscribers

4. 1-B,2-A,3-C

Ans : 3

Exp : Classification

Build models to classify data into different categories.

Algorithms: support vector machine (SVM), boosted and bagged decision trees, k-nearest neighbor, Naïve Bayes, discriminant analysis, neural networks,

Regression

Build models to predict continuous data.

Algorithms: linear model, nonlinear model, regularization, stepwise regression, boosted and bagged decision trees, neural networks, adaptive neuro-fuzzy learning

Clustering

Find natural groupings and patterns in data.

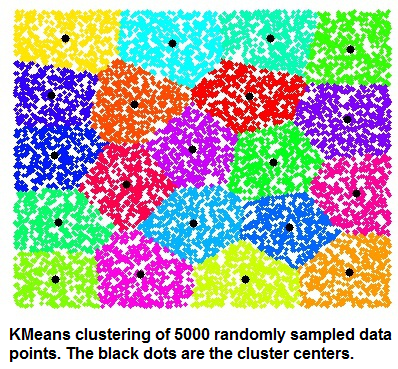

Algorithms: k-means, hierarchical clustering, Gaussian mixture models, hidden Markov models, self-organizing maps, fuzzy c-means clustering, subtractive clustering,

Question :

Which of the following is not a correct application for the Classification?

1. credit scoring

2. tumor detection

3. Access Mostly Uused Products by 50000+ Subscribers

4. drug discovery

Ans : 4

Exp : Classification : Build models to classify data into different categories

credit scoring, tumor detection, image recognition

Regression: Build models to predict continuous data.

electricity load forecasting, algorithmic trading, drug discovery

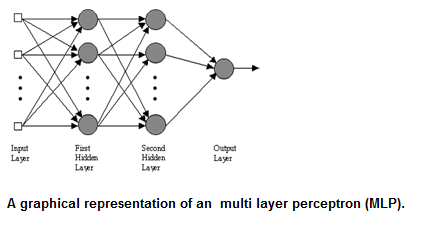

Question : The most common neural network model is the multi layer perceptron (MLP).

The perceptron is an algorithm for ____________ of an input into one of several possible non-binary outputs.

It is a type of linear classifier, i.e. a classification algorithm that makes its predictions based on a

linear predictor function combining a set of weights with the feature vector.

1. Pricipal Component Analysis

2. Simple Vector Machine

3. Access Mostly Uused Products by 50000+ Subscribers

4. Unsupervised learning

5. K-means clustering

Ans : 3

Exp : Neural Networks are an information processing technique based on the way biological nervous systems, such as the brain, process information. They resemble the human brain in the following two ways:A neural network acquires knowledge through learning. A neural network's knowledge is stored within inter-neuron connection strengths known as synaptic weights. Neural networks are being applied to an increasing large number of real world problems. Their primary advantage is that they can solve problems that are too complex for conventional technologies; problems that do not have an algorithmic solution or for which an algorithmic solution is too complex to be defined. In general, neural networks are well suited to problems that people are good at solving, but for which computers generally are not. These problems include pattern recognition and forecasting, which requires the recognition of trends in data.

The true power and advantage of neural networks lies in their ability to represent both linear and non-linear relationships and in their ability to learn these relationships directly from the data being modeled. Traditional linear models are simply inadequate when it comes to modeling data that contains non-linear characteristics.

The most common neural network model is the multi layer perceptron (MLP). This type of neural network is known as a supervised network because it requires a desired output in order to learn. The goal of this type of network is to create a model that correctly maps the input to the output using historical data so that the model can then be used to produce the output when the desired output is unknown.The MLP and many other neural networks learn using an algorithm called backpropagation. With backpropagation, the input data is repeatedly presented to the neural network. With each presentation the output of the neural network is compared to the desired output and an error is computed. This error is then fed back (backpropagated) to the neural network and used to adjust the weights such that the error decreases with each iteration and the neural model gets closer and closer to producing the desired output. This process is known as "training".In machine learning, the perceptron is an algorithm for supervised classification of an input into one of several possible non-binary outputs. It is a type of linear classifier, i.e. a classification algorithm that makes its predictions based on a linear predictor function combining a set of weights with the feature vector. The algorithm allows for online learning, in that it processes elements in the training set one at a time.

The perceptron is a binary classifier which maps its input (a real-valued vector) to an output value (a single binary value): The value of (0 or 1) is used to classify as either a positive or a negative instance, in the case of a binary classification problem. If is negative, then the weighted combination of inputs must produce a positive value greater than in order to push the classifier neuron over the 0 threshold. Spatially, the bias alters the position (though not the orientation) of the decision boundary. The perceptron learning algorithm does not terminate if the learning set is not linearly separable. If the vectors are not linearly separable learning will never reach a point where all vectors are classified properly. The most famous example of the perceptron's inability to solve problems with linearly nonseparable vectors is the Boolean exclusive-or problem. The solution spaces of decision boundaries for all binary functions and learning behaviors are studied in the reference. In the context of artificial neural networks, a perceptron is an artificial neuron using the Heaviside step function as the activation function. The perceptron algorithm is also termed the single-layer perceptron, to distinguish it from a multilayer perceptron, which is a misnomer for a more complicated neural network. As a linear classifier, the single-layer perceptron is the simplest feedforward neural network.

Question :

In which of the following scenerio, you can apply the Chi-Square test ?

1. Suppose you want to determine if certain types of products sell better in certain geographic locations than others.

2. Suppose you want to test if altering your product mix (% of upscale, mid-range and volume items, say) has impacted profits.

3. Access Mostly Uused Products by 50000+ Subscribers

4. Only 1 and 3

5. All 1,2 and 3

Ans : 5

Exp : Any business situation where you are essentially checking if one variable, X is related to, or independent of, another variable, Y. The use of chi-square test is indicated in any of the following business scenarios.

1. Suppose you want to determine if certain types of products sell better in certain geographic locations than others. A trivial example: the type of shoes sold in winter depends strongly on whether a retail outlet is located in the upper mid-west versus in the south. A slightly more complicated example would be to check if the type of gasoline sold in a neighborhood is indicative of the median income in the region. So variable X would be the type of gasoline and variable Y would be income ranges (e.g. less than 0k, 41k-50k, etc).

2. Suppose you want to test if altering your product mix (% of upscale, mid-range and volume items, say) has impacted profits. Here you could compare sales revenues of each product type before and after the change in product mix. Thus the categories in variable X would include all the product types and the categories in variable Y would include period 1 and period 2.

3. Access Mostly Uused Products by 50000+ Subscribers

No matter the business analytics problem, the chi-square test will find uses when you are trying to establish or invalidate that a relationship exists between two given business parameters that are categorical (or nominal) data types.

Question : Select the correct statement which applies to SVM (Support vector machine)

1. The SVM algorithm is a maximum margin classifier, and tries to pick a decision boundary that creates the widest margin between classes

2. SVMs are particularly better at multi-label classification

3. Access Mostly Uused Products by 50000+ Subscribers

4. Only 1 and 3

5. All 1,2 and 3

Ans : 1

Exp : You can use a support vector machine (SVM) when your data has exactly two classes. An SVM classifies data by finding the best hyperplane that separates all data points of one class from those of the other class. The best hyperplane for an SVM means the one with the largest margin between the two classes. Margin means the maximal width of the slab parallel to the hyperplane that has no interior data points.

The support vectors are the data points that are closest to the separating hyperplane; these points are on the boundary of the slab. The following figure illustrates these definitions, with + indicating data points of type 1, and - indicating data points of type -1.

The SVM algorithm is a maximum margin classifier, and tries to pick a decision boundary that creates the widest margin between classes, rather than just any boundary that separates the classes. This helps generalization to test data, since it is less likely to misclassify points near the decision boundary, as the boundary maintains a large margin from training examples.

SVMs are not particularly better at multi-label clasification. Linear separability is not required for either classification technique, and does not relate directly to an advantage of SVMs. SVMs are not particularly more suited to low dimensional data.