Cloudera Hadoop Administrator Certification Certification Questions and Answer (Dumps and Practice Questions)

Question : You have a setup of YARN cluster where the total application memory available is GB, there are department queues,

IT and DCS. The IT queue has 15 GB allocated and DCS queue has 5 GB allocated. Each map task requires 10 GB allocation.

How does the FairScheduler assign the available memory resources under the Single Resource Fairness(SRF) rule?

1. DCS has less resources and will be granted the next 10 GB that becomes available

2. None of them will be granted any memory as both queues have allocated memory

3. Access Mostly Uused Products by 50000+ Subscribers

4. IT has more resources and will be granted the next 10 GB that becomes available

Correct Answer : Get Lastest Questions and Answer :

Exp: Fair scheduling is a method of assigning resources to applications such that all apps get, on average, an equal share of resources over time. Hadoop NextGen is capable of scheduling multiple resource types. By default, the Fair Scheduler bases scheduling fairness decisions only on memory. It can be configured to schedule with both memory and CPU, using the notion of Dominant Resource Fairness developed by Ghodsi et al. When there is a single app running, that app uses the entire cluster. When other apps are submitted, resources that free up are assigned to the new apps, so that each app eventually on gets roughly the same amount of resources. Unlike the default Hadoop scheduler, which forms a queue of apps, this lets short apps finish in reasonable time while not starving long-lived apps. It is also a reasonable way to share a cluster between a number of users. Finally, fair sharing can also work with app priorities - the priorities are used as weights to determine the fraction of total resources that each app should get.

The scheduler organizes apps further into "queues", and shares resources fairly between these queues. By default, all users share a single queue, named "default". If an app specifically lists a queue in a container resource request, the request is submitted to that queue. It is also possible to assign queues based on the user name included with the request through configuration. Within each queue, a scheduling policy is used to share resources between the running apps. The default is memory-based fair sharing, but FIFO and multi-resource with Dominant Resource Fairness can also be configured. Queues can be arranged in a hierarchy to divide resources and configured with weights to share the cluster in specific proportions.

In addition to providing fair sharing, the Fair Scheduler allows assigning guaranteed minimum shares to queues, which is useful for ensuring that certain users, groups or production applications always get sufficient resources. When a queue contains apps, it gets at least its minimum share, but when the queue does not need its full guaranteed share, the excess is split between other running apps. This lets the scheduler guarantee capacity for queues while utilizing resources efficiently when these queues don't contain applications.

The Fair Scheduler lets all apps run by default, but it is also possible to limit the number of running apps per user and per queue through the config file. This can be useful when a user must submit hundreds of apps at once, or in general to improve performance if running too many apps at once would cause too much intermediate data to be created or too much context-switching. Limiting the apps does not cause any subsequently submitted apps to fail, only to wait in the scheduler's queue until some of the user's earlier apps finish.

Initially, DCS and IT each have some resources allocated to jobs in their respective queues and only 10GB remains in the cluster. Each queue is requesting to run a map task requiring 10GB, totaling more resources than are available in the cluster. DCS currently holds less resources so the Fair Scheduler will award a container with the requested 10GB of memory to DCS.

Another way to view the allocation: 10GB per map is being requested and 10GB is available. One queue is using less than its fair share. That queue is granted the next available resources

Question : Your Data Management Hadoop cluster has a total of GB of memory capacity.

Researcher Allen submits a MapReduce Job Equity which is configured to require a total of 100 GB of memory and few seconds later,

Researcher Babita submits a MapReduce Job ETF which is configured to require a total of 15 GB of memory. Using the YARN FairScheduler,

do the tasks in Job Equity have to finish before the tasks in Job ETF can start?

1. The tasks in Job ETF have to wait until the tasks in Job Equity to finish as there is no memory left

2. The tasks in Job ETF do not have to wait for the tasks in Job Equity to finish, tasks in Job ETF can start when resources become available

3. Access Mostly Uused Products by 50000+ Subscribers

4. The tasks in Job Equity have to wait until the tasks in Job ETF to finish as small jobs will be given priority

Correct Answer : Get Lastest Questions and Answer :

Exp: The fair scheduler supports hierarchical queues. All queues descend from a queue named "root". Available resources are distributed among the children of the root queue in the typical fair scheduling fashion. Then, the children distribute the resources assigned to them to their children in the same fashion. Applications may only be scheduled on leaf queues. Queues can be specified as children of other queues by placing them as sub-elements of their parents in the fair scheduler allocation file.

A queue's name starts with the names of its parents, with periods as separators. So a queue named "queue1" under the root queue, would be referred to as "root.queue1", and a queue named "queue2" under a queue named "parent1" would be referred to as "root.parent1.queue2". When referring to queues, the root part of the name is optional, so queue1 could be referred to as just "queue1", and a queue2 could be referred to as just "parent1.queue2".

Additionally, the fair scheduler allows setting a different custom policy for each queue to allow sharing the queue's resources in any which way the user wants. A custom policy can be built by extending org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.SchedulingPolicy. FifoPolicy, FairSharePolicy (default), and DominantResourceFairnessPolicy are built-in and can be readily used.

Certain add-ons are not yet supported which existed in the original (MR1) Fair Scheduler. Among them, is the use of a custom policies governing priority "boosting" over certain apps.

The first job submitted, Equity will require X number of map tasks based on the input split. As the only job, these tasks can use up to the available 50 GB of memory across the cluster. When the second job is submitted a few seconds later, it will require Y number of map tasks based on the input split. To provide "fairness", the scheduler will allocate the next available memory resources to Job ETF. By default, with no preemption configured, resources will become available as an individual task completes from Job Equity. Remaining tasks for Job Equity will remain in the queue until resources are available, allowing both jobs to run in parallel.

A job will not use more resources than required. At most, Job ETF will use 15 GB of memory and Job Equity will continue to run with the remaining 35 GB or memory.

Question : Select the two correct statements from below regarding the Hadoop Cluster Infrastructure

configured with Fair Scheduler and each MapReduce ETL work will be assigned to a pool.

1. Pools are assigned priorities. Pools with higher priorities are executed before pools with lower priorities.

2. Each pool's share of task slots remains static during the execution of any individual job.

3. Access Mostly Uused Products by 50000+ Subscribers

4. Each pool's share of task slots may change throughout the course of job execution.

5. Each pool gets exactly 1/N of the total available task slots, where N is the number of jobs running on the cluster.

6. Pools get a dynamically-allocated share of the available task slots (subject to additional constraints)

1. 1,4,6

2. 3,4,6

3. Access Mostly Uused Products by 50000+ Subscribers

4. 2,3,4

5. 4,5,6

Correct Answer : Get Lastest Questions and Answer :

Exp: The Fair Scheduler groups jobs into pools and performs fair sharing between these pools.Each pool can use either FIFO or fair sharing to schedule jobs internal to the pool. The pool that a job is placed in is determined by a JobConf property, the pool name property. By default, this is mapreduce.job.user.name , so that there is one pool per user. However, different properties can be used, e.g. group.name to have one pool per Unix group. A common trick is to set the pool name property to an unused property name such as pool.name and make this default to mapreduce.job.user.name , so that there is one pool per user but it is also possible to place jobs into special pools by setting their

pool.name

directly. The

mapred-site.xml

mapred.fairscheduler.poolnameproperty --> pool.name

pool.name --> ${mapreduce.job.user.name}

Normally, active pools (those that contain jobs) will get equal shares of the map and reduce task slots in the cluster. However, it is also possible to set a

minimum share of map and reduce slots on a given pool, which is a number of slots that it will always get when it is active, even if its fair share would be below this number. This is useful for guaranteeing that production jobs get a certain desired level of service when sharing a cluster with non-production jobs. Minimum shares have three effects: 1. The pools fair share will always be at least as large as its minimum share. Slots are taken from the share of other pools to achieve this. The only exception is if the minimum shares of the active pools add up to more than the total number of slots in the cluster; in this case, each pools share will be scaled down proportionally. 2. Pools whose running task count is below their minimum share get assigned slots first when slots are available. 3. It is possible to set a preemption timeout on the pool after which, if it has not received enough task slots to meet its minimum share, it is allowed to kill tasks in other jobs to meet its share. Minimum shares with preemption timeouts thus act like SLAs. Note that when a pool is inactive (contains no jobs), its minimum share is not reserved for it the slots are split up among the other pools

Pools are allocated their 'fair share' of task slots based on the total number of slots available, and also the demand in the pool a pool will never be allocated more slots than it needs. The pool's share of slots may change based on jobs running in other pools; a pool with a minimum share configured, for example, may take slots away from another pool to reach that minimum share when a job runs in that pool.

Related Questions

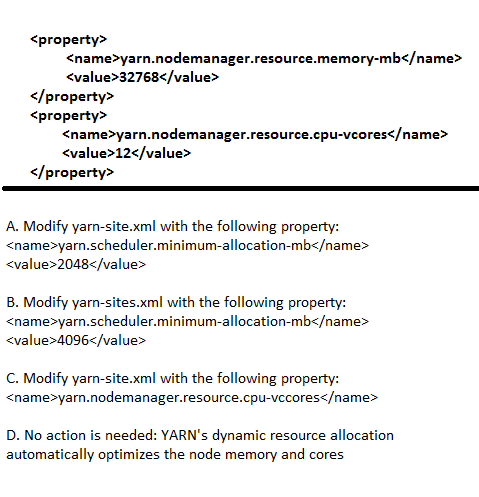

Question : Each node in your Hadoop cluster, running YARN, has GB memory and cores.

Your yarn-site.xml has the following configuration:

You want YARN to launch no more than 16 containers per node. What should you do?

1. A

2. B

3. Access Mostly Uused Products by 50000+ Subscribers

4. D

Question : You want to node to only swap Hadoop daemon data from RAM to disk when absolutely necessary. What

should you do?

1. Delete the /dev/vmswap file on the node

2. Delete the /etc/swap file on the node

3. Access Mostly Uused Products by 50000+ Subscribers

4. Set vm.swappiness file on the node

5. Delete the /swapfile file on the node

Question : You are configuring your cluster to run HDFS and MapReducer v (MRv) on YARN.

Which two daemons needs to be installed on your cluster's master nodes?

A. HMaster

B. ResourceManager

C. TaskManager

D. JobTracker

E. NameNode

F. DataNode

1. AB

2. AC

3. Access Mostly Uused Products by 50000+ Subscribers

4. DE

5. BE

Question : You observed that the number of spilled records from Map tasks far exceeds the number of map output

records. Your child heap size is 1GB and your io.sort.mb value is set to 1000MB. How would you tune your io.

sort.mb value to achieve maximum memory to disk I/O ratio?

1. For a 1GB child heap size an io.sort.mb of 128 MB will always maximize memory to disk I/O

2. Increase the io.sort.mb to 1GB

3. Access Mostly Uused Products by 50000+ Subscribers

4. Tune the io.sort.mb value until you observe that the number of spilled records equals (or is as close to

equals) the number of map output records.

Question : You are running a Hadoop cluster with a NameNode on host mynamenode, a secondary NameNode on host

mysecondarynamenode and several DataNodes.

Which best describes how you determine when the last checkpoint happened?

1. Execute hdfs namenode report on the command line and look at the Last Checkpoint information

2. Execute hdfs dfsadmin saveNamespace on the command line which returns to you the last checkpoint

value in fstime file

3. Access Mostly Uused Products by 50000+ Subscribers

Checkpoint information

4. Connect to the web UI of the NameNode (http://mynamenode:50070) and look at the Last Checkpoint

information

Question : What does CDH packaging do on install to facilitate Kerberos security setup?

1. Automatically configures permissions for log files at &MAPRED_LOG_DIR/userlogs

2. Creates users for hdfs and mapreduce to facilitate role assignment

3. Access Mostly Uused Products by 50000+ Subscribers

4. Creates a set of pre-configured Kerberos keytab files and their permissions

5. Creates and configures your kdc with default cluster values