AWS Certified Solutions Architect - Professional Questions and Answers (Dumps and Practice Questions)

Question :

1. A

2. B

3. C

4. D


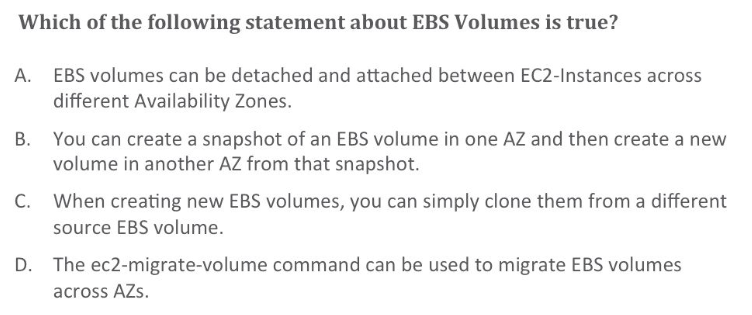

Explanation: When you create a new Amazon EBS volume, you can create it based on an existing snapshot; the new volume begins as an exact replica of the original volume that was used to create the snapshot. New volumes created from existing Amazon S3 snapshots load lazily in the background, so you can begin using them right away. If your instance accesses a piece of data that hasn't yet been loaded, the volume immediately downloads the requested data from Amazon S3, and then continues loading the rest of the volume's data in the background. For more information about creating snapshots, see Creating an Amazon EBS Snapshot.

Snapshots that are taken from encrypted volumes are automatically encrypted. Volumes that are created from encrypted snapshots are also automatically encrypted. Your encrypted volumes and any associated snapshots always remain protected. For more information, see Amazon EBS Encryption.

You can share your unencrypted snapshots with specific individuals, or make them public to share them with the entire AWS community. Users with access to your snapshots can create their own Amazon EBS volumes from your snapshot, but your snapshots remain completely intact. For more information about how to share snapshots, see Sharing Snapshots. Encrypted snapshots cannot be shared with anyone, because your volume encryption keys and master key are specific to your account. If you need to share your encrypted snapshot data, you can migrate the data to an unencrypted volume and share a snapshot of that volume. For more information, see Migrating Data.

Amazon EBS snapshots are constrained to the region in which they are created. After you have created a snapshot of an Amazon EBS volume, you can use it to create new volumes in the same region. For more information, see Restoring an Amazon EBS Volume from a Snapshot. You can also copy snapshots across AWS regions, making it easier to leverage multiple AWS regions for geographical expansion, data center migration and disaster recovery. You can copy any accessible snapshots that are in the available state.

You can restore an Amazon EBS volume with data from a snapshot stored in Amazon S3. You need to know the ID of the snapshot you wish to restore your volume from and you need to have access permissions for the snapshot. For more information on snapshots, see Amazon EBS Snapshots. New volumes created from existing Amazon S3 snapshots load lazily in the background. This means that after a volume is created from a snapshot, there is no need to wait for all of the data to transfer from Amazon S3 to your Amazon EBS volume before your attached instance can start accessing the volume and all its data. If your instance accesses data that hasn't yet been loaded, the volume immediately downloads the requested data from Amazon S3, and continues loading the rest of the data in the background.
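The lazy-loading behaviour described above can be pictured with a small toy model. This is only an illustrative sketch, not an AWS API: the `LazyVolume` class, its methods and the four-block "snapshot" are all hypothetical names made up for the example. It shows a read being served on demand before the background load has reached that block.

```python
class LazyVolume:
    """Toy model of a volume restored from a snapshot (hypothetical, not an AWS API)."""

    def __init__(self, snapshot_blocks):
        self._snapshot = snapshot_blocks  # stands in for snapshot data held in Amazon S3
        self._local = {}                  # blocks already loaded onto the volume
        self.fetches = []                 # order in which blocks were pulled

    def _fetch(self, index):
        self._local[index] = self._snapshot[index]
        self.fetches.append(index)

    def read(self, index):
        """Return a block, pulling it on demand if it has not been loaded yet."""
        if index not in self._local:
            self._fetch(index)
        return self._local[index]

    def background_step(self):
        """Load the next missing block; return False once every block is local."""
        for index in range(len(self._snapshot)):
            if index not in self._local:
                self._fetch(index)
                return True
        return False


volume = LazyVolume([b"a", b"b", b"c", b"d"])
first_read = volume.read(2)       # usable right away; block 2 is fetched on demand
while volume.background_step():   # the remaining blocks arrive afterwards
    pass
print(first_read, volume.fetches)
```

The fetch order recorded in `volume.fetches` starts with the block that was requested first, which is the point of the paragraph above: access does not wait for the full transfer.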

Amazon EBS volumes that are restored from encrypted snapshots are automatically encrypted. Encrypted volumes can only be attached to selected instance types. For more information, see Supported Instance Types.

When a block of data on a newly restored Amazon EBS volume is accessed for the first time, you might experience longer than normal latency. To avoid the possibility of increased read or write latency on a production workload, you should first access all of the blocks on the volume to ensure optimal performance; this practice is called pre-warming the volume.
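One way to "access all of the blocks" is simply to read the device from start to end once. The following is a minimal sketch under stated assumptions: the function name `touch_all_blocks` is made up for illustration, the device path `/dev/xvdf` mentioned in the comment is hypothetical, and the demonstration runs against a temporary file rather than a real volume. It only reads, which is the non-destructive case suited to a volume restored from a snapshot.

```python
import os
import tempfile


def touch_all_blocks(path, block_size=1024 * 1024):
    """Read the file or device at `path` once from start to end; return bytes read."""
    total = 0
    with open(path, "rb", buffering=0) as device:
        while True:
            chunk = device.read(block_size)
            if not chunk:
                break
            total += len(chunk)
    return total


# Demonstration on a temporary file standing in for a block device
# such as /dev/xvdf (hypothetical path; reading a real device needs root).
with tempfile.NamedTemporaryFile(delete=False) as handle:
    handle.write(b"\0" * (3 * 4096 + 100))
    demo_path = handle.name

bytes_read = touch_all_blocks(demo_path, block_size=4096)
os.remove(demo_path)
print(bytes_read)
```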

Question :

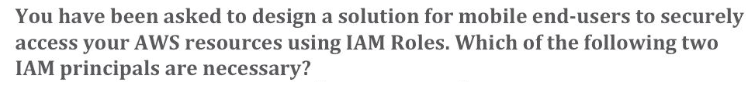

A. AWS Account

B. AWS Service

C. SAML Provider

D. Active Directory Users and Groups

E. Identity Provider

1. A,B

2. B,D


4. A,C

5. D,E


Explanation:

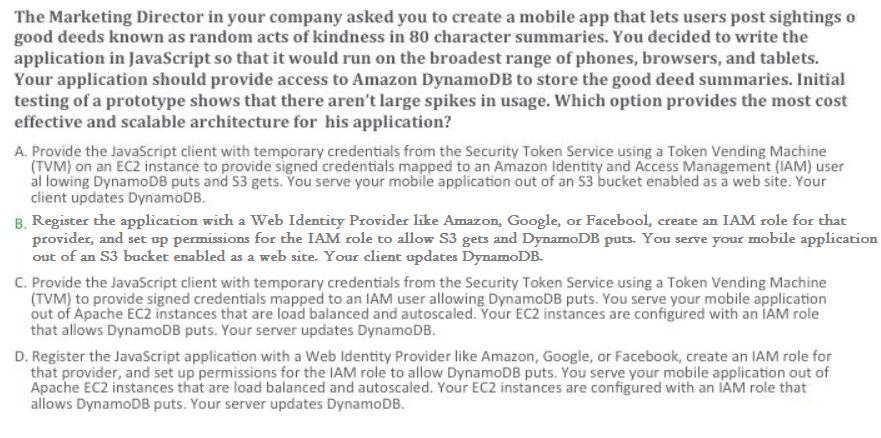

Question :

1. A

2. B

3. C

4. D


Explanation:

Related Questions

Question : A user has launched an EBS-backed EC2 instance in the US-East-a region. The user stopped the instance and started it again after days. AWS throws an `InsufficientInstanceCapacity` error. What can be the possible reason for this?

1. AWS does not have sufficient capacity in that availability zone

2. AWS zone mapping is changed for that user account


4. The user account has reached the maximum EC2 instance limit

Question : An organization has created IAM users. The organization wants each of the IAM users to have access to a separate DynamoDB table. All the users are added to the same group and the organization wants to set up a group-level policy for this. How can the organization achieve this?

1. Define the group policy and add a condition which allows access based on the IAM user name

2. Create a DynamoDB table with the same name as the IAM user name and define the policy rule which grants access based on the DynamoDB ARN using a variable


4. It is not possible to have a group-level policy which grants different IAM users access to different DynamoDB tables
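Option 2 refers to a policy "using a variable". As a rough illustration of what such a group policy might look like, the sketch below builds a policy document whose resource ARN ends in the IAM policy variable `${aws:username}`, so each user matches only the table named after them. The helper name, region, account ID and the chosen list of actions are assumptions made up for the example, not values from the question.

```python
import json


def per_user_table_policy(region, account_id):
    """Build a group policy that scopes each IAM user to a table named after them."""
    # ${aws:username} is resolved by IAM at request time; the doubled braces
    # only escape it inside the Python f-string.
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/${{aws:username}}"
    return {
        "Version": "2012-10-17",  # policy variables require this policy version
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "dynamodb:GetItem",
                    "dynamodb:PutItem",
                    "dynamodb:Query",
                    "dynamodb:UpdateItem",
                ],
                "Resource": table_arn,
            }
        ],
    }


# Region and account ID are placeholders.
policy = per_user_table_policy("us-east-1", "123456789012")
print(json.dumps(policy, indent=2))
```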

Question : QuickTechie.com currently runs several FTP servers that their customers use to upload and download large video files. They wish to move this system to AWS to make it more scalable, but they wish to maintain customer privacy and keep costs to a minimum. What AWS architecture would you recommend?

1. Ask their customers to use an S3 client instead of an FTP client. Create a single S3 bucket. Create an IAM user for each customer. Put the IAM users in a group that has an IAM policy that permits access to sub-directories within the bucket via use of the 'username' policy variable.

2. Create a single S3 bucket with Reduced Redundancy Storage turned on and ask their customers to use an S3 client instead of an FTP client. Create a bucket for each customer with a Bucket Policy that permits access only to that one customer.

3. [...] threshold. Load a central list of FTP users from S3 as part of the user data startup script on each instance.

4. Create a single S3 bucket with Requester Pays turned on and ask their customers to use an S3 client instead of an FTP client. Create a bucket for each customer with a Bucket Policy that permits access only to that one customer.
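Option 1 mentions the 'username' policy variable. A minimal sketch of a group policy along those lines is shown below: one statement lets a user list only their own prefix, the other lets them read and write objects under it. The bucket name, statement IDs and helper name are hypothetical, and the action list is an assumption chosen for illustration.

```python
import json


def per_user_prefix_policy(bucket):
    """Build a group policy confining each IAM user to <bucket>/<username>/."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListOwnPrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                # Listing is limited to keys under the caller's own prefix.
                "Condition": {"StringLike": {"s3:prefix": ["${aws:username}/*"]}},
            },
            {
                "Sid": "ReadWriteOwnObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/${{aws:username}}/*",
            },
        ],
    }


# "customer-videos" is a placeholder bucket name.
s3_policy = per_user_prefix_policy("customer-videos")
print(json.dumps(s3_policy, indent=2))
```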

Question : QuickTechie.com has deployed a multi-tier web application that relies on DynamoDB in a single region. For regulatory reasons they need disaster recovery capability in a separate region, with a Recovery Time Objective of 2 hours and a Recovery Point Objective of 24 hours. They should synchronize their data on a regular basis and be able to provision the web application rapidly using CloudFormation. The objective is to minimize changes to the existing web application, control the throughput of DynamoDB used for the synchronization of data, and synchronize only the modified elements.

Which design would you choose to meet these requirements?

1. Use AWS Data Pipeline to schedule a DynamoDB cross-region copy once a day. Create a last-updated attribute in your DynamoDB table that represents the timestamp of the last update and use it as a filter.

2. Use EMR and write a custom script to retrieve data from DynamoDB in the current region using a SCAN operation and push it to DynamoDB in the second region.

3. [...] will import data from S3 to DynamoDB in the other region.

4. Also send each update into an SQS queue in the second region; use an Auto Scaling group behind the SQS queue to replay the write in the second region.

Correct Answer : 1

Exp : According to the question, the RTO is 2 hours (you should be able to recover within 2 hours) and the RPO is 24 hours (it is acceptable to lose data written in the last 24 hours). Only modified data needs to be synchronized, hence we need to be able to copy incremental data. The Amazon announcement reads as follows:

We are excited to announce the availability of "DynamoDB Cross-Region Copy" feature in AWS Data Pipeline service. DynamoDB Cross-Region Copy enables you to configure periodic copy of DynamoDB table data from one AWS region to a DynamoDB table in another region (or to a different table in the same region). Using this feature can enable you to deliver applications from other AWS regions using the same data, as well as enabling you to create a copy of your data in another region for disaster recovery purposes.

To get started with this feature, from the AWS Data Pipeline console choose the "Cross Region DynamoDB Copy" Data Pipeline template and select the source and destination DynamoDB tables you want to copy from and to. You can also choose whether you want to perform an incremental or full copy of a table. Specify the time you want the first copy to start and the frequency of the copy, and your scheduled copy will be ready to go.

Hence options 2, 3 and 4 are out, and option 1 should be correct.
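The idea of an incremental copy filtered on a last-update timestamp can be sketched in plain Python. This is not how AWS Data Pipeline is implemented; it only illustrates the filter from option 1. The attribute name `LastUpdated`, the sample items and the fixed "now" are assumptions invented for the example, and the 24-hour window mirrors the daily schedule and the stated RPO.

```python
from datetime import datetime, timedelta, timezone


def modified_since(items, cutoff):
    """Return only the items whose LastUpdated timestamp is at or after `cutoff`."""
    return [item for item in items if item["LastUpdated"] >= cutoff]


# Fixed reference time so the example is reproducible.
now = datetime(2024, 1, 2, tzinfo=timezone.utc)

# Hypothetical table contents; LastUpdated is an assumed attribute name.
source_table = [
    {"id": "a", "LastUpdated": now - timedelta(hours=30)},
    {"id": "b", "LastUpdated": now - timedelta(hours=5)},
    {"id": "c", "LastUpdated": now - timedelta(minutes=10)},
]

# A daily run copies only what changed in the last 24 hours.
to_copy = modified_since(source_table, now - timedelta(hours=24))
print([item["id"] for item in to_copy])
```

Item `a` was last updated 30 hours before the run, so a previous daily run would already have copied it; only `b` and `c` are selected.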

Question : QuickTechie.com is serving on-demand training videos to your workforce. Videos are uploaded monthly in high-resolution MP4 format. Your workforce is distributed globally, often on the move, and uses company-provided tablets that require the HTTP Live Streaming (HLS) protocol to watch a video. Your company has no video transcoding expertise and, if required, you may need to pay for a consultant. How do you implement the most cost-efficient architecture without compromising high availability and quality of video delivery?

1. Elastic Transcoder to transcode original high-resolution MP4 videos to HLS. S3 to host videos with Lifecycle Management to archive original files to Glacier after a few days. CloudFront to serve HLS transcoded videos from S3.

2. A video transcoding pipeline running on EC2 using SQS to distribute tasks and Auto Scaling to adjust the number of nodes depending on the length of the queue. S3 to host videos with Lifecycle Management to archive all files to Glacier after a few days. CloudFront to serve HLS transcoded videos from Glacier.

3. [...] after a few days. CloudFront to serve HLS transcoded videos from EC2.

4. A video transcoding pipeline running on EC2 using SQS to distribute tasks and Auto Scaling to adjust the number of nodes depending on the length of the queue. EBS volumes to host videos and EBS snapshots to incrementally back up original files after a few days. CloudFront to serve HLS transcoded videos from EC2.
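Option 1 relies on S3 Lifecycle Management to move the original files to Glacier after a few days. A rough sketch of such a rule, expressed as a Python dictionary in the general shape of an S3 lifecycle configuration, is given below. The rule ID, the `originals/` prefix, the 7-day threshold and the helper name are all assumptions made up for illustration; the question only says "a few days".

```python
import json


def archive_originals_rule(prefix, days):
    """Build a lifecycle configuration that transitions objects under `prefix` to Glacier."""
    return {
        "Rules": [
            {
                "ID": "archive-originals",       # hypothetical rule name
                "Filter": {"Prefix": prefix},    # only the original uploads, not the HLS output
                "Status": "Enabled",
                "Transitions": [{"Days": days, "StorageClass": "GLACIER"}],
            }
        ]
    }


# Prefix and day count are placeholders.
lifecycle = archive_originals_rule("originals/", 7)
print(json.dumps(lifecycle, indent=2))
```

Keeping the transcoded HLS segments outside the archived prefix matters here, since objects in Glacier cannot be served directly to viewers.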

Question : QuickTechie Inc. wants to implement an order fulfillment process for selling a personalized gadget that needs an average of - days to produce, with some orders taking up to 6 months. You expect 10 orders per day on your first day, 1000 orders per day after 6 months and 10,000 orders after 12 months. Orders coming in are checked for consistency, then dispatched to your manufacturing plant for production, quality control, packaging, shipment and payment processing. If the product does not meet the quality standards at any stage of the process, employees may force the process to repeat a step. Customers are notified via email about order status and any critical issues with their orders, such as payment failure. Your base architecture includes AWS Elastic Beanstalk for your website with an RDS MySQL instance for customer data and orders.

How can you implement the order fulfillment process while making sure that the emails are delivered reliably?

1. Add a business process management application to your Elastic Beanstalk app servers and re-use the RDS database for tracking order status. Use one of the Elastic Beanstalk instances to send emails to customers.

2. Use SWF with an Auto Scaling group of activity workers and a decider instance in another Auto Scaling group with min/max=1. Use the decider instance to send emails to customers.


4. Use an SQS queue to manage all process tasks. Use an Auto Scaling group of EC2 Instances that poll the tasks and execute them. Use SES to send emails to customers.

Question : An AWS customer runs a public blogging website. The site users upload two million blog entries a month. The average blog entry size is KB. The access rate to blog entries drops to negligible 6 months after publication and users rarely access a blog entry 1 year after publication. Additionally, blog entries have a high update rate during the first 3 months following publication; this drops to no updates after 6 months. The customer wants to use CloudFront to improve their users' load times. Which of the following recommendations would you make to the customer?

1. Duplicate entries into two different buckets and create two separate CloudFront distributions where S3 access is restricted only to a CloudFront identity

2. Create a CloudFront distribution with US/Europe price class for US/Europe users and a different CloudFront distribution with All Edge Locations for the remaining users.

3. [...] was uploaded to be used with CloudFront behaviors.

4. Create a CloudFront distribution with Restrict Viewer Access Forward Query string set to true and minimum TTL of 0.