Cloudera Databricks Data Science Certification Questions and Answers (Dumps and Practice Questions)

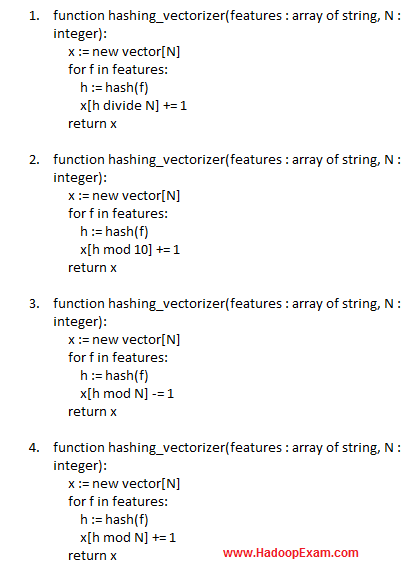

Question : Select the correct pseudo function for the hashing trick

1.

2.

3.

4.

Correct Answer : 4

Explanation:Instead of maintaining a dictionary, a feature vectorizer that uses the hashing trick can build a vector of a pre-defined length by applying a hash function h to the features (e.g., words) in the items under consideration, then using the hash values modulo number of features, directly as feature indices and updating the resulting vector at those indices.

The hashing trick takes a high-dimensional set of features (such as the words in a language) and maps them to a lower dimensional space by taking the hash of each value modulo the number of features we want in our model.

Question : What is the considerable difference between L and L regularization?

1. L1 regularization has more accuracy of the resulting model

2. Size of the model can be much smaller in L1 regularization than that produced by L2-regularization

3. L2-regularization can be of vital importance when the application is deployed in resource-tight environments such as cell-phones.

4. All of the above are correct

Correct Answer : 2

Explanation: The two most common regularization methods are called L1 and L2 regularization. L1 regularization penalizes the weight vector for its L1-norm (i.e. the sum of the absolute values of the weights), whereas L2 regularization uses its L2-norm. There is usually not a considerable difference between the two methods in terms of the accuracy of the resulting model (Gao et al., 2007), but L1 regularization has a significant advantage in practice. Because many of the weights of the features become zero as a result of L1-regularized training, the size of the model can be much smaller than that produced by L2-regularization. Compact models require less space on memory and storage, and enable the application to start up quickly. These merits can be of vital importance when the application is deployed in resource-tight environments such as cell-phones.

Regularization works by adding the penalty associated with the coefficient values to the error of the hypothesis. This way, an accurate hypothesis with unlikely coefficients would be penalized whila a somewhat less accurate but more conservative hypothesis with low coefficients would not be penalized as much.

Question :

Which of the following could be features?

1. 1. Words in the document

2. 2. Symptoms of a diseases

3. 3. Characteristics of an unidentified object

4. 4. Only 1 and 2

5. 5. All 1,2 and 3 are possible

Correct Answer : 5

Explanation: Any dataset that can be turned into lists of features. A feature is simply something that is either present or absent for a given item. In the case of documents, the features are the words in the document, but they could also be characteristics of an unidentified object, symptoms of a disease, or anything else that can be said to be present of absent.

Related Questions

Question : A fruit may be considered to be an apple if it is red, round, and about " in diameter.

A naive Bayes classifier considers each of these features to contribute independently

to the probability that this fruit is an apple, regardless of the

1. Presence of the other features.

2. Absence of the other features.

3. Presence or absence of the other features.

4. None of the above

Question : Regularization is a very important technique in machine learning to prevent over fitting.

And Optimizing with a L1 regularization term is harder than with an L2 regularization term because

1. Since the L1 norm is not differentiable

2. Since derivative is not constant

3. The objective function is not convex

4. The objective function is convex

Question :

One can work with the naive Bayes model without accepting Bayesian probability

1. True

2. False

Question :

Logistic regression is a model used for prediction of the probability of occurrence of an event.

It makes use of several variables that may be___________

1. Numerical

2. Categorical

3. Both 1 and 2 are correct

4. None of the 1 and 2 are correct

Question : Select the correct statement regarding the naive Bayes classification

1. it only requires a small amount of training data to estimate the parameters

2. Independent variables can be assumed

3. only the variances of the variables for each class need to be determined

4. for each class entire covariance matrix need to be determined

1. 1,2,3

2. 2,3,4

3. 1,3,4

4. 2,3,4

Question : Spam filtering of the emails is an example of

1. Supervised learning

2. Unsupervised learning

3. Clustering

4. 1 and 3 are correct

5. 2 and 3 are correct