Cloudera Databricks Data Science Certification Questions and Answers (Dumps and Practice Questions)

Question : With regard to feature hashing, with large vectors or with multiple locations per feature, ______________

1. It is a problem for accuracy

2. It is hard to understand what the classifier is doing

3. It is easy to understand what the classifier is doing

4. It is a problem for accuracy and also makes it hard to understand what the classifier is doing

Correct Answer : 2

Explanation: SGD-based classifiers avoid the need to predetermine vector size by simply picking a reasonable size and shoehorning the training data into vectors of that size. This approach is known as feature hashing. The shoehorning is done by picking one or more locations by using a hash of the name of the variable for continuous variables, or a hash of the variable name and the category name or word for categorical, text-like, or word-like data.

This hashed feature approach has the distinct advantage of requiring less memory and one less pass through the training data, but it can make it much harder to reverse engineer vectors to determine which original feature mapped to a vector location. This is because multiple features may hash to the same location. With large vectors or with multiple locations per feature, this isn't a problem for accuracy, but it can make it hard to understand what a classifier is doing.

An additional benefit of feature hashing is that the unknown and unbounded vocabularies typical of word-like variables aren't a problem.
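The shoehorning described above can be sketched roughly as follows. This is a minimal illustration, not any library's implementation: `hash_encode` is a hypothetical helper, MD5 stands in for whatever hash a real classifier uses, and the vector size (1000) and number of locations per feature (`probes=2`) are arbitrary assumptions.

```python
import hashlib
from typing import Dict, List, Union

def _bucket(key: str, probe: int, size: int) -> int:
    # Deterministic hash (MD5) of probe number + key, reduced modulo the vector size.
    digest = hashlib.md5(f"{probe}:{key}".encode("utf-8")).hexdigest()
    return int(digest, 16) % size

def hash_encode(record: Dict[str, Union[float, str, List[str]]],
                size: int = 1000, probes: int = 2) -> List[float]:
    """Hypothetical helper: shoehorn one record into a fixed-size vector."""
    vec = [0.0] * size
    for name, value in record.items():
        if isinstance(value, (int, float)):
            # Continuous variable: hash only the variable name, store the value.
            keys, weight = [name], float(value)
        elif isinstance(value, str):
            # Categorical variable: hash the variable name plus the category.
            keys, weight = [f"{name}={value}"], 1.0
        else:
            # Text-like variable: hash the variable name plus each word.
            keys, weight = [f"{name}={w}" for w in value], 1.0
        for key in keys:
            # "Multiple locations per feature": each key is written to `probes` slots.
            for p in range(probes):
                vec[_bucket(key, p, size)] += weight
    return vec
```

For example, `hash_encode({"age": 42.0, "color": "red", "text": ["cheap", "loans"]})` always returns a 1000-element vector, even for words never seen before; no vocabulary is built or stored.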

Question : What are the advantages of feature hashing?

1. It requires less memory

2. It requires one less pass through the training data

3. Vectors can easily be reverse engineered to determine which original feature mapped to a vector location

4. 1 and 2 are correct

5. 1, 2, and 3 are all correct

Correct Answer : 4

Explanation: SGD-based classifiers avoid the need to predetermine vector size by simply picking a reasonable size and shoehorning the training data into vectors of that size. This approach is known as feature hashing. The shoehorning is done by picking one or more locations by using a hash of the name of the variable for continuous variables, or a hash of the variable name and the category name or word for categorical, text-like, or word-like data.

This hashed feature approach has the distinct advantage of requiring less memory and one less pass through the training data, but it can make it much harder to reverse engineer vectors to determine which original feature mapped to a vector location. This is because multiple features may hash to the same location. With large vectors or with multiple locations per feature, this isn't a problem for accuracy, but it can make it hard to understand what a classifier is doing.

An additional benefit of feature hashing is that the unknown and unbounded vocabularies typical of word-like variables aren't a problem.
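To make "one less pass" and "unbounded vocabularies" concrete, the sketch below contrasts a dictionary-based encoder with a hashed one. Both functions are hypothetical illustrations; CRC32 and a vector size of 2**10 are assumed choices. The dictionary version must scan the data once to build its vocabulary, keep that vocabulary in memory, and cannot index a word it never saw; the hashed version computes each index on the fly in a single pass.

```python
import zlib
from typing import Dict, List

def dictionary_vectorize(docs: List[List[str]]) -> List[Dict[int, int]]:
    # Pass 1: scan all training data to build the vocabulary (word -> index).
    vocab: Dict[str, int] = {}
    for doc in docs:
        for word in doc:
            vocab.setdefault(word, len(vocab))
    # Pass 2: scan again, encoding each document as sparse {index: count}.
    rows = []
    for doc in docs:
        row: Dict[int, int] = {}
        for word in doc:
            idx = vocab[word]
            row[idx] = row.get(idx, 0) + 1
        rows.append(row)
    return rows

def hashed_vectorize(docs: List[List[str]], size: int = 2**10) -> List[Dict[int, int]]:
    # Single pass: the index is a hash of the word, so no vocabulary is stored
    # and previously unseen words still map to a valid index.
    rows = []
    for doc in docs:
        row: Dict[int, int] = {}
        for word in doc:
            idx = zlib.crc32(word.encode("utf-8")) % size
            row[idx] = row.get(idx, 0) + 1
        rows.append(row)
    return rows
```

The memory saved is the vocabulary itself; the price is that an index in the hashed rows no longer identifies a single word.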

Question : In machine learning, feature hashing, also known as the hashing trick (by analogy to the kernel trick), is a fast and space-efficient way of vectorizing features (such as the words in a language), i.e. turning arbitrary features into indices in a vector or matrix. It works by applying a hash function to the features and using their hash values modulo the number of features as indices directly, rather than looking the indices up in an associative array.

So what is the primary reason for using the hashing trick when building classifiers?

1. It creates smaller models

2. It requires less memory to store the model's coefficients

3. It reduces non-significant features, e.g. punctuation

4. Noisy features are removed

Correct Answer : 2

Explanation: This hashed feature approach has the distinct advantage of requiring less memory and one less pass through the training data, but it can make it much harder to reverse engineer vectors to determine which original feature mapped to a vector location. This is because multiple features may hash to the same location. With large vectors or with multiple locations per feature, this isn't a problem for accuracy, but it can make it hard to understand what a classifier is doing.

Models always have one coefficient per feature, and these coefficients are stored in memory during model building. The hashing trick collapses a large number of features into a small number of buckets, which reduces the number of coefficients and thus the memory requirements.
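A minimal sketch of why memory stays fixed: a logistic regression trained by SGD on hashed words keeps exactly one coefficient per bucket, however many distinct words appear. The function names, CRC32 hash, 16 buckets, learning rate, and epoch count are all illustrative assumptions, and the model omits an intercept for brevity.

```python
import math
import zlib
from typing import List, Tuple

def bucket(word: str, num_buckets: int) -> int:
    # Hash the word and reduce it modulo the number of buckets.
    return zlib.crc32(word.encode("utf-8")) % num_buckets

def train_sgd(data: List[Tuple[List[str], int]], num_buckets: int = 16,
              lr: float = 0.5, epochs: int = 20) -> List[float]:
    # One coefficient per bucket, not per distinct word.
    weights = [0.0] * num_buckets
    for _ in range(epochs):
        for words, label in data:
            idxs = [bucket(w, num_buckets) for w in words]
            z = sum(weights[i] for i in idxs)
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of label 1
            # Log-loss gradient step for every active (hashed) feature.
            for i in idxs:
                weights[i] += lr * (label - p)
    return weights
```

With training data that uses 101 distinct words, `train_sgd` still returns only 16 coefficients; a dictionary-based model would need 101.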

Noisy features are not removed; they are combined with other features and so still have an impact.
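The sketch below illustrates that point: it searches for a hypothetical "noise" token that hashes to the same bucket as a real token, then shows that both land in (and add to) the same vector slot, and therefore share one coefficient. CRC32, the 8-bucket vector, and the token names are assumptions chosen only to make a collision easy to find.

```python
import zlib
from itertools import count

def bucket(token: str, num_buckets: int) -> int:
    return zlib.crc32(token.encode("utf-8")) % num_buckets

def find_collision(target: str, num_buckets: int) -> str:
    # Brute-force a hypothetical noise token sharing the target's bucket.
    goal = bucket(target, num_buckets)
    for i in count():
        candidate = f"noise{i}"
        if bucket(candidate, num_buckets) == goal:
            return candidate

num_buckets = 8
noise = find_collision("refund", num_buckets)
vec = [0] * num_buckets
for token in ["refund", noise]:
    vec[bucket(token, num_buckets)] += 1
# vec[bucket("refund", num_buckets)] is now 2: the noise token was not removed,
# it was merged into the same slot as "refund".
```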

The validity of this approach depends heavily on the nature of the features and the problem domain; domain knowledge is important for judging whether it is applicable or will likely produce poor results. While hashing features may produce a smaller model, that model is built from odd combinations of real-world features and so is harder to interpret.

An additional benefit of feature hashing is that the unknown and unbounded vocabularies typical of word-like variables aren't a problem.

Related Questions

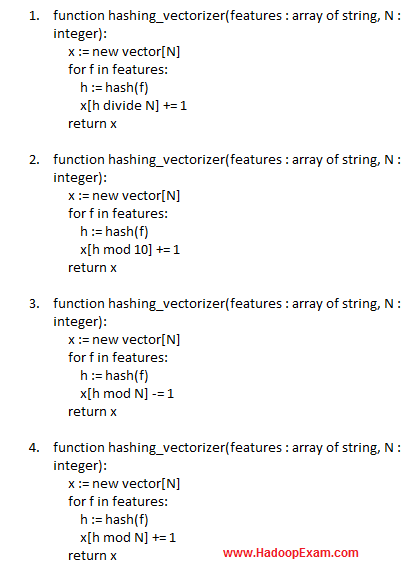

Question : Select the correct pseudo function for the hashing trick

1.

2.

3.

4.

Question : What is the key difference between L1 and L2 regularization?

1. L1 regularization produces a more accurate model

2. The model produced by L1 regularization can be much smaller than that produced by L2 regularization

3. L2 regularization can be of vital importance when the application is deployed in resource-constrained environments such as cell phones

4. All of the above are correct
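Why L1 can yield a smaller model: in proximal-gradient style training, the L1 penalty acts through soft-thresholding, which sets small coefficients to exactly zero, while the L2 penalty only scales coefficients toward zero. The sketch below applies both shrinkage operators to the same hypothetical weight vector; the weights and `lam = 0.1` are made up for illustration, and this is one common way to see the effect rather than the method behind the question.

```python
import math
from typing import List

def l1_prox(w: List[float], lam: float) -> List[float]:
    # Soft-thresholding: proximal operator of lam * sum(|w_i|).
    return [math.copysign(max(abs(x) - lam, 0.0), x) for x in w]

def l2_prox(w: List[float], lam: float) -> List[float]:
    # Proximal operator of (lam / 2) * sum(w_i ** 2): uniform scaling.
    return [x / (1.0 + lam) for x in w]

w = [0.05, -0.3, 1.2, -0.02, 0.6]
l1_w = l1_prox(w, 0.1)  # the two small weights (0.05 and -0.02) become exactly 0
l2_w = l2_prox(w, 0.1)  # every weight shrinks, none becomes 0
```

Storing only the non-zero coefficients (for example as an index-to-value map) is what makes the L1 model smaller.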

Question : Which of the following could be features?

1. Words in the document

2. Symptoms of a disease

3. Characteristics of an unidentified object

4. Only 1 and 2

5. All of 1, 2, and 3 are possible

Question : Regularization is a very important technique in machine learning for preventing overfitting.

Mathematically speaking, it adds a regularization term to the loss to keep the coefficients from fitting the training data so closely that the model overfits.

The difference between L1 and L2 regularization is _________

1. L2 is the sum of the squares of the weights, while L1 is the sum of the absolute values of the weights

2. L1 is the sum of the squares of the weights, while L2 is the sum of the absolute values of the weights

3. L1 gives non-sparse outputs while L2 gives sparse outputs

4. None of the above
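Concretely, the two penalty terms are usually written as lam * sum(|w_i|) for L1 and lam * sum(w_i ** 2) for L2, added to the data loss. The sketch below computes both for one weight vector; the function names, the weights, and `lam` are illustrative assumptions.

```python
from typing import List

def l1_penalty(w: List[float], lam: float) -> float:
    # L1: sum of absolute values, so the sign of each weight does not matter.
    return lam * sum(abs(x) for x in w)

def l2_penalty(w: List[float], lam: float) -> float:
    # L2: sum of squares, so large weights are penalized disproportionately.
    return lam * sum(x * x for x in w)

def regularized_loss(data_loss: float, w: List[float], lam: float, kind: str = "l2") -> float:
    # Total objective = data loss + chosen penalty term.
    penalty = l1_penalty(w, lam) if kind == "l1" else l2_penalty(w, lam)
    return data_loss + penalty

w = [0.5, -2.0, 0.0]
print(l1_penalty(w, 1.0))  # 2.5
print(l2_penalty(w, 1.0))  # 4.25
```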

Question :

1.

2.

3.

4.

Question : Select the correct option that applies to L2 regularization

1. Computationally efficient due to having an analytical solution

2. Non-sparse outputs

3. No feature selection

4. All of the above
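The "analytical solution" in option 1 refers to the fact that L2-regularized least squares (ridge regression) has a closed form, w = (X^T X + lam * I)^(-1) X^T y. The sketch below assumes a squared-error loss, a penalty of lam * ||w||^2, no intercept, and NumPy; it solves the linear system rather than forming the inverse explicitly.

```python
import numpy as np

def ridge_closed_form(X: np.ndarray, y: np.ndarray, lam: float) -> np.ndarray:
    # Minimizes ||X w - y||^2 + lam * ||w||^2 by solving (X^T X + lam I) w = X^T y.
    n_features = X.shape[1]
    A = X.T @ X + lam * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
y = rng.normal(size=50)
w = ridge_closed_form(X, y, lam=1.0)
# The gradient X^T (X w - y) + lam * w is (numerically) zero at this w,
# and, unlike an L1 solution, the coefficients are generally all non-zero.
```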